Dear community,

We are in the process of building a file server for our small postproduction / 3D company. We need fast and responsive access from several Windows 10 clients (workstations) to a centralized storage server for our collaborative projects. Besides video data / raw footage, we mainly work with image sequences (EXR images in Full HD or 4K resolution, typically about 8 MByte each, varying from several hundred KByte to 25 MByte). Accessing our data from an internal SSD allows for smooth playback and operation; our goal is to achieve a similar degree of operability over a 10GbE network on our new centralized storage.
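As a rough sanity check on that goal, the bandwidth needed for real-time playback can be estimated from the frame sizes above. This is only a sketch: the 24 fps frame rate and the 0.5 MByte "small" frame are assumptions for illustration, and the link figures are raw line rates without any protocol overhead.

```python
# Rough bandwidth estimate for image-sequence playback.
# ASSUMPTION: 24 fps playback (not stated in the post).
# ASSUMPTION: 0.5 MByte stands in for "several hundred KByte".
FPS = 24
LINE_RATE_10GBE_MB_S = 10_000 / 8  # 10 Gbit/s raw = 1250 MByte/s
LINE_RATE_1GBE_MB_S = 1_000 / 8    # 1 Gbit/s raw = 125 MByte/s


def required_mb_per_s(frame_mb, fps=FPS):
    """Sustained throughput needed to deliver one frame per frame interval."""
    return frame_mb * fps


def frame_budget_ms(fps=FPS):
    """Time available to fetch a single frame during real-time playback."""
    return 1000 / fps


for label, size_mb in [("small", 0.5), ("typical", 8), ("large", 25)]:
    need = required_mb_per_s(size_mb)
    share = need / LINE_RATE_10GBE_MB_S
    print(f"{label:8s} {size_mb:5.1f} MByte/frame -> {need:6.1f} MByte/s "
          f"({share:.0%} of 10GbE line rate)")
```

Under these assumptions a typical 8 MByte frame needs about 192 MByte/s, which is more than the raw rate of a 1GbE link but well within 10GbE, and each frame has to arrive within roughly 42 ms.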

So far we have successfully built a server based on decent Supermicro hardware with a sufficient amount of RAM. We are running TrueNAS Core 12 and wanted to run some initial network performance tests before implementing our full storage. Please take a look at the signature for detailed system specs.

Right now we have only a few workstations to connect to the server, so our idea was to initially skip the 10GbE switch and instead put 2-3 network interface controllers inside the server and connect each directly to a Win10 client, each link using a different network ID (so not on the same subnet). No bridging is intended. The 10GbE connections are used for nothing but storage access. All other communication between the workstations runs over a conventional 1GbE network.

A switch will be added in the future once there is a need to connect additional clients.

For testing purposes we run only a single storage device: a Samsung EVO 860 SSD 512 GByte. It has been tested under Windows 10 and runs flawlessly.

We basically got our network running as intended, with several independent connections from several clients to the server.

Beforehand we did some tests using Windows 10 only, directly connecting our workstations with each other in different combinations. Under Win10 everything works as intended. Connection speeds are perfect, and all NICs, transceivers and cabling work flawlessly. We get "correct" benchmarks, and real-life file access is more or less the same as if the files (image sequences) were stored on a local SSD.

Right now we have access to:

- two different Intel X520 cards (genuine, passed YottaMark verification), with original Intel transceivers as well as Intel-compatible ones from fs.com,

- one "OEM" Intel X710-DA4 (probably a Chinese knockoff),

- two Mellanox ConnectX-3 cards with transceivers from Mellanox and fs.com,

- proper OM4 fiber cable and optional DAC cables,

- as well as a 10GBase-T option (Supermicro / Intel X540 NIC in the server and onboard Aquantia NICs in two workstations).

Next we ran the same performance tests against TrueNAS 12, using different combinations of NICs in the server and the workstations.

The single SSD is shared using SMB.

The problem we ran across is that every time we use an Intel-based NIC inside the TrueNAS server, we get poor read performance when accessing small(er) files (such as our typical image sequences), while sequential read performance is very good in every situation.

When we instead access the server through one of its Mellanox CX3 adapters, read performance is perfect.

It's always the same behaviour, regardless of which combination of NIC, transceiver, PCIe slot and so on we use. Mellanox NICs give us great read performance in both sequential and small-file access, while Intel NICs have problems accessing smaller files.

The problem can be measured in benchmarks and is also noticeable under real-world conditions (processing / playback of image sequences in our editing software). Accessing image sequences via the server's Intel NICs is even slower than over a conventional 1GbE connection, while transfers of huge files (ZIP folders, raw video footage) saturate 10GbE perfectly. Interestingly, it does not matter whether the image sequences are cached in TrueNAS' ARC or not; the read performance remains slow.
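For anyone who wants to reproduce the symptom without a benchmark GUI, a minimal sketch like the following could time whole-file reads of an image sequence on the share. The function name `time_sequence_read` and the drive path in the usage line are hypothetical, and client-side caching on Windows may affect repeated runs over the same files.

```python
import time
from pathlib import Path


def time_sequence_read(folder, pattern="*.exr", chunk_size=1024 * 1024):
    """Read every matching file once, in name order.

    Returns (file_count, total_bytes, elapsed_seconds).
    """
    files = sorted(Path(folder).glob(pattern))
    total_bytes = 0
    start = time.perf_counter()
    for path in files:
        # buffering=0 disables Python's own buffering only;
        # the operating system and SMB client may still cache.
        with open(path, "rb", buffering=0) as fh:
            while True:
                chunk = fh.read(chunk_size)
                if not chunk:
                    break
                total_bytes += len(chunk)
    elapsed = time.perf_counter() - start
    return len(files), total_bytes, elapsed


def summarize(file_count, total_bytes, elapsed):
    """Turn the raw numbers into MByte/s and average milliseconds per file."""
    if file_count == 0 or elapsed <= 0:
        return None
    mb_per_s = total_bytes / 1_000_000 / elapsed
    ms_per_file = elapsed * 1000 / file_count
    return mb_per_s, ms_per_file
```

Usage would be something like `summarize(*time_sequence_read(r"Z:\shots\seq01"))`, run once with the share mapped through an Intel NIC and once through a Mellanox NIC, so that the average time per file can be compared directly.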

We made some benchmarks / screenshots to explain the problem. For further explanations, please see below:

The above screenshots (iperf3) show that the connections themselves are okay. The NIC named in red letters is the one inside the TrueNAS box.

With iperf3, the results are almost the same regardless of the kind of NIC in the Win10 client, the transceiver and so on. The RJ45 connections also show more or less the same behaviour.

To keep things simple, jumbo frames are off (though I get slightly different results with jumbo frames enabled; the Mellanox cards in particular run a bit faster with them).

Next, we have CrystalDiskMark. As you can see, when going through the Mellanox cards we get snappy file access in every situation. With Intel, however, read performance tanks dramatically in certain situations.

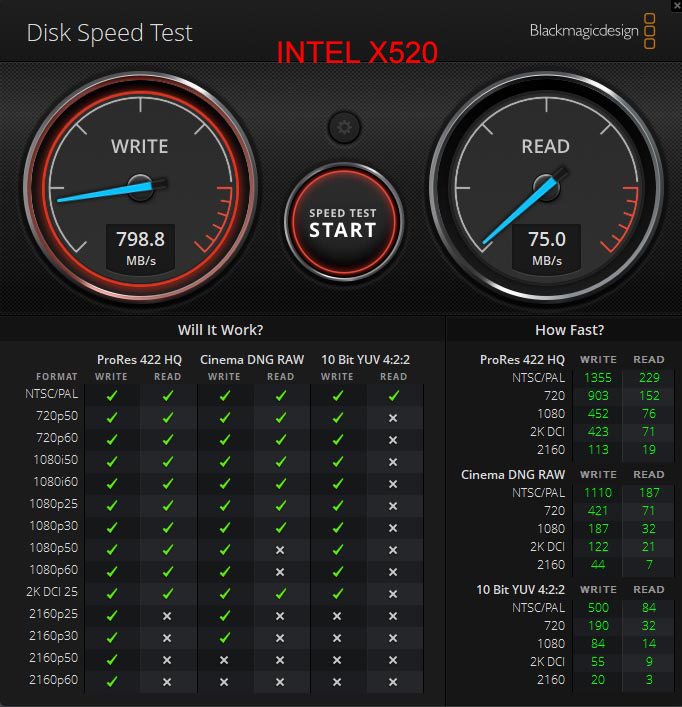

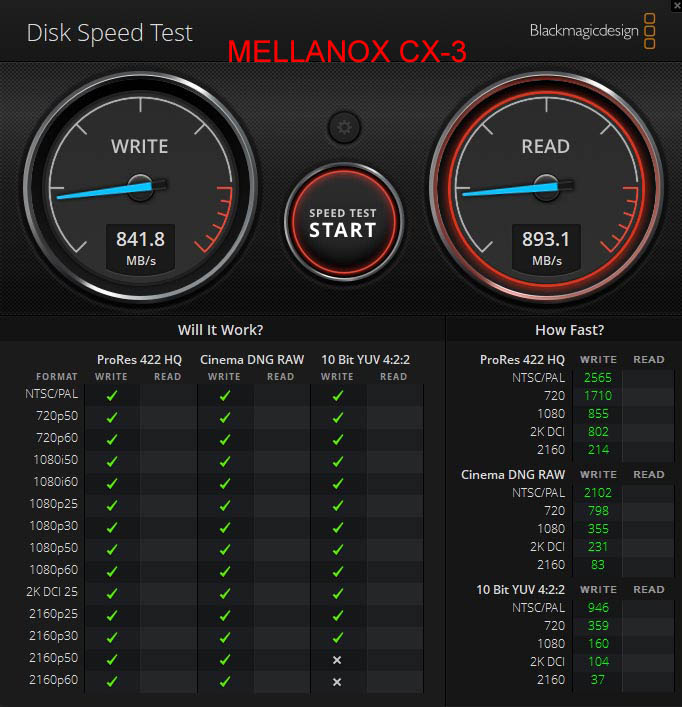

The same goes for the Blackmagic Disk Speed Test, which delivers perfect performance values with the Mellanox cards but very bad performance with every Intel NIC.

The RJ45 connection (Intel X540) seems generally a bit faster than the X520 / X710 with optics or DAC. Nevertheless, the performance is not acceptable.

While the above screenshots were taken without any tunables, we did try different settings, especially regarding buffer sizes and other things we came across in various postings. We got small variations, but the main problem persisted: the Intel controllers in the TrueNAS box seem to suffer a major performance hit when accessing smaller files, while huge sequential transfers are no problem at all.

Has anybody had similar experiences? Is there a simple solution to this? Is some driver setup / tuning / optimization required? Our results are from an out-of-the-box installation, so maybe there is a simple setting we missed?

Do you need additional information?

Thank you very much,

Leif
