liukuohao (Dabbler, joined Jun 27, 2013, 39 messages)

Hi All,

FreeNAS Server Hardware Spec:

Motherboard = PDSME

CPU = Pentium D 930, 3.0 GHz dual-core, LGA 775 socket

RAM = 8 GB DDR2 (the board's maximum)

NIC = Intel 82573 PCI-E Gigabit Ethernet

OS = FreeNAS v9.1.0 x64

File system = ZFS

The server uses the motherboard's ICH7R southbridge as the SATA II controller for the 4 disks.

Mirror A = 2TB WD20EARX x 2

Mirror B = 2TB WD20EARSX x 2

WD IntelliPark = disabled

ZFS RAID 10 = Mirror A striped with Mirror B

Total capacity = 3.6TB
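The 3.6TB figure follows from the layout: each 2-way mirror stores one disk's worth of data, and the two mirrors are striped. A quick sketch of the arithmetic, assuming "2TB" is the drive makers' decimal 2 × 10^12 bytes and that FreeNAS reports the total in binary units (TiB). It ignores ZFS metadata and other overhead.

```python
# Assumption: "2TB" on the WD label means 2 * 10**12 bytes (decimal),
# while the 3.6TB shown by FreeNAS is in TiB (2**40 bytes).
DISK_BYTES = 2 * 10**12   # one 2TB WD drive
MIRRORS = 2               # Mirror A striped with Mirror B

# A 2-way mirror holds one disk's worth of data, so usable space is
# one disk per mirror times the number of striped mirrors.
usable = DISK_BYTES * MIRRORS
tib = usable / 2**40

print(f"usable = {usable} bytes = {tib:.2f} TiB")
```

This prints about 3.64 TiB, which matches the 3.6TB reported for the pool, so no capacity is missing from the RAID 10 layout.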

ZVOL created

Volume size = 3TB (cannot set higher)

Compression Level = Off

Block Size = 4,096 bytes

iSCSI device extent (not a file extent) created

iSCSI initiator created

iSCSI portal added

iSCSI target created, Logical Block Size = 4096 bytes

Extent associated with the target.

Windows 7 Client PC

The Windows 7 Ultimate 64-bit client PC connects to the FreeNAS server's iSCSI target successfully.

It is connected to the FreeNAS server over a dedicated network, with a separate gigabit switch and its own network cables.

The client PC's third-party virus scanner and firewall are disabled. However, the built-in Windows 7 firewall is automatically activated by default when the third-party firewall is disabled.

The client PC uses an Atheros L1 Gigabit Ethernet adapter with these settings (Device Manager > Network adapters > Advanced > Property):

Flow Control = ON

Interrupt Moderation = ON

Max IRQ per Second = 5000

Maximum Frame Size = 1514

Media Type = Auto

Network Address = Not Present

Number of Receive Buffers = 256

Number of Transmit Buffers = 256

Power Saving Mode = Off

Shutdown wake up = Off

Sleep Speed down to 10M = On

Task Offload = On

Wake Up Capabilities = None
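To put "Max IRQ per Second = 5000" in perspective, here is a rough sketch of how many frames each interrupt must cover at a given throughput. It assumes the cap really limits the adapter to 5000 interrupts per second, that MB means 10^6 bytes, and that every frame is full-size (1514 bytes, per "Maximum Frame Size"), so the frames-per-interrupt figures are lower bounds.

```python
FRAME_BYTES = 1514        # "Maximum Frame Size" from the adapter settings
MAX_IRQ_PER_SEC = 5000    # "Max IRQ per Second" from the adapter settings

def frames_per_interrupt(throughput_mb_s: float) -> float:
    # Assumes MB = 10**6 bytes and full-size frames only; smaller frames
    # would mean even more frames per interrupt.
    frames_per_sec = throughput_mb_s * 10**6 / FRAME_BYTES
    return frames_per_sec / MAX_IRQ_PER_SEC

# 39 and 92 MB/s are the measured write/read speeds; 80 MB/s is the target.
for rate in (39, 80, 92):
    print(f"{rate} MB/s -> {frames_per_interrupt(rate):.1f} frames per interrupt")
```

Under these assumptions the adapter batches roughly 5 frames per interrupt at 39 MB/s and roughly 11 at 80 MB/s.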

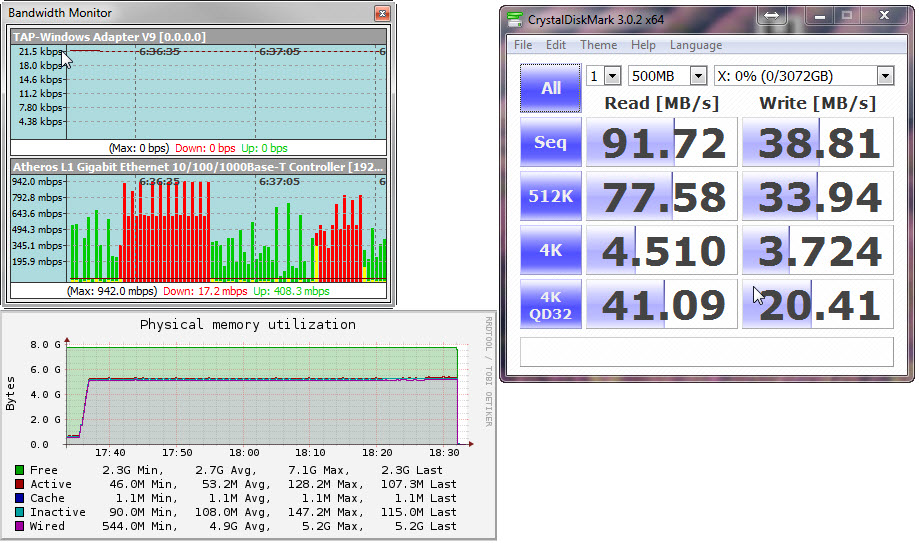

Below are the results I captured.

As you can see, CrystalDiskMark shows a sequential write speed of approx. 39 MB/s.

I cannot complain about the sequential read speed of 92 MB/s.

According to the Bandwidth Monitor, the write traffic is very choppy and unsteady (shown by the vertical green lines).

FreeNAS uses approx. 5 GB of RAM on average during the write operation, as you can see on the physical memory utilization diagram below.
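For comparison with the measured figures, this is a back-of-the-envelope ceiling for TCP payload over a single gigabit link. It assumes a 1500-byte MTU (consistent with the 1514-byte frame size), IPv4 and TCP headers without options, and MB meaning 10^6 bytes. It ignores iSCSI PDU headers and TCP options, so the real ceiling is a little lower.

```python
LINK_BITS_PER_SEC = 1_000_000_000   # gigabit Ethernet
MTU = 1500                          # 1514-byte frame minus 14-byte Ethernet header
IP_TCP_HEADERS = 20 + 20            # IPv4 + TCP headers, no options (assumption)
ETH_HEADER = 14
WIRE_EXTRA = 4 + 8 + 12             # FCS + preamble/SFD + inter-frame gap

def tcp_goodput_mb_s() -> float:
    payload = MTU - IP_TCP_HEADERS            # 1460 bytes of TCP payload per frame
    on_wire = MTU + ETH_HEADER + WIRE_EXTRA   # 1538 bytes of line time per frame
    return LINK_BITS_PER_SEC / 8 * payload / on_wire / 10**6

ceiling = tcp_goodput_mb_s()
for label, measured in (("sequential write", 39), ("sequential read", 92)):
    print(f"{label}: {measured} MB/s = {measured / ceiling:.0%} of ~{ceiling:.1f} MB/s")
```

On these assumptions the link tops out near 118.7 MB/s, so the read result uses about 78% of it while the write result uses only about 33%.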

Question:

Would more RAM, say 16 GB, improve the write speed to perhaps 80 MB/s?

Your advice is much appreciated.

Thank you.
