kyaky (Cadet, joined Feb 25, 2021, 2 messages)

Hi Community,

I'm having issues with NVMe ZFS pool performance over 100GbE.

The setup:

The Server:

TrueNAS 12.0 U2.1

CPU: AMD Ryzen 9 3900X

Motherboard: X570 Aorus

Memory: 128 GB

Mellanox 100G SFP

3x 2 TB PCIe 4.0 NVMe (benchmark: >3500 MB/s read/write)

The Client:

CPU: Intel Core i7 (9th gen)

Memory: 16 GB

Mellanox 100G SFP

1x NVMe (benchmark: >3500 MB/s read/write)

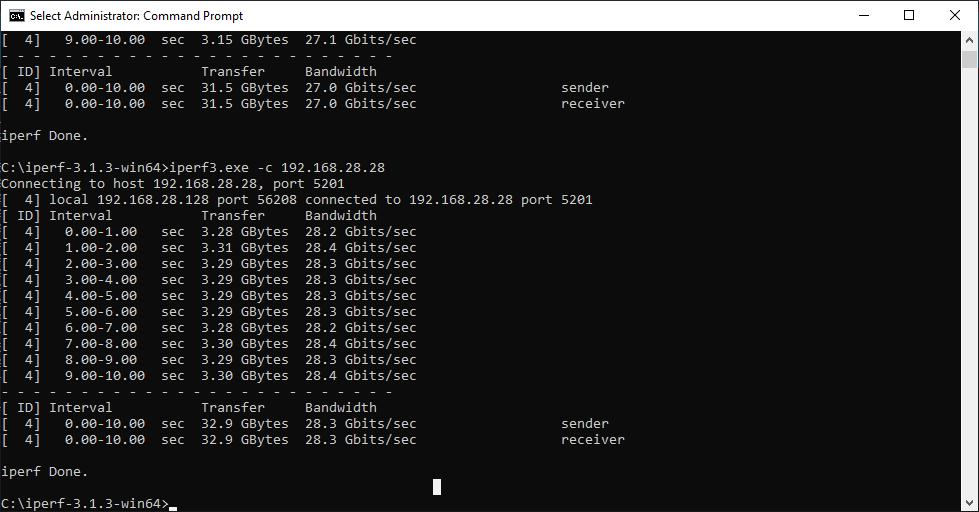

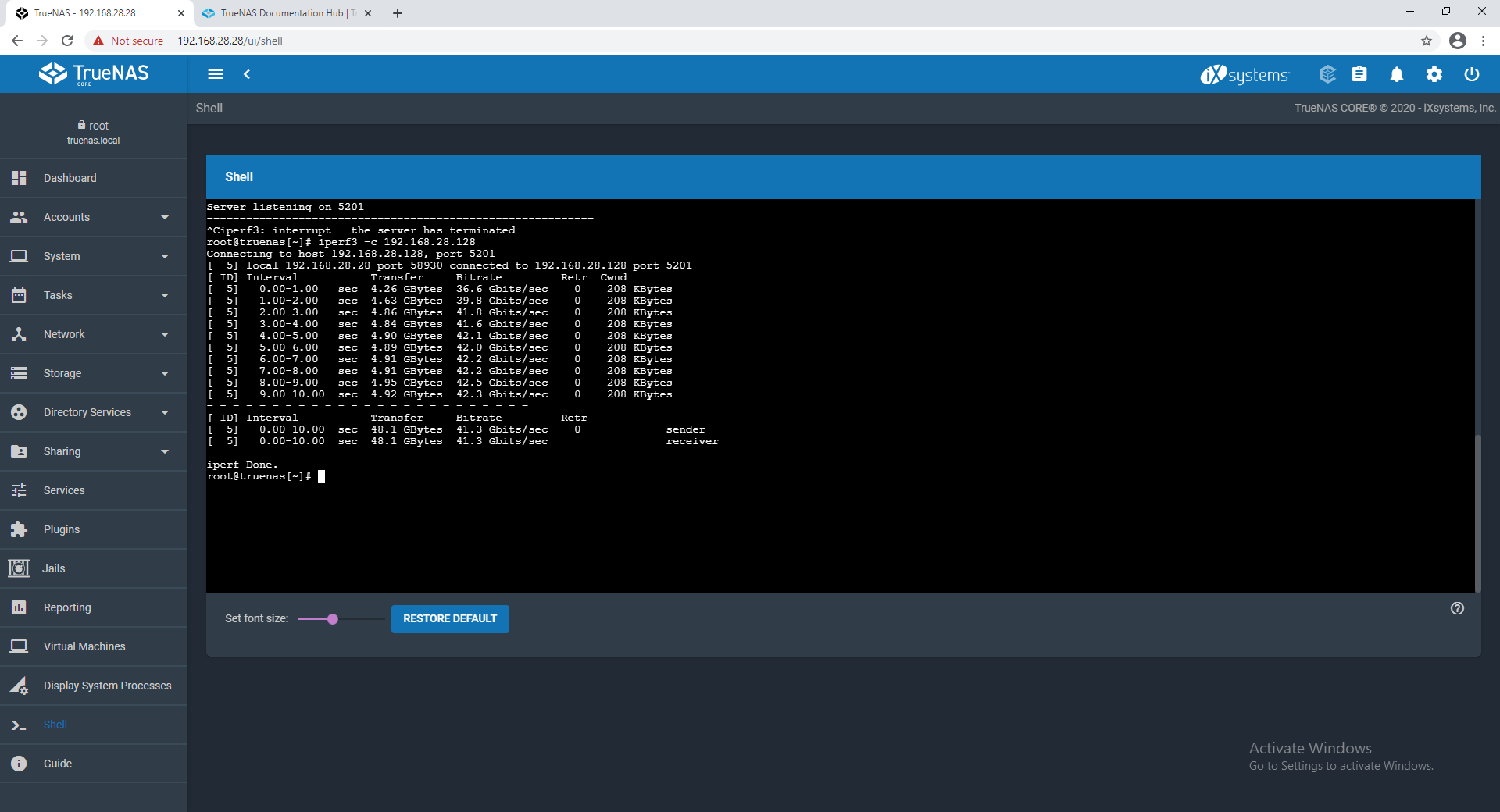

Network benchmark using iperf3 between client and server:

30-40 Gbit/s. There is a bottleneck somewhere between the two systems, but in theory that should still allow 30/8 to 40/8 = 3.75-5 GB/s.
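The bits-to-bytes conversion behind those numbers, as a small Python sketch. It ignores TCP/IP and SMB framing overhead, so real payload throughput will be somewhat lower than these figures.

```python
def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a line rate in Gbit/s to GB/s (8 bits per byte, no protocol overhead)."""
    return gbit_per_s / 8


# The iperf3 range measured between client and server.
for rate in (30, 40):
    print(f"{rate} Gbit/s ~= {gbit_to_gbyte(rate):.2f} GB/s")
```

This prints `30 Gbit/s ~= 3.75 GB/s` and `40 Gbit/s ~= 5.00 GB/s`; the full 100 Gbit/s line rate would be 12.5 GB/s.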

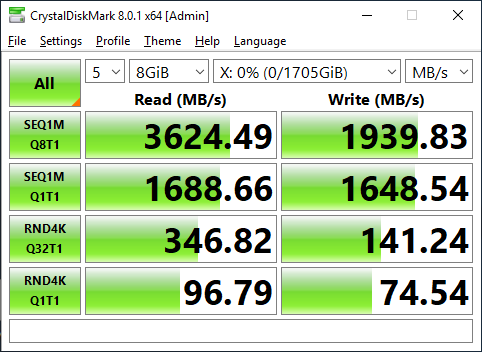

The pool test:

pool1:

1x NVMe by itself.

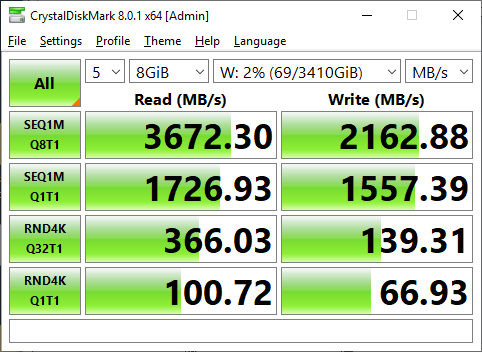

pool2:

2x NVMe stripe, i.e.

vdev1: 1x NVMe

vdev2: 1x NVMe

Sync writes disabled, MTU 9000.

pool1:

pool2:

As you can see, there is no performance gain at all for pool2. I also tested a 3x NVMe stripe and still saw no gain.

Shouldn't pool2 be twice as fast as pool1 and saturate the available (bottlenecked) 100G link at 30-40 Gbit/s?
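To put numbers on that question, here is an idealized back-of-the-envelope model in Python. `stripe_ceiling` is a hypothetical helper, not anything in ZFS or TrueNAS: it assumes sequential throughput of a stripe adds up linearly per vdev until the network caps it, and it ignores ZFS, SMB and CPU overhead entirely.

```python
def stripe_ceiling(n_vdevs: int, per_vdev_gbs: float, network_gbs: float) -> float:
    """Idealized upper bound in GB/s: vdev throughput adds up until the network caps it."""
    return min(n_vdevs * per_vdev_gbs, network_gbs)


PER_NVME_GBS = 3.5          # per-drive benchmark figure quoted above
NETWORK_GBS = (3.75, 5.0)   # iperf3 range converted to GB/s

for net in NETWORK_GBS:
    for n in (1, 2, 3):
        bound = stripe_ceiling(n, PER_NVME_GBS, net)
        print(f"network {net:.2f} GB/s, {n} vdev(s): <= {bound:.2f} GB/s")
```

Under these assumptions a single drive (3.5 GB/s) already sits close to the 3.75-5 GB/s network range, so even perfect striping could add only roughly 7-43% over pool1. The measured 1.5 GB/s is well below both bounds, which suggests the limit lies somewhere above the disks, though this model cannot say where.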

In a real-world copy over a Samba share:

server -> client

60 GB file

pool1: 1.5 GB/s

pool2: 1.5 GB/s

To test whether that is a single-pool limit, I tried:

server -> client

2x 60 GB files, one from pool1 and one from pool2, at the same time: 800 MB/s + 800 MB/s

So it seems that in this system, the total outbound traffic is limited to 1.5-1.6 GB/s.
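The reasoning behind that test can be written as a simple heuristic. This is a hypothetical Python helper, and the 15% tolerance is an arbitrary assumption: if each pool were its own bottleneck, copying from two pools in parallel should roughly double the total, whereas a flat total points at something shared.

```python
import math
from typing import Sequence


def looks_like_shared_limit(single_gbs: float, concurrent_gbs: Sequence[float],
                            rel_tol: float = 0.15) -> bool:
    """True if parallel transfers add up to roughly the single-transfer rate.

    rel_tol is an arbitrary tolerance chosen for illustration.
    """
    return math.isclose(sum(concurrent_gbs), single_gbs, rel_tol=rel_tol)


single = 1.5              # GB/s, one 60 GB copy from either pool
concurrent = [0.8, 0.8]   # GB/s, pool1 + pool2 copied at the same time
print(f"aggregate {sum(concurrent):.1f} GB/s vs single {single:.1f} GB/s")
print("shared limit?", looks_like_shared_limit(single, concurrent))
```

With the figures above the aggregate (1.6 GB/s) is within tolerance of the single-copy rate (1.5 GB/s), so the helper returns `True`, matching the conclusion that the cap is not per pool.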

With the default FreeBSD tunable settings, the result was worse. The tests above were done after some tweaks to the tunables.

Is this a bottleneck in TrueNAS or in my existing hardware? How can I improve this?

Many Thanks
