Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums

Build Report: Node 304 + X10SDV-TLN4F [ESXi/FreeNAS AIO]

- Thread starter Stux

> Will write up the pfSense install eventually.

Hi, is this still in play? :)

I'm thinking of doing this exact same thing (running FreeNAS and pfSense as vm under ESXi), and I would love to see your take on the pfSense part as well!

Stux

MVP

- Joined

- Jun 2, 2016

- Messages

- 4,419

> Hi, is this still in play? :)
>
> I'm thinking of doing this exact same thing (running FreeNAS and pfSense as vm under ESXi), and I would love to see your take on the pfSense part as well!

Been busy :(

Worked it out though.

Eds89

Contributor

- Joined

- Sep 16, 2017

- Messages

- 122

Stux, what kind of benchmarks did you run performance wise, and what kind of results did you achieve?

I'm having some odd issues I cannot seem to find the cause of;

I have FreeNAS running as a VM on ESXi (stored on a 960 Evo SSD), with its storage passed back to ESXi over an internal vSwitch for other VM storage (using a VMXNET3 NIC so the link is capable of 10Gbps).

I have 4x 2TB drives in a mirror, with an SSD slog and L2ARC (stored as VMDK on my 960 evo SSD).

This pool has a single sparse zvol with sync enabled, which is added to ESXi via iSCSI (MTU on the FreeNAS interface and the ESXi switch set to 9000).

I also then have 4x 3TB drives in a mirror, with no SLOG or L2ARC added, which has one dataset with sync disabled. This then has an SMB share on it for client file storage.

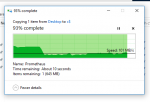

If I copy a large file (7GB) from a test VM which is on the 960 evo (to avoid read speed bottlenecks), over the network (internal ESXi switch all VMXNET3 so 10Gbps) to a VM stored on the iSCSI datastore in FreeNAS, I see about 300MB/s for a little while, then it drops down to below 100MB/s as per attachment. I can definitely see activity on the SSD SLOG, and I know it is capable of far higher than 100MB/s

If I copy the same file from the same VM to the SMB share, I get a constant ~350MB/s

If I do the reverse, and copy from a VM on the iSCSI datastore to the test VM, it tends to stick around 100MB/s

If I copy from the SMB share to the test VM, I get an almost constant 350MB/s

Any thoughts on where I should be looking for issues, as the disks hosting the iSCSI for VMs are 7200rpm, compared with the 5400rpm of those with just an SMB share on, so I would expect any difference to be the other way around.

Chris Moore

Hall of Famer

- Joined

- May 2, 2015

- Messages

- 10,079

> with an SSD slog and L2ARC (stored as VMDK on my 960 evo SSD)

Is that a SATA SSD? If it is, that is going to be a performance bottleneck. Your best speed from a SATA SSD is around 450MB/s, after overhead.

How many SSDs do you have in the system?

> If I copy a large file (7GB) from a test VM which is on the 960 evo (to avoid read speed bottlenecks)

Is that the same 960 that you are also using for your SLOG and to host the VMs?

@Stux , what do you say, am I understanding this correctly?

Stux

MVP

To directly answer the question, I tested the iSCSI/NFS performance and all the various disks using macos/linux and Windows in VMs. Sometimes with direct pass-through.

I also conducted various iPerf tests from various VMs to FreeNAS, and from real hardware over 10gbe switches etc.

As @Chris Moore points out, sync write performance is greatly affected by the SLOG. I performed a number of benchmarks in this thread: https://forums.freenas.org/index.php?threads/testing-the-benefits-of-slog-using-a-ram-disk.56561

And you can use the same methodology.

A 960 Evo makes a terrible SLOG btw.

So, can you provide some more detail on what hardware you actually have, and how it's set up?

I think you're using a VMDK as your SLOG, and the VMDK is on your Evo. I never did determine if the sync writes are getting through to ESXi. I assume they're not really, otherwise I'd expect performance to be pretty bad.

My initial SLOG via VMDK tests were performed with a Samsung 960 Evo holding a VMDK, which might make a difference, but notice I upgraded to an Intel P3700 after doing my RAM disk tests.

Also, be aware that L2ARC can cause performance issues if you don't have enough L1ARC (aka ARC) as a result of not having sufficient extra RAM to support the L2ARC data.

My system seems to only be able to write a total of about 1GB/s or so, and that then results in circa 450MB/s when using a SLOG with sync=always as everything gets written twice.

Have a good look through the RAM disk test thread.
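The "written twice" arithmetic in this post can be sketched as a rough ceiling. This is only an illustration: the function name, and the simplifying assumption that both copies of the data draw on one shared write budget, are not from the thread.

```python
def sync_write_ceiling(total_write_mb_s: float, copies: int = 2) -> float:
    """Rough client-visible ceiling when every byte is written `copies` times.

    Assumes (hypothetically) that all copies share one fixed write budget.
    """
    return total_write_mb_s / copies


# Figures quoted in the post: roughly 1GB/s total, roughly 450MB/s observed.
ceiling = sync_write_ceiling(1000)
observed = 450
print(f"ceiling: {ceiling:.0f} MB/s, observed/ceiling: {observed / ceiling:.0%}")
```

Under that assumption the ceiling is 500MB/s, so the reported 450MB/s would be about 90% of it.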

Eds89

Contributor

In response to Chris' questions;

1. The SSD I am using as a SLOG is a Samsung SM953 NVMe SSD passed directly through to FreeNAS and dedicated to SLOG

It is capable of about 1.8GB/s read and roughly 1.5GB/s write I believe

2. The only things on the 960 Evo in this test, are FreeNAS install VMDK, Test VM VMDK and L2ARC VMDK

I have noticed similarly poor/fluctuating copy speeds even when the test VM is stored elsewhere, and even when copying from a VM on my iSCSI datastore, directly to my SMB share.

The underlying hardware is a SuperMicro X9SRL-F MB with an E5-2618L v2 6 core processor 12 threads, 64GB RAM.

FreeNAS is virtualised, and has been assigned 24GB RAM and 4 vCPUs.

It has an LSI 9207-8i passed through directly with access to all large spinners, in addition to the SM953 NVMe SSD for use as a SLOG.

Drives are a mix of 7200rpm Hitachi NAS drives, and 5400rpm WD Reds.

Config is essentially the same as you have been doing in this thread, other than slightly different hardware.

All tests are internal to the ESXi host, as outside that I only have 1Gbps networking.

You did suggest RAM disk tests in another thread I posted in, and I saw similar 450MB/s write speeds, but about 900MB/s writes in CrystalDiskMark. I then rebooted the VM after increasing the MTU in FreeNAS, and seemed to start getting 900MB/s reads and writes. Nothing has changed since then, and now the speeds have dropped down to the 100MB/s in my screenshots.

This pattern seems to be true whether sync is enabled or disabled on the zvol hosting the iSCSI datastore.

My L2ARC is 64GB sitting on a VMDK on the 960 Evo. Would you expect that to be ok with 24GB ram assigned to FreeNAS? How can I go about determining if that might be an issue?
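One rough way to reason about the L2ARC question is to estimate how much ARC memory the L2ARC index itself would need when the device is full. The sketch below is hedged heavily: the 16KiB record size and the two per-record header sizes are assumed values chosen for illustration (the real header size depends on the ZFS version), and only the 64GB L2ARC size comes from the post. On a live system, the ARC statistics (for example the `l2_hdr_size` arcstat) are a better guide than this estimate.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2
KIB = 1024


def l2arc_header_ram_mib(l2arc_bytes: int, record_bytes: int, header_bytes: int) -> float:
    """Estimate ARC memory (MiB) used to index a completely full L2ARC."""
    records = l2arc_bytes // record_bytes
    return records * header_bytes / MIB


# 64GB L2ARC from the post; record and header sizes are assumed values.
for header_bytes in (70, 180):
    mib = l2arc_header_ram_mib(64 * GIB, 16 * KIB, header_bytes)
    print(f"{header_bytes} B/header -> {mib:.0f} MiB of ARC")
```

With these assumed inputs the index would take a few hundred MiB out of the 24GB assigned to FreeNAS; smaller records would raise that figure proportionally.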

Eds89

Contributor

Disabled sync on the zvol hosting the iSCSI datastore, removed the SLOG from the pool, and removed the L2ARC;

It still does the same thing of starting the transfer high, then ultimately dropping down to sub 100MB/s speeds.

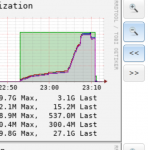

EDIT: Is it memory usage?

The attached is memory usage after running a couple of test copies of this 7GB file

Stux

MVP

Run a local benchmark writing to the pool that hosts the zvol, i.e. dd etc. from the FreeNAS terminal.

My suspicion is the pool can only sustain 100MB/s for some reason. The initial fast performance is the initial writes hitting ram buffers etc, but eventually the data has to hit the disk.
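The "fast at first, then slow" pattern described here can be illustrated with a simple buffer-fill model. This is a sketch under stated assumptions, not a description of how ZFS actually sizes its write buffers: the 2400MB buffer is a made-up number, while 300MB/s and 100MB/s are the speeds reported earlier in the thread.

```python
def seconds_until_buffer_full(buffer_mb: float, ingest_mb_s: float, drain_mb_s: float) -> float:
    """Time for which writes can outrun the disks before the buffer fills."""
    if ingest_mb_s <= drain_mb_s:
        return float("inf")  # the disks keep up, so the buffer never fills
    return buffer_mb / (ingest_mb_s - drain_mb_s)


# 300 and 100 MB/s are the reported speeds; 2400 MB is a hypothetical buffer size.
fast_seconds = seconds_until_buffer_full(2400, ingest_mb_s=300, drain_mb_s=100)
print(f"fast phase lasts about {fast_seconds:.0f} s, "
      f"covering about {300 * fast_seconds:.0f} MB of the copy")
```

Once the buffer is full, the copy can only proceed at the drain rate, which is why a sustained local write test is more telling than the opening seconds of a transfer.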

Eds89

Contributor

Forgive me, but I'm not a *nix guy, so don't have a working knowledge of dd and other commands.

Would my command be something akin to:

Code:

dd if=/dev/zero of=/dev/zvol/VMs/ESXi-iSCSI-DS1 bs=7G count=1 oflag=direct

EDIT: I'm obviously doing something wrong, as if I run dd if=/dev/zero of=/mnt/VMs/testfile bs=1G count=1 as others have run here: https://forums.freenas.org/index.php?threads/performance-testing-with-dd.351/

It completes in 1 second, with a transfer rate of about 1GB/s.
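A possible reason a 1GB write from /dev/zero looks implausibly fast is that the test is small enough to land in RAM and, if compression is enabled on the dataset (an assumption about this system), zeros shrink to almost nothing before reaching the disks. The sketch below only illustrates the compression half of that, using Python's `zlib` as a stand-in for whatever compressor the pool uses; it does not touch the pool at all.

```python
import os
import zlib

CHUNK = 1024 * 1024  # 1 MiB sample


def compressed_fraction(data: bytes) -> float:
    """Compressed size as a fraction of the original size (zlib, default level)."""
    return len(zlib.compress(data)) / len(data)


zeros = bytes(CHUNK)             # what /dev/zero supplies
random_data = os.urandom(CHUNK)  # incompressible, closer to real file contents

print(f"zeros:  {compressed_fraction(zeros):.4f}")
print(f"random: {compressed_fraction(random_data):.4f}")
```

If that is what is happening, a test file built from incompressible data and considerably larger than the RAM assigned to FreeNAS should give a more representative number.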

Hey @Stux,

Awesome build report! I’m new to FreeNAS and am looking into a build of my own, and really like the idea of an AIO server that will closely follow your build – in particular I’m looking at the Supermicro 5028D-TN4T with 64GB RAM (https://www.supermicro.com/products/system/midtower/5028/sys-5028d-tn4t.cfm). Initially I think I'll probably only be running about half a dozen VMs at any one time.

A couple of questions that I had:

You passed through the AHCI controller to FreeNAS – I have room for 4 HDDs and 2 SSDs. Assuming I used an M.2 NVMe boot disk, and used this for swap/L2ARC as in your initial build, could the 2 SSDs be used as a separate pool in FreeNAS for NFS or iSCSI VM datastores? If so, do you think that would reduce or remove the need for a dedicated slog device?

Alternatively, with a large enough M.2 boot drive, could I just store the VMs on the M.2 and remove the need for sharing VM datastores through FreeNAS? Are there any downsides to this?

Lastly, with 4 HDDs, I’m looking at 8TB drives but I’m torn between a mirrored pool or RaidZ2. Scouring the forums there are very differing opinions - I’m leaning toward RaidZ2 for the added redundancy, but am concerned about resilvering times for the 8TB drives. Do you have any thoughts one way or the other?

Definitely still have a lot of learning to do, but just thought I’d get your opinion on this particular setup before I jump into it headfirst!

Cheers again for this great guide!

Stux

MVP

Thanks :)

> I’m new to FreeNAS and am looking into a build of my own, and really like the idea of an AIO server that will closely follow your build – in particular I’m looking at the Supermicro 5028D-TN4T with 64GB RAM (https://www.supermicro.com/products/system/midtower/5028/sys-5028d-tn4t.cfm). Initially I think I'll probably only be running about half a dozen VMs at any one time.

Nice system, same motherboard as mine. Wired zone have good prices on them, and tinkertry has lots of info specific to that system.

> A couple of questions that I had:
>
> You passed through the AHCI controller to FreeNAS – I have room for 4 HDDs and 2 SSDs. Assuming I used a M2 nvme boot disk, and used this for swap/L2ARC as in your initial build, could the 2 SSDs be used as a separate pool in FreeNAS for NFS or iSCSI VM datastores?

Quite possibly.

> If so, do you think that would reduce or remove the need for a dedicated slog device?

Yes. Maybe. But I don’t know.

> Alternatively, with a large enough M2 boot drive, could I just store the VMs on the M2 and remove the need for sharing VM datastores through FreeNAS? Are there any downsides to this?

That would work, but your VMs would not be ZFS protected: no redundancy, no bitrot protection, and susceptible to a single point of failure. But VMs are not irreplaceable data. So store the data on ZFS.

> Lastly, with 4 HDDs, I’m looking at 8TB drives but I’m torn between a mirrored pool or RaidZ2. Scouring the forums there are very differing opinions - I’m leaning toward RaidZ2 for the added redundancy, but am concerned about resilvering times for the 8TB drives. Do you have any thoughts one way or the other?

I’m not worried about the resilver times. The decision is really down to IOPS or space.

> Definitely still have a lot of learning to do, but just thought I’d get your opinion on this particular setup before I jump into it headfirst!
>
> Cheers again for this great guide!

Chris Moore

Hall of Famer

> Lastly, with 4 HDDs, I’m looking at 8TB drives but I’m torn between a mirrored pool or RaidZ2. Scouring the forums there are very differing opinions - I’m leaning toward RaidZ2 for the added redundancy, but am concerned about resilvering times for the 8TB drives.

The RAIDz2 pool will give you roughly the IOPS of a single drive where the pool with two mirror vdevs will give you roughly the IOPS of two drives. IOPS is a function of the number of vdevs in the pool. More vdevs generally equates to more IOPS and in that case, more mirror pairs makes the pool 'faster' although it is not really raw speed that I am referring to. I have a pool of 16 drives to give me 8 mirror pairs so I can have better random I/O. I would suggest that you should go with more drives.
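The rule of thumb in this post (random IOPS scale with the number of vdevs) can be put side by side with usable space for the four-drive case being asked about. This is a minimal sketch: the `PoolLayout` type and `summarize` helper are hypothetical names, the 100 IOPS per drive is an assumed figure, and capacity ignores ZFS overhead.

```python
from dataclasses import dataclass


@dataclass
class PoolLayout:
    name: str
    vdevs: int                 # number of top-level vdevs in the pool
    data_drives_per_vdev: int  # drives' worth of usable space per vdev


def summarize(layout: PoolLayout, drive_tb: float, drive_iops: float) -> dict:
    """Rule-of-thumb usable space and random IOPS for a pool layout."""
    return {
        "usable_tb": layout.vdevs * layout.data_drives_per_vdev * drive_tb,
        "approx_iops": layout.vdevs * drive_iops,  # roughly one drive's IOPS per vdev
    }


# Four 8TB drives, as in the question; 100 IOPS per drive is an assumed figure.
raidz2 = PoolLayout("1 x 4-drive RAIDZ2", vdevs=1, data_drives_per_vdev=2)
mirrors = PoolLayout("2 x 2-drive mirror", vdevs=2, data_drives_per_vdev=1)
for layout in (raidz2, mirrors):
    print(layout.name, summarize(layout, drive_tb=8, drive_iops=100))
```

With four drives both layouts come out at the same nominal space, so the trade-off is between the extra IOPS of two mirror vdevs and RAIDZ2's ability to survive any two drive failures.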

Absolutely amazing, what a guide, thanks Stux. I've been tinkering with this stuff for years and learned a lot from reading this.

I have one question though, why did you share both via nfs and iscsi from freenas to ESXi? I know nfs was named storage and iscsi vm but is there a reason behind this choice of nfs vs iscsi? Or was it just to show examples of what is possible?

Stux

MVP

> Absolutely amazing, what a guide, thanks Stux. I've been tinkering with this stuff for years and learned a lot from reading this.
>
> I have one question though, why did you share both via nfs and iscsi from freenas to ESXi? I know nfs was named storage and iscsi vm but is there a reason behind this choice of nfs vs iscsi? Or was it just to show examples of what is possible?

Mostly the latter.

BUT, the NFS storage is good for storing files that ESXi can access and that can be loaded from other systems, so for example I can put ISOs and other files there directly from the FreeNAS CLI. Can’t do that with iSCSI.

> That would work, but your VMs would not be ZFS protected. No redundancy, no bitrot protection and susceptible to single point of failure. But VMs are not irreplaceable data. So store the data on ZFS.

Thanks for the feedback. I've revisited my configuration and it seems that I'd likely be better off with the slog. You mentioned in your build that a SATA slog is not necessarily performant - why is this? I'm considering the S3710 - Obviously it wouldn't compete with the speeds of your P3700 drive (which I'm finding pretty hard to find in Australia), but is there another reason as well? I guess the question is, what sort of read/write speeds are reasonable for a slog device, and is a SATA drive like the S3710 a reasonable compromise between price/performance?

Chris Moore

Hall of Famer

The SATA interface limits the potential performance of the SLOG.
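As a back-of-the-envelope illustration of the interface ceiling, the sketch below computes the payload bandwidth of a SATA link from its line rate and 8b/10b encoding. It assumes the drive in question sits on a 6Gbit/s SATA III link, and it says nothing about latency or protocol overhead, both of which matter for a SLOG.

```python
def sata_payload_mb_s(line_rate_gbit_s: float) -> float:
    """Payload ceiling in MB/s for a SATA link using 8b/10b encoding."""
    payload_mbit_s = line_rate_gbit_s * 1000 * 8 / 10  # 8 data bits per 10 line bits
    return payload_mbit_s / 8  # bits to bytes


print(sata_payload_mb_s(6.0))  # SATA III line rate
```

That works out to 600MB/s before protocol overhead, which is in line with the "around 450MB/s, after overhead" figure given earlier in the thread.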

Eds89

Contributor

Stux, have you tested SMB connectivity between VMs and FreeNAS in an AIO?

I'm finding an application I have on a Windows VM, that reads and writes data to FreeNAS over a vSwitch, keeps complaining about not being able to "read from disk".

I initially thought it was a vmxnet3 driver issue, but see the same thing with E1000 adapters, and also on multiple VMs.

I'm not really sure how I would go about looking for SMB issues on the FreeNAS end, as I've never dug into logs before.
