Quite likely. You should search for multipath SCSI support in that Proxmox thing.

That is my next thing to do. And it is definitely the same drive. I formatted sde, then ran fdisk -l again, and sdf was formatted exactly the same.

It's the same LUN.

Turned out Proxmox is just as easy. I had my HBA configured wrong. As soon as I went through all the steps again, Proxmox now sees my test FC store.

Strange thing though, Proxmox sees two 500GB drives. I only did 1 file extent but have 2 fibres going between the two. Is it actually seeing the same thing?
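One way to check whether two disks are really the same LUN seen over two paths is to compare their SCSI WWIDs. Below is a minimal Python sketch, assuming a Linux host (Proxmox is Debian-based) that exposes the ID at /sys/block/<dev>/device/wwid. The function names are my own, and the sysfs path is an assumption about your system, not something taken from this thread.

```python
from collections import defaultdict
from pathlib import Path


def group_by_wwid(wwids):
    """Group device names by WWID; devices sharing a WWID are the same LUN."""
    groups = defaultdict(list)
    for dev, wwid in wwids.items():
        groups[wwid].append(dev)
    return {wwid: sorted(devs) for wwid, devs in groups.items()}


def read_wwids(sys_block="/sys/block"):
    """Collect WWIDs for sd* devices from sysfs (path is an assumption)."""
    found = {}
    for dev in Path(sys_block).glob("sd*"):
        wwid_file = dev / "device" / "wwid"
        if wwid_file.is_file():
            found[dev.name] = wwid_file.read_text().strip()
    return found


# Hypothetical sample data: sde and sdf report the same ID.
sample = {"sda": "naa.aaaa", "sde": "naa.bbbb", "sdf": "naa.bbbb"}
grouped = group_by_wwid(sample)
```

On a real host you would call group_by_wwid(read_wwids()); any group with more than one device is a single LUN reachable over several paths, which is the case multipath is meant to handle.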

I suppose you should ask Intel about that. I don't have an Intel 10G NIC, but at least on Chelsio, FCoE is a separate device that requires a separate driver.

Unfortunately my FC-fabric experience is close to zero, so I am not sure what is actually needed here. All FreeNAS LUNs already have, for purposes of XCOPY, their own 16-byte LUN IDs in NAA format, which, as I understand, are in some cases called WWNs. FC ports also have their own 8-byte IDs flashed by the vendor, known as WWPNs. CTL now also supports 8-byte node IDs, known as WWNNs (though the WebUI does not configure that yet). So, could you please specify what exactly is missing here?

On the SAN switch, it presents as scsi-fcp: target.
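To keep the identifier sizes mentioned above straight, here is a small Python sketch that prints an 8-byte ID (the size of a WWPN or WWNN) or a 16-byte ID (the size of the NAA LUN IDs) in the usual colon-separated hex form. The function name and the sample value are made up for illustration; nothing here comes from FreeNAS or CTL.

```python
def format_wwn(raw: bytes) -> str:
    """Format an 8-byte or 16-byte identifier as colon-separated hex."""
    if len(raw) not in (8, 16):
        raise ValueError("expected 8 bytes (WWPN/WWNN) or 16 bytes (NAA LUN ID)")
    return ":".join(f"{b:02x}" for b in raw)


# Hypothetical 8-byte port name, not a real device.
example_wwpn = format_wwn(bytes.fromhex("2100001b32000001"))
```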

Is it possible to present LUNs with their own WWNs?

Therefore, instead of supporting one ESXi server, we can share the storage with multiple ESXi servers.

It works great for one ESXi server. After connecting to the storage's HBA, the server sees all the LUNs.

Because LUNs are not visible on the switch, sharing the storage with multiple servers is not feasible.

"...reported separately...." is exactly what I mean. Is it possible for each LUN to have its own WWPN? Maybe I am asking too much. :)

Hi Shang,

I think what you're looking for is more commonly called "LUN Masking" in FC terminology.

From my reading there doesn't appear to be any support in the FreeBSD CTL target for this. Sorry.

With that said, I haven't tried it recently under FreeNAS. I may give it a shot as I have a spare system and a supported QLE2462 HBA to play with.
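For anyone unfamiliar with the term, the idea behind LUN masking can be sketched in a few lines of Python: the target keeps a table of which initiator WWPN may see which LUNs and filters its LUN list per initiator. This is only a conceptual illustration with hypothetical WWPNs and names; it is not how CTL or any particular array implements it.

```python
def visible_luns(masking, initiator_wwpn, all_luns):
    """Return the LUNs an initiator may see under a masking table."""
    allowed = masking.get(initiator_wwpn.lower(), set())
    return sorted(lun for lun in all_luns if lun in allowed)


# Hypothetical masking table: two ESXi hosts, three LUNs on the target.
masking = {
    "21:00:00:1b:32:00:00:01": {0, 1},  # host A sees LUN 0 and 1
    "21:00:00:1b:32:00:00:02": {2},     # host B sees only LUN 2
}
all_luns = [0, 1, 2]

host_a = visible_luns(masking, "21:00:00:1B:32:00:00:01", all_luns)
host_b = visible_luns(masking, "21:00:00:1b:32:00:00:02", all_luns)
unknown = visible_luns(masking, "21:00:00:1b:32:00:00:99", all_luns)
```

An initiator that is not in the table sees nothing, which is the usual default-deny behavior people expect from masking.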

Hi HoneyBadger,

Thanks for your input.

LUN mapping and masking is exactly what I want to accomplish.

If it is not supported, then it is not supported.

Thanks

Shang

Tranced, hi. FC is great, but what about FCoE on Intel 10G NICs?

Special thanks to mav@ and cyberjock who may or may not know it but have helped me immensely to complete this by having their posts/replies on the forums.

My ESXi/FreeNAS sandbox is complete. Thank you guys!!!!!

Here is my exact config for anyone who is looking to set this up...

----------------------------------------------------------------------------------------------------------------------------------------

FreeNAS Target side

1. Install the right version

2. Install FC HBAs - configure manual speed in HBA BIOS

- FreeNAS 9.3 BETA (as of this writing) --- install!

3. Check FC link - status after bootup

- I'm using a Qlogic QLE2462 HBA card

- 4Gbps speed Manual set in HBA BIOS (recommended by Qlogic)

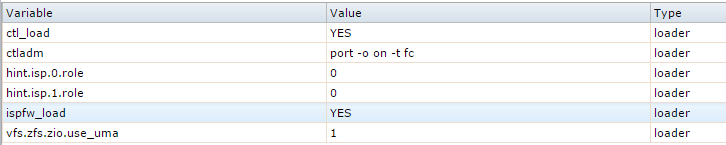

4. Add Tunables in "System" section

- I have 2 fiber FC cables for the 2 ports on each of my HBA cards

- Check that Firmware / Driver loaded for card, shown by solid port status light after bootup

- I have the Qlogic-2462 [solid link orange=4Gbps] check your HBA manual for color code diagnostics

- variable:ispfw_load ____value:YES_________type:loader___(start HBA firmware)?

- variable:ctl_load ______value:YES _________type:loader___(start ctl service)?

- variable:hint.isp.0.role__value:0 (zero)______type:loader___(target mode FC port 1)?

- variable:hint.isp.1.role __value:0 (zero)______type:loader___(target mode FC port 2)?

- variable:ctladm _______value:port -o on -t fc _type:loader___(bind the ports)?

Add Script in "Tasks" section

- Type:command________command:ctladm port -o on -t fc____When:Post Init
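For reference, the loader-type tunables above should correspond to name="value" lines in /boot/loader.conf. Here is a minimal Python sketch that renders them in that form, assuming the WebUI writes them more or less this way (I have not checked what file FreeNAS actually generates). The ctladm entry is left out on purpose: as far as I can tell ctladm is a command rather than a loader variable, and the Post Init task already runs it.

```python
# Loader tunables copied from the list above (values as given in this post).
TUNABLES = {
    "ispfw_load": "YES",     # load HBA firmware module
    "ctl_load": "YES",       # load CTL
    "hint.isp.0.role": "0",  # FC port 1
    "hint.isp.1.role": "0",  # FC port 2
}


def render_loader_conf(tunables):
    """Render tunables as loader.conf-style name="value" lines."""
    return "\n".join(f'{name}="{value}"' for name, value in tunables.items())


loader_text = render_loader_conf(TUNABLES)
print(loader_text)
```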

5. Enable iSCSI and then configure LUNs

Enable the iSCSI service and create the following...

Create portal (do not select a specific IP; select either 0.0.0.0 or the dashes, whichever allows you to advance)

Create initiator (ALL, ALL)

Create target (select your only portal and your only initiator) and give it a name... (doesn't quite matter what)

Create extent (device will be a physical disk, file will be a file on a zfs vol of your choice) Research these!

Create associated target (choose any LUN # from the list, link the target and extent)

If creating File extent...

Choose "File" and select a pool, dataset or zvol from the drop-down tree.

Then tag a slash onto the end of the path and type in the name of the file extent to be created.

e.g. "Vol1/data/extents/ESXi"

If creating Device extent...

Choose "Device" and select your zvol (must be zvol - not a datastore)

! ! ! BE SURE TO SELECT "Disable Physical Block Size Reporting" ! ! !

[ Took me days to figure out why I could not move my VM's folders over to the new ESXi FC datastore... ]

[ They always failed halfway through and it was due to the block size of the disk. Checking this fixed it. _ ]

REBOOT! REBOOT! REBOOT! REBOOT! REBOOT! REBOOT! REBOOT! REBOOT! 1 time.

now...sit back and stretch your sack - your Direct Attached Storage is setup as a target

---------------------------------------------------------------------------------------------------------------------------------------------

ESXi Hypervisor Initiator side

1. Add to ESXi in vSphere

Go to Configuration > Storage Adapters, select your fiber card, and click Rescan All to check its availability.

If you don't see your card, make sure you have installed the drivers for it on ESXi. (Total PIA if manual)

2. Datastores

I was able to use my LUN when created using a device extent.

But I had trouble creating a datastore with a file extent... after some futzing I somehow fixed that.

But before I used the file ext as a datastore I ended up using file ext. this way...

VM Guest attach

Presented the drive by adding a new virtual "Hard Drive" in the settings of one of my guest VMs...

as a "RAW DISK"

Gets presented to the VM Guest just like another hard drive but the speeds are blazing of course.

It will pool capacity and double my speed

You can add multiple physical disks to a vSphere datastore but while it doubles in capacity as expected... I found that it does not actually use them both simultaneously for advantageous throughput.

MULTI-PORT HBAs

If you have hundreds or thousands of file requests per minute...

You can configure load-balancing in ESXi... (this is for very intensive access count and fail-over purposes)

- Create datastore (like below) and right-click, select "Properties..." and click "Manage Paths..."

- Change the "Path Selection" menu for Round-Robin to load balance with fail-over on both ports.

- Click "Change" button and "OK" out of everything.
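To illustrate what round-robin with fail-over means in general, here is a small Python sketch: requests alternate across the available paths, and a dead path is simply skipped. This is a toy model of the idea only, not ESXi's actual path selection logic, and the path names are hypothetical.

```python
from itertools import cycle


def round_robin_paths(paths, is_alive, n_requests):
    """Pick a path per request, rotating through paths and skipping dead ones."""
    chosen = []
    ring = cycle(paths)
    for _ in range(n_requests):
        # Try each path at most once per request before giving up.
        for _ in range(len(paths)):
            path = next(ring)
            if is_alive(path):
                chosen.append(path)
                break
        else:
            raise RuntimeError("no live path to the LUN")
    return chosen


paths = ["port1", "port2"]  # hypothetical names for the two HBA ports

both_up = round_robin_paths(paths, lambda p: True, 4)
port1_down = round_robin_paths(paths, lambda p: p != "port1", 3)
```

With both ports up the requests alternate between them; with one port down everything goes over the surviving port, which is the fail-over part.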

OR

If you have multi-port HBAs and want a performance advantage...

Set up MPIO for performance and redundancy.

You could make an iSCSI target for each disk "Device" and present them to Server 2012 or whatever you use on the ESXi side.

Read about the rest!!! Good luck!

Please reply if this helped you! I've been trying to get this working for almost 6 months! Thanks again to everyone on the forum.