Robert Marley


New server details:

Supermicro 6047r-e1r36n 36-bay

Motherboard X9DRi-LN4F+

2x 2.0GHz 6-core

64GB DDR3 ECC RAM

Myricom 10GbE + 4x 1GbE onboard (Intel i350)

Option of LSI 9300-8i or 9207-8i

Edit: AFP is the protocol used for sharing.

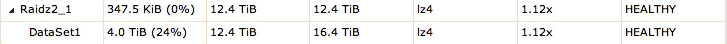

So far my test RAIDZ2 of 12x 2TB 7200rpm SATA drives has had lackluster write speeds, around 12-50MB/s tops.

Even though the goal of the project is maximum MTTDL with RAIDZ3, I'm positive I can reach higher write speeds with all that RAM; I'm just not sure how.

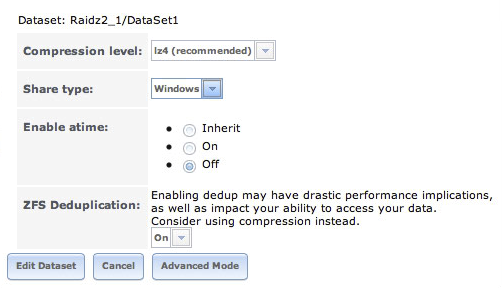

My current test RAIDZ2 has no dedicated ZIL/log SSD, and dedupe is turned on, but writes were fairly slow when I had dedupe off as well. I noticed that wired RAM usage went to 50GB and stayed there early on in the big data move, which consists of several million photos of 11-50MB each.
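For what it's worth, here is a rough back-of-envelope sketch of how much RAM a dedupe table might want on a pool this size. The inputs are assumptions, not measurements: roughly 320 bytes of RAM per dedupe table entry (a commonly quoted rule of thumb), the default 128 KiB recordsize, every block unique, and about 20TB of usable space (10 data drives x 2TB in the 12-drive RAIDZ2). The function name is made up for illustration.

```python
def ddt_ram_bytes(pool_bytes, record_bytes=128 * 1024, entry_bytes=320):
    """Estimate in-RAM dedupe table size for a pool full of unique blocks.

    entry_bytes=320 is an assumed rule-of-thumb figure, not a measured value.
    """
    blocks = -(-pool_bytes // record_bytes)  # ceiling division: one entry per block
    return blocks * entry_bytes


# Assumed usable space: 10 data drives x 2TB (decimal) in a 12-drive RAIDZ2.
usable = 10 * 2 * 10**12
print(f"Estimated dedupe table size: {ddt_ram_bytes(usable) / 10**9:.1f} GB")
```

Under those assumptions a completely full test pool would come out to roughly 49GB of dedupe table, so the estimate is very sensitive to how full the pool is and to the real block size of the photos.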

The source of the data is an Xserve, also on 10Gb fiber, with a DAS hardware RAID6 that I have already seen do 650MB/s reads.

I am open to purchasing SSDs if they can be proven a sure win, but with these speeds, I'll have to look into other options like Windows Server 2012 R2 Storage Spaces or hardware RAID :(

All told, this server will need to hold over 100TB, some of which could really benefit from compression and dedupe.
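To put the speeds in perspective, here is a quick sketch of how long it would take to write 100TB at the rates I've seen so far versus the 650MB/s the source can read at. It assumes decimal units (1TB = 10^12 bytes, 1MB = 10^6 bytes) and a perfectly sustained rate, which real transfers won't hit; the function name is just for illustration.

```python
def transfer_days(total_tb, rate_mb_s):
    """Days needed to move total_tb terabytes at a sustained rate_mb_s MB/s."""
    total_bytes = total_tb * 10**12
    seconds = total_bytes / (rate_mb_s * 10**6)
    return seconds / 86400  # seconds per day


# 12 and 50 MB/s are the observed write speeds; 650 MB/s is the source's read speed.
for rate in (12, 50, 650):
    print(f"{rate:>4} MB/s: {transfer_days(100, rate):6.1f} days")
```

At the observed write speeds this works out to weeks or months for the full 100TB, versus a couple of days if the pool could keep up with the source.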

Thanks in advance for any advice or links.
