Ixian (Patron, joined May 11, 2015, 218 messages)

I've built a new FreeNAS system (specs for both systems below). Here's what I'd like to do:

- Copy data from system_old to system_new.
- Switch to using system_new (jails, etc.) as the primary system.
- Once system_new is settled, destroy the pools on system_old, recreate them using more disks (8 instead of 6), and then use system_old as an ongoing replication target/backup system.
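A minimal sketch of that copy-then-switch sequence, assuming one recursive snapshot per pool and hypothetical snapshot names `migrate1` and `migrate2`: a full replication stream (`zfs send -R`) does the bulk copy while system_old stays in service, and a second, incremental stream (`-I`) picks up whatever changed just before the switch. The helper function names are illustrative only; the script merely builds the sender-side command strings, and each stream would still need to be piped into `zfs receive` on system_new.

```python
# Illustrative helpers (hypothetical names). The zfs subcommands and flags
# used here (snapshot -r, send -R, send -I) are standard ZFS options.

def snapshot_cmd(pool: str, snap: str) -> str:
    """Recursive snapshot of a pool and all of its child datasets."""
    return f"zfs snapshot -r {pool}@{snap}"


def full_send_cmd(pool: str, snap: str) -> str:
    """Replication stream: all descendants, their snapshots and properties."""
    return f"zfs send -R {pool}@{snap}"


def incremental_send_cmd(pool: str, prev: str, cur: str) -> str:
    """Incremental replication stream covering every snapshot from prev to cur."""
    return f"zfs send -R -I {pool}@{prev} {pool}@{cur}"


def cutover_plan(pool: str) -> list[str]:
    # Steps 1-2: bulk copy while system_old is still in use.
    # Steps 3-4: after stopping jails/shares, send only what changed since step 1.
    return [
        snapshot_cmd(pool, "migrate1"),
        full_send_cmd(pool, "migrate1"),
        snapshot_cmd(pool, "migrate2"),
        incremental_send_cmd(pool, "migrate1", "migrate2"),
    ]


if __name__ == "__main__":
    for step in cutover_plan("Slimz"):
        print(step)
```

The same incremental pattern (new snapshot, then `zfs send -R -I` from the last snapshot both sides share) is what ongoing replication to the rebuilt system_old would repeat, just in the opposite direction.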

system_old:

- Xeon D-1541, 64GB ECC
- Asrock Rack D1541 D4U-2TR MB
- 6x 5TB WD Red drives (pool name: Slimz), RAIDZ2
- 2x 5TB WD Red drives (pool name: Backups), mirror
- 1x 512GB Toshiba SATA SSD (pool name: Jails)
- 1x 240GB Intel P905 (log & cache for the pools)
- Intel X520 10GBase-T NIC (storage interface, 10.0.0.2)
- Intel i350 1GbE NIC (management interface, 192.168.0.90)

system_new:

- Xeon E5-2680v3, 64GB ECC
- SM X10SRM-F MB
- 8x 10TB WD Red drives (pool name: Slimz), RAIDZ2
- 2x 1TB Samsung 860 Evo SSD (pool name: Jails), mirror
- 1x 400GB Intel DC3700 (log & cache for the pools)
- Intel X540 10GBase-T NIC (storage interface, 10.0.0.3)
- Intel i350 1GbE NIC (management interface, 192.168.0.91)
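A quick back-of-the-envelope capacity check for these layouts, assuming the usual RAIDZ2 rule of thumb that two disks' worth of space per vdev goes to parity. The figures ignore ZFS metadata, padding, reserved slop space and the TB/TiB difference, so real usable space will be somewhat lower; the Backups datasets aren't sized in the post and are left out. The function name is illustrative.

```python
# Rule of thumb only: a RAIDZ2 vdev spends two disks on parity.
# Ignores metadata, padding, slop space and TB vs TiB.

def raidz2_data_tb(disks: int, disk_tb: float) -> float:
    """Approximate data capacity of a single RAIDZ2 vdev, in the drives' TB."""
    if disks <= 2:
        raise ValueError("RAIDZ2 needs more disks than its two parity disks")
    return (disks - 2) * disk_tb


SLIMZ_DATA_TB = 19  # size of pool Slimz as stated in the post

layouts = {
    "system_old Slimz, 6x 5TB": raidz2_data_tb(6, 5),
    "system_new Slimz, 8x 10TB": raidz2_data_tb(8, 10),
    "system_old rebuilt, 8x 5TB": raidz2_data_tb(8, 5),
}

if __name__ == "__main__":
    for name, tb in layouts.items():
        pct = 100 * SLIMZ_DATA_TB / tb
        print(f"{name}: ~{tb:g} TB of data disks, 19TB would fill ~{pct:.0f}%")
```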

This is my first FreeNAS server migration, so I need a little help. Specifically, I'm not familiar enough with ZFS send/receive on the command line to set this up successfully.

Ideally I'd sync the entire pool "Slimz" and its datasets (19TB total) on system_old to the same pool on system_new, and do the same for the pool Jails and its datasets (340GB). For the pool Backups, I'd just like the datasets it contains to go under the Slimz pool on system_new as well.
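One way to pin down this layout before running anything is to write the source-to-destination mapping out explicitly. The sketch below assumes hypothetical dataset names such as `Backups/laptop` (the real names are in the screenshot) and assumes each child of Backups is sent separately so it can be received directly under Slimz. The function names are illustrative, not part of any FreeNAS tool; the collision check flags a Backups child whose name already exists under Slimz.

```python
def destination(src: str) -> str:
    """Where a dataset from system_old should end up on system_new."""
    pool, _, rest = src.partition("/")
    if pool in ("Slimz", "Jails"):
        return src  # same pool names on both systems
    if pool == "Backups":
        if not rest:
            raise ValueError("only the children of Backups move, not its root")
        return f"Slimz/{rest}"  # Backups/<name> -> Slimz/<name>
    raise ValueError(f"unexpected pool: {pool}")


def collisions(sources: list[str], existing: set[str]) -> list[str]:
    """Backups children whose destination name is already taken on system_new."""
    return [s for s in sources
            if s.startswith("Backups/") and destination(s) in existing]


if __name__ == "__main__":
    for ds in ["Slimz/media", "Jails/iocage", "Backups/laptop"]:  # hypothetical
        print(f"{ds} -> {destination(ds)}")
```

Each Backups child would then be sent on its own (for example `zfs send -R Backups/laptop@snap` received with `zfs receive Slimz/laptop`) rather than sending the Backups pool root.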

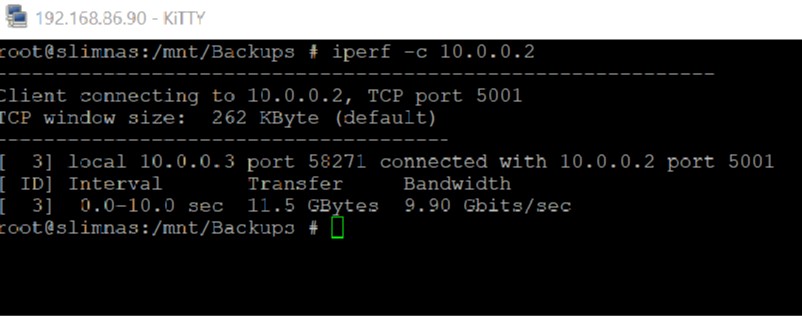

I've done some basic tuning for the 10GbE NICs on both systems (hw.ix.enable_aim = 0 as a tunable, MTU 9000 on both, etc.). I have both servers peered together; here's my iperf report:

That seems pretty decent for Intel NICs. I'm aware I won't get data transfer speeds anywhere near that, since the Reds are 5400 RPM drives, but obviously I'd like to get as close as possible.
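For a rough sense of scale, the arithmetic below compares the 10 Gb/s line rate with two slower, disk-bound rates. The 500 and 300 MB/s figures are hypothetical placeholders, not measurements from either system; substitute whatever `zfs send` actually sustains. Sizes use the post's figures, treated as decimal TB/GB.

```python
def transfer_hours(size_bytes: float, rate_bytes_per_s: float) -> float:
    """Hours needed to move size_bytes at a sustained rate."""
    return size_bytes / rate_bytes_per_s / 3600


TOTAL_BYTES = 19e12 + 340e9  # Slimz (19TB) + Jails (340GB), decimal units

rates = {
    "10 Gb/s line rate": 10e9 / 8,  # bits -> bytes, ignoring protocol overhead
    "hypothetical 500 MB/s": 500e6,
    "hypothetical 300 MB/s": 300e6,
}

if __name__ == "__main__":
    for label, rate in rates.items():
        print(f"{label}: ~{transfer_hours(TOTAL_BYTES, rate):.1f} h")
```

At line rate the roughly 19.3TB works out to a bit over 4 hours; at a hypothetical 300 MB/s it is closer to 18 hours, which is why the disks rather than the NICs usually set the pace.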

Can anyone help with the following?

- What is the correct command-line process for creating manual snapshots and using ZFS send/recv? I've read up on piping through netcat to maximize the transfer rate, but clearly I'm doing something wrong.
- Should I disable the log/cache devices on the pools of one or both servers?
- Once the data is synced over, can I promote the snapshot datasets so they can be used, or should I clone them instead?
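For reference, the common snapshot-then-netcat pattern for a whole pool looks roughly like the commands built below. This is a sketch under assumptions: port 8023 is arbitrary, `migrate1` is a hypothetical snapshot name, the receiving pool already exists and is empty, and the listener on system_new is started before the sender. `-R` sends all child datasets, snapshots and properties; on the receiving side `-d` drops the source pool name from the incoming dataset paths, and `-F` lets the stream overwrite the existing pool root (it is destructive on a pool that already holds data). The helper names are illustrative.

```python
PORT = 8023                  # arbitrary unused TCP port (assumption)
NEW_STORAGE_IP = "10.0.0.3"  # system_new storage interface from the post


def snapshot_cmd(pool: str, snap: str) -> str:
    """Run on system_old: recursive snapshot of the pool and all its datasets."""
    return f"zfs snapshot -r {pool}@{snap}"


def receiver_cmd(pool: str, port: int = PORT) -> str:
    """Run on system_new first: listen and feed the stream into zfs receive."""
    return f"nc -l {port} | zfs receive -F -d {pool}"


def sender_cmd(pool: str, snap: str, host: str = NEW_STORAGE_IP,
               port: int = PORT) -> str:
    """Run on system_old once the listener is up."""
    return f"zfs send -R {pool}@{snap} | nc {host} {port}"


if __name__ == "__main__":
    for pool in ("Slimz", "Jails"):
        print("old:", snapshot_cmd(pool, "migrate1"))
        print("new:", receiver_cmd(pool))
        print("old:", sender_cmd(pool, "migrate1"))
```

netcat is unencrypted, so this only makes sense on a trusted, directly connected storage link. netcat variants also differ in whether the connection closes by itself at end of stream, so it is worth confirming with `zfs list -t snapshot` on system_new that the snapshot arrived before stopping either side.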

Really appreciate any help/advice. Thanks!
