bjbishop92


Hi everyone,

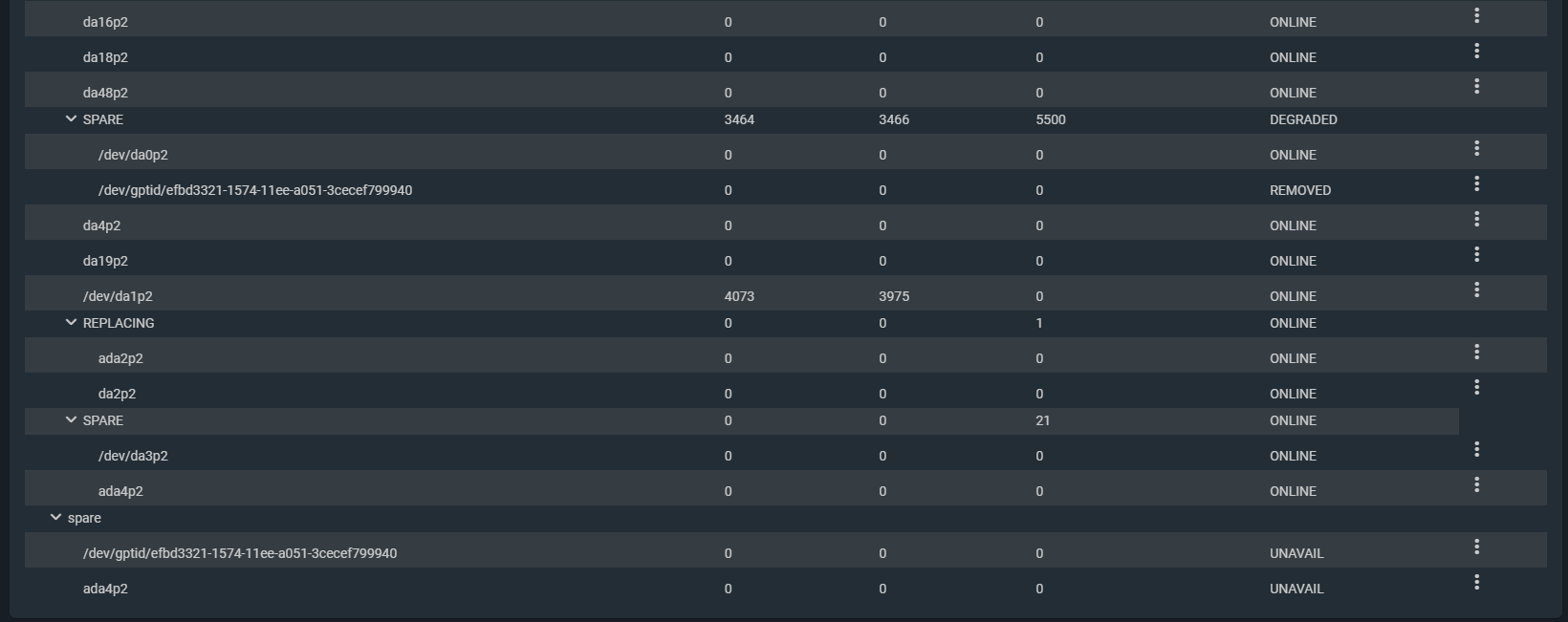

I have been having issues with my storage pool: it started reporting failures, one at a time.

I added 3 spares, which were all used up immediately to recover the pool, and it has been resilvering since.

Judging by the SMART data, all the disks look okay.

This is part of one of the 3 vdevs in operation (ZFS RaidZ2).

Is my storage going to recover? Or am I better off remounting the pool as read-only and pulling off as much data as I can?
