This has probably been discussed many times, and I have spent 3 days reading different articles, but I still do not have a good sense of how to properly test my setup or how to design a ZFS pool in the most efficient way. So I have to ask for help and advice.

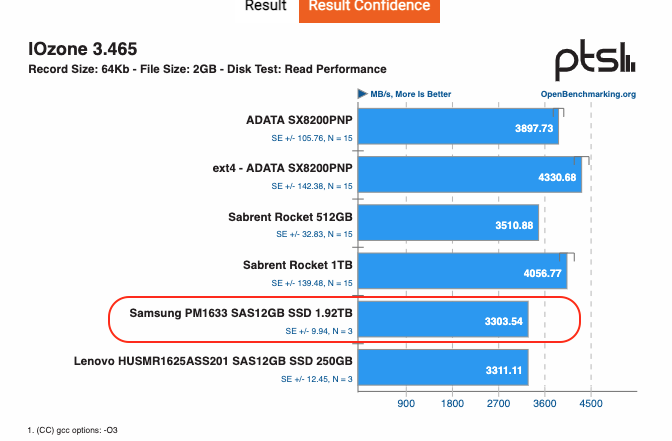

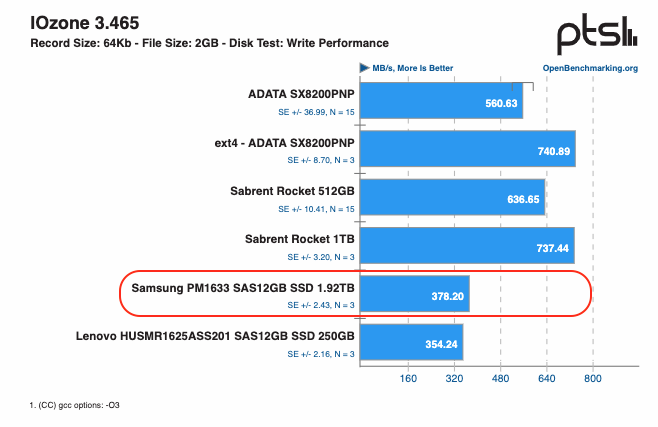

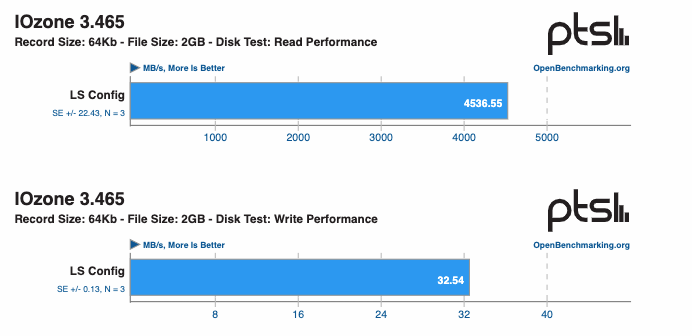

Here is my setup:

Code:

    TrueNAS-12.0-U2.1
    Dell R730xd
    CPU: 2 x Intel(R) Xeon(R) CPU E5-2650L v3 @ 1.80GHz
    Memory: 128GB ECC
    Dell HBA330 mini (IT mode)
    Disks:
      2 x 250GB 12Gb/s SAS SSD
      14 x 1.9TB 12Gb/s SAS SSD
      2 x 512GB NVMe SSD
    Network: LACP - 2 x 40Gbe Mellanox MT27500 [ConnectX-3]

For the zpool config I am trying:

- TrueNAS installation on mirror 2 x 250GB 12Gb/s SAS SSD

- RAIDZ2 with 12 x 1.9TB 12Gb/s SAS SSD

- spares 2 x 1.9TB 12Gb/s SAS SSD

- SLOG (ZFS log device) mirror 2 x 512GB NVMe SSD
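
To state the data pool layout above unambiguously, here is a rough sketch of the equivalent `zpool create` command, printed as a dry run rather than executed. The device names (`da0` to `da13`, `nvd0`, `nvd1`) are placeholders I made up for illustration, and the pool name `z2` is only inferred from the `/mnt/z2` mount path. TrueNAS normally builds pools through the web UI, so treat this as a description of the layout, not as the command that was actually run.

```shell
#!/bin/sh
# Dry run only: prints the command for the layout listed above, does not run it.
# All device names below are hypothetical placeholders.
pool="z2"                                                  # assumed from /mnt/z2
data="da0 da1 da2 da3 da4 da5 da6 da7 da8 da9 da10 da11"   # 12 x 1.9TB SAS SSD, one RAIDZ2 vdev
spares="da12 da13"                                         # 2 x 1.9TB SAS SSD hot spares
slog="nvd0 nvd1"                                           # 2 x 512GB NVMe, mirrored log device

layout_cmd() {
    echo "zpool create $pool raidz2 $data spare $spares log mirror $slog"
}

layout_cmd
```

The boot mirror on the 2 x 250GB SSDs is created by the installer and is not part of this pool.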

Before I configured everything as ZFS, I booted off a CentOS 8 Live CD and ran Phoronix Test Suite against multiple individual drives (some of which did not even end up in this system). For the specific drive model I have in the Z2 pool (1.9TB 12Gb/s SAS SSD), I got:

Read: 3,303MB/s

Write: 378MB/s

A more extensive report is here: https://openbenchmarking.org/result/2005053-NI-R730XDMUL59

After I built the TrueNAS system and the ZFS pool, I created a jail, loaded Phoronix Test Suite, and ran the IOzone test on a ZFS dataset from the Z2 pool mounted inside the iocage jail. The result is more than puzzling to me.

The report is here: https://openbenchmarking.org/result/2104096-HA-12XSSDZ2Z42

READ is slightly better than on a single drive, but WRITE is 10X worse. It seems that either I am doing something wrong here or this testing methodology is not right. I was reading online about testing with `dd`, but that also refers mostly to spinning disks, not to SAS SSDs.

Here are some results from `dd` as well.

Write: ~532 MiB/s

Code:

    dd if=/dev/zero of=/mnt/z2/files/test/file.out bs=4096 count=1000000 oflag=direct
    1000000+0 records in
    1000000+0 records out
    4096000000 bytes transferred in 7.339412 secs (558082826 bytes/sec)

Read: ~216 MiB/s

Code:

    dd if=/mnt/z2/files/test/file.out of=/dev/null bs=4096
    1000000+0 records in
    1000000+0 records out
    4096000000 bytes transferred in 18.042795 secs (227015825 bytes/sec)
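
For anyone comparing these numbers with the Phoronix results, here is a small sketch of how the bytes/sec values printed by `dd` convert to MB/s (decimal) and MiB/s (binary). The helper name `dd_rate` is just something I made up for this post. The rounded figures quoted above (about 532 and 216) correspond to the MiB/s values.

```shell
#!/bin/sh
# Convert a bytes/sec figure, as printed by dd, into MB/s and MiB/s.
# dd_rate is a hypothetical helper name used only for this illustration.
dd_rate() {
    # $1 = bytes per second reported by dd
    awk -v bps="$1" 'BEGIN { printf "%.1f MB/s %.1f MiB/s\n", bps / 1000000, bps / 1048576 }'
}

dd_rate 558082826   # write run: 558.1 MB/s 532.2 MiB/s
dd_rate 227015825   # read run:  227.0 MB/s 216.5 MiB/s
```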

At this point I am looking for any advice or pointers to relevant material to read.
