mangelot

Dabbler

- Joined: May 24, 2016

- Messages: 11

I'm building my second FreeNAS system, but I'm not happy with the throughput to a Proxmox client: about 400 MB/s read and 300 MB/s write. The result is the same over iSCSI (LVM) and NFS.

iperf3 shows the full 9.89 Gbit/s from server to client (and 9.81 Gbit/s from client to server).

When running Bonnie++ directly on the FreeNAS pool, I get more than 2000 MB/s.
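To put those numbers side by side, here is a rough conversion. It is only a sketch: it assumes decimal units (1 Gbit = 1000 Mbit), ignores TCP and iSCSI/NFS overhead, and the helper name is made up for illustration.

```python
# Rough comparison of the figures quoted in this post (decimal units assumed).

def gbit_to_mbyte_per_s(gbit_per_s: float) -> float:
    """Convert Gbit/s to MB/s, assuming 1 Gbit = 1000 Mbit and 8 bits per byte."""
    return gbit_per_s * 1000 / 8

link_mb_s = gbit_to_mbyte_per_s(9.89)  # iperf3 result, server to client
read_mb_s = 400                        # observed read over iSCSI/NFS
write_mb_s = 300                       # observed write over iSCSI/NFS

print(f"link ceiling: {link_mb_s:.0f} MB/s")
print(f"read uses  {read_mb_s / link_mb_s:.0%} of the link")
print(f"write uses {write_mb_s / link_mb_s:.0%} of the link")
```

So the network can carry roughly 1236 MB/s and the pool does more than 2000 MB/s locally, yet the client sees only about a third of the link on reads and a quarter on writes.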

Hardware:

Dell R520, 2x quad-core E5-2407 CPUs

128 GB RAM

8x Samsung SSD 480 GB

Dell PERC H710 Mini flashed to IT mode

Chelsio CC2-S320E-SR dual-port 10GbE (cxgb driver)

Software:

Latest FreeNAS 11.3

4 vdevs configured (4x2 Samsung SSDs), without L2ARC/SLOG

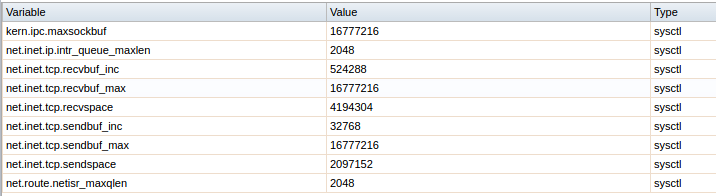

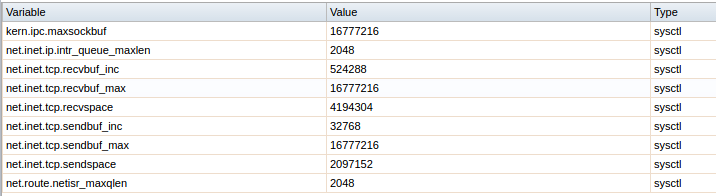

Tunables:

Enabled autotune in FreeNAS and applied the 10GbE tuning advice below.

Does anyone have suggestions as to what could be going wrong?
