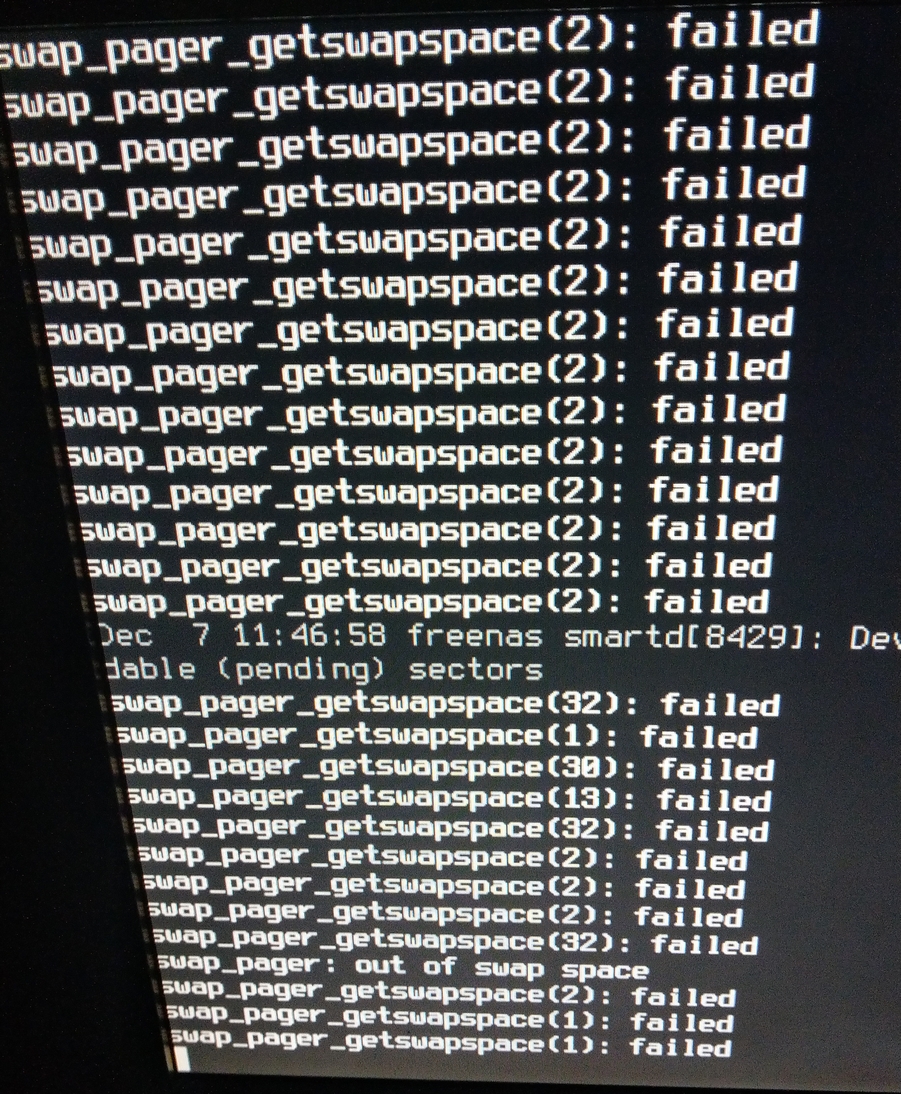

I suddenly started getting these "swap_pager_getswapspace(2): failed" errors, followed by more of the same errors with different numbers. Then I started getting "swap_pager: out of swap space".

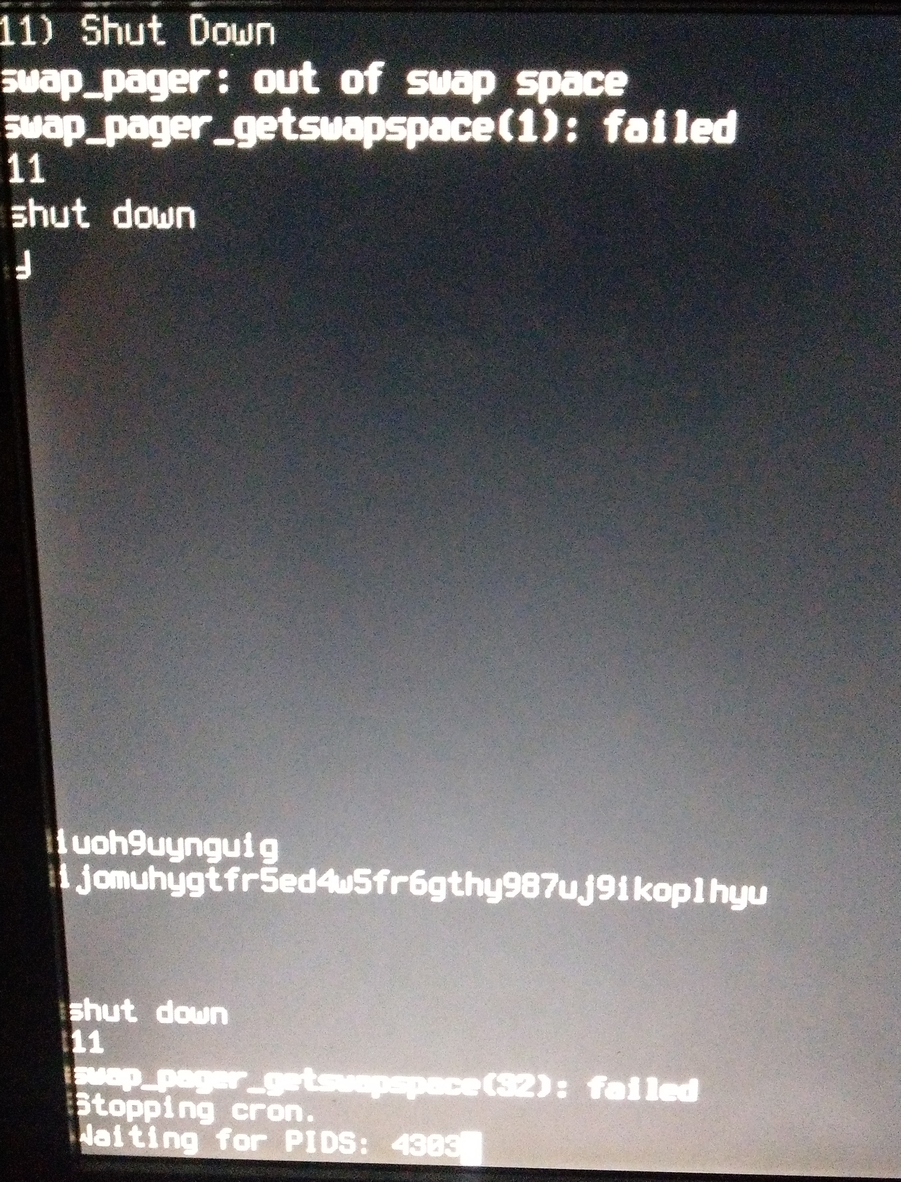

Then I couldn't log back into my server via the webUI anymore. I tried shutting it down from the console, but it wouldn't respond to anything I typed. So I tried a shutdown by pressing the power button, but it just said "Stopping cron." (whatever that means) and "Waiting for PIDS: 4303". I got tired of waiting and did a hard shutdown.

This isn't my first FreeNAS server, and I haven't seen this error on my other one. Can anyone shed some light on this issue?