I recently got an LSI 2308 HBA and haven't had a chance to test it yet. My motherboard has only one PCIe 3.0 x16 slot (no bifurcation), and it will be occupied by an Intel X710-DA2. The other PCIe slots are physically x16 but all run electrically as PCIe 3.0 x1. If I put the HBA in one of those x1 slots, what are the implications? A PCIe 3.0 x1 link runs at 8 GT/s, which works out to roughly 985 MB/s per direction after 128b/130b encoding overhead, so performance will obviously be limited. But to what extent (e.g., HDD vs. SSD, sequential vs. random 4K, parity checks, rebuild speed, etc.)?
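For a rough sense of scale, the arithmetic can be sketched as below. This is a back-of-the-envelope estimate, assuming every drive on the HBA streams at the same time (as during a parity check or rebuild) and ignoring PCIe packet/protocol overhead, so real-world throughput will be somewhat lower. The drive count used in the usage note is a hypothetical example, not a measurement.

```python
# Back-of-the-envelope PCIe 3.0 bandwidth estimate.
# Assumption: all drives stream simultaneously and share the link evenly;
# PCIe TLP/protocol overhead is ignored, so these are upper bounds.

GT_PER_LANE = 8.0      # PCIe 3.0 signalling rate per lane, in GT/s
ENCODING = 128 / 130   # PCIe 3.0 uses 128b/130b line encoding


def link_mb_per_s(lanes: int) -> float:
    """Theoretical one-direction payload bandwidth of the link, in MB/s."""
    gbit_per_s = GT_PER_LANE * ENCODING * lanes
    return gbit_per_s * 1000 / 8


def per_drive_mb_per_s(lanes: int, drives: int) -> float:
    """Bandwidth share per drive when all drives are read at once."""
    return link_mb_per_s(lanes) / drives


if __name__ == "__main__":
    for lanes in (1, 8):
        print(f"x{lanes}: {link_mb_per_s(lanes):.0f} MB/s total, "
              f"{per_drive_mb_per_s(lanes, 8):.0f} MB/s per drive with 8 drives")
```

With a hypothetical 8 drives, an x1 link leaves about 123 MB/s per drive during a full-array read, versus the card's native x8 link at roughly 7,877 MB/s in total. Random 4K on HDDs is limited by seek time and IOPS rather than link bandwidth, so the x1 slot mostly hurts sequential and all-drives-at-once workloads and anything involving SSDs.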

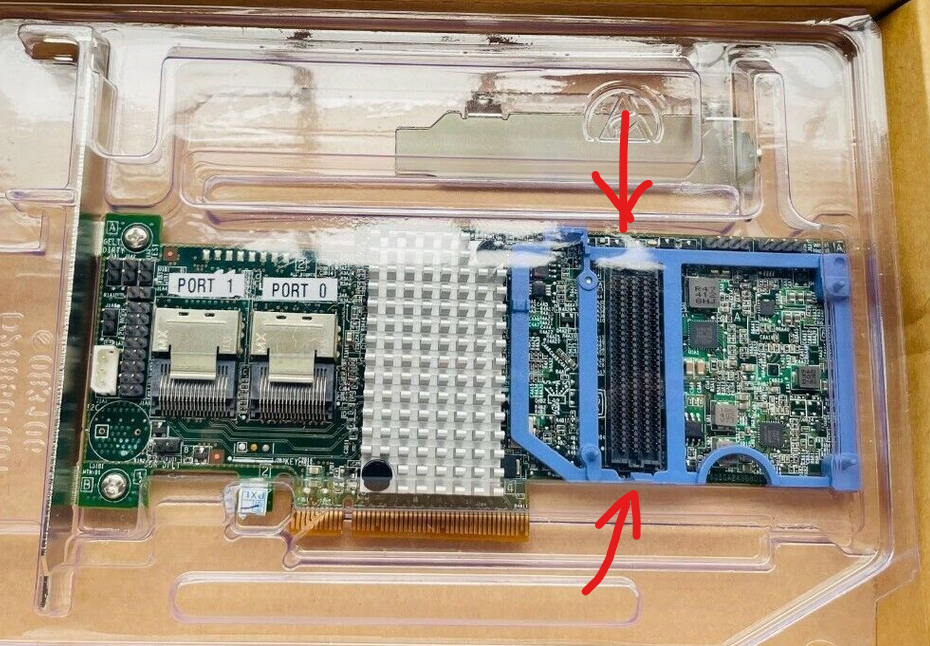

A side question: does anyone know what the slot on this IBM M5110 is for? From a quick search, it seems to be for a daughterboard, some kind of flash cache module, but I'm not sure what it actually does or when it would be useful.

And a side question, does anyone know what the slot on this IBM m5110 is for? From a quick lookup, it is for a daughter board, a flash cache module of some sort, but I'm not certain about its functionalities and use cases.