Sawtaytoes

Patron

- Joined

- Jul 9, 2022

- Messages

- 221

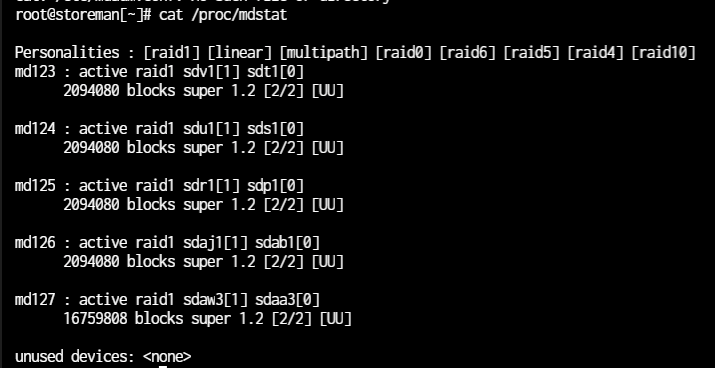

Ever since upgrading from TrueNAS Core to SCALE, I've been noticing these RAID drives show up:

None of these drives are in RAID1 configs except the last two (the boot drives in md127). The other drives are in ZFS mirrors, but two of the drives listed here belong to different zpools, and the rest are in different vdevs.

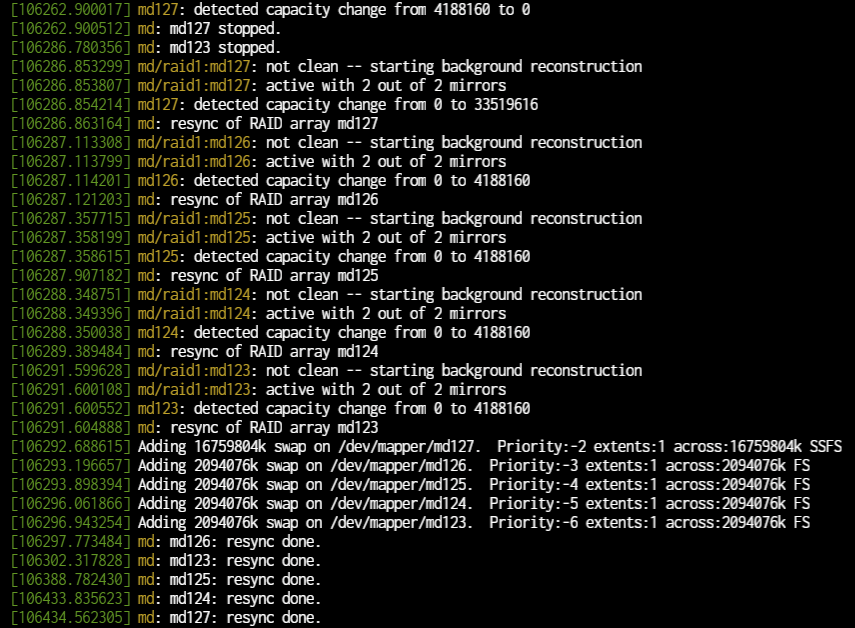

These are the log messages:

Is it adding swap space to my ZFS drives?

What can I do to fix this?
