jcpt928

This one is going to be pretty self-explanatory. TrueNAS SCALE is running solid as a VM, and I have tested NFS, SMB, etc. After working through some [senseless] issues with getting TrueNAS to actually let XCP-ng connect to it (there are clearly some standards not being adhered to in TrueNAS), I am running into this oddity.

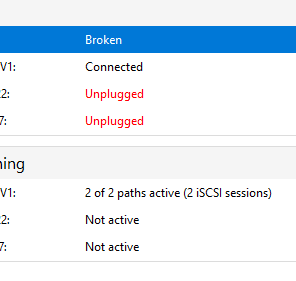

Only one host in the pool is connecting to the iSCSI LUN; the other two are not. I can confirm that both "unplugged" hosts can see the portal, the targets, and the LUN, but neither of them will connect. I can also confirm TrueNAS is receiving the login requests when they try to connect, but I am seeing this interesting tidbit in the log...

"Jan 16 19:08:21 truenas kernel: [14964]: iscsi-scst: Negotiated parameters: InitialR2T No, ImmediateData Yes, MaxConnections 1, MaxRecvDataSegmentLength 1048576, MaxXmitDataSegmentLength 262144,"

Is that "MaxConnections 1" what I think it is? If so, how do I modify it?
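
For anyone comparing these values across hosts, here is a minimal sketch that pulls the negotiated parameters out of a log line like the one above. The function name `parse_negotiated` is made up for illustration, and it assumes the line keeps the `Key Value, Key Value,` layout shown in the quoted message. As far as I understand the iSCSI spec (RFC 7143), MaxConnections is negotiated per session and limits connections within that one session, while each initiator host logs in with its own session, so this value by itself may not be what is keeping the other hosts out.

```python
# The log line exactly as quoted in the post (it ends with a trailing comma).
LOG_LINE = (
    "Jan 16 19:08:21 truenas kernel: [14964]: iscsi-scst: Negotiated parameters: "
    "InitialR2T No, ImmediateData Yes, MaxConnections 1, "
    "MaxRecvDataSegmentLength 1048576, MaxXmitDataSegmentLength 262144,"
)


def parse_negotiated(line):
    """Return the negotiated iSCSI parameters from one log line as a dict.

    Hypothetical helper: assumes "Key Value" pairs separated by commas
    after the "Negotiated parameters:" marker.
    """
    _, found, tail = line.partition("Negotiated parameters:")
    if not found:
        return {}
    params = {}
    for item in tail.split(","):
        item = item.strip()
        if not item:
            continue  # skip the empty piece left by the trailing comma
        key, _, value = item.partition(" ")
        # Keep numeric values as ints, leave Yes/No style values as strings.
        params[key] = int(value) if value.isdigit() else value
    return params


params = parse_negotiated(LOG_LINE)
for name, value in params.items():
    print(f"{name} = {value}")
```

Running the same parse on the log lines produced by each host's login attempt would make it easy to see whether the connecting host and the failing hosts negotiate anything differently.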