Hamberglar (Cadet) | Joined: Nov 7, 2022 | Messages: 6

I'm consistently running into an issue where reading or writing around 60 MB/s of data to or from my NAS causes both the NAS and the client to lose all network connectivity for a short time, long enough that the SMB share is closed because of the connectivity loss.

The network itself is, as far as I can tell, fine during this time. However, from the client computer, you lose connectivity to everything, including the gateway.

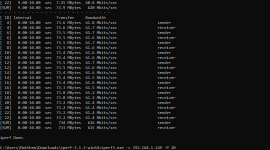

The NAS and client are stable until the transfer reaches about 50-60 MiB/s, according to the readout on the dashboard. At that point, ping times to any local network resource (such as the gateway or the NAS itself) jump from the typical <1 ms to about 25 ms.
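For reference, the dashboard threshold can be put in line-rate terms. The following is a minimal Python sketch of the unit conversion; the helper name is hypothetical, and the comparison assumes the Intel i210 has negotiated a 1 Gbit/s link.

```python
# Convert a dashboard throughput reading (MiB/s) into line-rate terms (Mbit/s).
MIB = 1024 * 1024  # bytes in one mebibyte


def mib_per_s_to_mbit_per_s(mib_per_s: float) -> float:
    """Hypothetical helper: MiB/s -> megabits per second (10**6 bits)."""
    return mib_per_s * MIB * 8 / 1_000_000


# Print the equivalent line rate for both ends of the observed 50-60 MiB/s range.
for rate in (50, 60):
    print(f"{rate} MiB/s = {mib_per_s_to_mbit_per_s(rate):.0f} Mbit/s")
```

This prints 419 Mbit/s and 503 Mbit/s, roughly half of a 1 Gbit/s link, so (assuming a gigabit link) the stall begins well before the link itself should be saturated.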

I've tested and encountered this issue with both SMB and FTP, so as far as I can tell it's not a protocol issue.

I did a bit of research and found that the Realtek NIC I was using is not supported, so I switched to an explicitly supported Intel chipset (i210). The issue persists. I have disabled the onboard NIC and all other non-essential onboard functions such as RGB and sound. MemTest86 passed, and all temps are ice cold. It's a brand-new machine with a brand-new install, and the issue has been present since day 1. The client machine doesn't seem to be part of the equation: I've tried several computers, and every one of them experiences this problem with my NAS.

When the crash happens, nothing is written to /var/log/messages or any other log that I can find. I've attached a ping test that shows the severity of the issue.
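If the ping test is saved as a text log, a small parser can summarize how many replies came back, how many requests timed out, and how often latency spiked. The following is a minimal Python sketch, assuming standard Windows or BSD/Linux `ping` output; the function name, the 10 ms spike threshold, and the sample addresses are hypothetical.

```python
import re
from statistics import median

# Matches the RTT field in BSD/Linux ("time=0.412 ms") and
# Windows ("time=25ms", "time<1ms") ping output.
RTT_RE = re.compile(r"time([=<])([\d.]+)\s*ms")


def summarize_ping(lines: list[str], spike_ms: float = 10.0) -> dict:
    """Hypothetical helper: summarize replies, timeouts and RTT spikes in a ping log."""
    rtts = []
    timeouts = 0
    for line in lines:
        m = RTT_RE.search(line)
        if m:
            # Windows "time<1ms" is recorded as 1.0 ms (an upper bound).
            rtts.append(float(m.group(2)))
        elif "timed out" in line.lower():
            timeouts += 1
    return {
        "replies": len(rtts),
        "timeouts": timeouts,
        "median_ms": median(rtts) if rtts else None,
        "spikes": sum(1 for r in rtts if r >= spike_ms),
        "max_ms": max(rtts) if rtts else None,
    }


# Hypothetical sample lines mixing Windows and BSD/Linux formats.
sample = [
    "Reply from 192.168.1.1: bytes=32 time<1ms TTL=64",
    "Reply from 192.168.1.1: bytes=32 time=25ms TTL=64",
    "Request timed out.",
    "64 bytes from 192.168.1.1: icmp_seq=3 ttl=64 time=0.412 ms",
]
print(summarize_ping(sample))
```

Lines such as "Destination host unreachable" are neither replies nor timeouts in this sketch; they would need their own match if the log contains them.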

- TrueNAS-13.0-U3
- AMD Ryzen 5 5600G
- ASRock A520M-ITX/AC
- Intel i210 NIC
- Samsung 860 EVO (TrueNAS system drive)
- 3x 8TB MaxDigitalData 7200RPM
- 1x 16GB Intel Optane (cache)
- 16GB non-ECC memory

I found a few other threads that mentioned similar issues, but the "solution" always seemed to be switching to a supported NIC, which I've done.

Unfortunately, I can't think of a convenient way to test whether this also happens with a different OS, since these drives are all I have at the moment.

I'd be happy to share any additional information; I'm a TrueNAS novice.
