beezone

Cadet

- Joined: Feb 7, 2018
- Messages: 5

Hi everyone,

I have a problem but don't even know how to start solving it.

I have a Time Machine backup server running FreeNAS-11.1-U1: CPU E5-2609 v3 @ 1.90GHz, 96 GB RAM, 36 x 6 TB 7200 rpm Toshiba Enterprise SATA drives. The disks are split into 6 raidz1 volumes of 6 drives each. Each backup user has a personal dataset, to isolate users from one another. Every day I hit the same problem: the disk busy counters sit at almost 100% during working hours, and as a result backup performance is terrible. The Macs connect over AFP shares. Some backups consist of 40,000+ files of 8 MB each.
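To put rough numbers on the setup, here is a back-of-the-envelope sketch. It assumes each volume is a single 6-drive raidz1 vdev (one drive's worth of parity per volume), uses decimal units, and ignores ZFS metadata and padding overhead, so the real figures will be somewhat lower. The variable names are only for illustration.

```python
# Rough sizing for the layout described above.
# Assumptions (not measured): one raidz1 vdev per volume, decimal units
# (1 TB = 1000 GB), no allowance for ZFS metadata or padding overhead.

DRIVES = 36
DRIVE_TB = 6
VOLUMES = 6
DRIVES_PER_VOLUME = DRIVES // VOLUMES   # 6 drives in each volume
PARITY_PER_VOLUME = 1                   # raidz1 keeps one drive's worth of parity

raw_tb = DRIVES * DRIVE_TB
usable_per_volume_tb = (DRIVES_PER_VOLUME - PARITY_PER_VOLUME) * DRIVE_TB
usable_total_tb = usable_per_volume_tb * VOLUMES

# Size of one of the larger backups: 40,000 files of 8 MB each.
FILES_PER_BACKUP = 40_000
FILE_MB = 8
backup_gb = FILES_PER_BACKUP * FILE_MB / 1000

print(f"raw capacity:        {raw_tb} TB")
print(f"usable per volume:   {usable_per_volume_tb} TB")
print(f"usable total:        {usable_total_tb} TB")
print(f"one 40k-file backup: {backup_gb:.0f} GB")
```

So one of the larger backups is roughly 320 GB spread over 40,000 files, against about 30 TB of usable space per volume under these assumptions.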

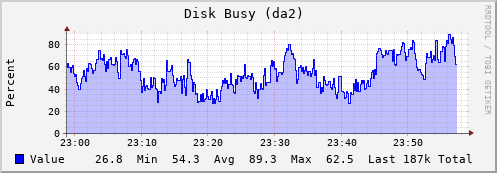

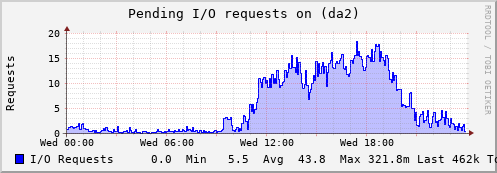

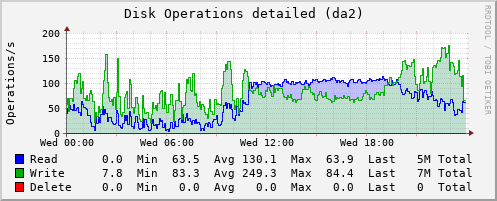

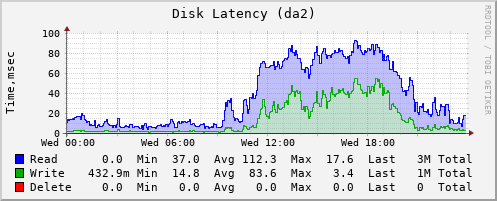

24-hour graphs of disk busy time, by volume.

Is it possible to increase the performance of the disk subsystem? Which of these might help, and which won't: adding a ZIL/SLOG device, changing ZFS settings, or maybe changing the block size? Which advanced benchmarks or stats can I collect to understand what to do?

Or can nothing help me, and I need to find a cozy corner and cry?
