We repurposed two older Dell R200 1U servers as FreeNAS storage intended to hold Veeam backups. Each chassis can hold only two drives, but I squeezed a 32 GB SSD in there as well, and it boots FreeNAS 9.2.0 x64 from that. Each server has 4 GB of RAM and two 4 TB WD Red SATA drives in RAID-0. We are using RAID-0 to get a volume large enough to hold our backups.

The second NIC on each server is connected directly to the second NIC on the other, and ZFS replication runs over that link to keep the traffic separate. Since one chassis can't hold enough drives for RAID-5 or RAID-10, this is sufficient for now, and it achieves its goal: if one system goes down, I still have the other to use while I repair the original.

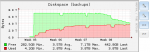

This volume used to show around 7.1 TB of maximum space, but today I noticed it is only showing 4.10 TB. I went in and found 9 snapshots, and I suspect they are eating up the space. The thing is, under Periodic Snapshot Tasks I have it set to create one snapshot a day and keep it for one day. It's enough to snapshot after all the nightly backups have completed, and then it replicates to the other appliance. Data is only written at night, so I might as well snapshot it the next morning after the backups have been stored.

This was working great for a few weeks, so why are the snapshots no longer being deleted? Is that what reduced the maximum capacity on the shared volume from about 7 TB to 4.10 TB?
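
To check whether the snapshots account for the missing space, here is a minimal sketch of how I could total what they hold. It assumes the listing comes from something like `zfs list -H -p -t snapshot -o name,used` (tab-separated, sizes in bytes; whether `-p` is available on this FreeNAS release is an assumption). The dataset name `tank/veeam` and the snapshot names are hypothetical. Note that a snapshot's `used` value only counts space unique to that snapshot, so the total here can understate what all snapshots hold together.

```python
def parse_snapshot_usage(listing):
    """Map each snapshot name to the bytes reported in its 'used' column.

    Expects one snapshot per line: "<name>\t<used-in-bytes>".
    """
    usage = {}
    for line in listing.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, used = line.split("\t")
        usage[name] = int(used)
    return usage


def total_bytes(usage):
    """Sum the per-snapshot 'used' values."""
    return sum(usage.values())


if __name__ == "__main__":
    # Hypothetical sample listing; real output would come from the zfs command.
    sample = (
        "tank/veeam@auto-20140101.0800-1d\t1099511627776\n"
        "tank/veeam@auto-20140102.0800-1d\t2199023255552\n"
    )
    usage = parse_snapshot_usage(sample)
    for name, used in sorted(usage.items()):
        print(f"{name}: {used / 2**40:.2f} TiB")
    print(f"total: {total_bytes(usage) / 2**40:.2f} TiB")
```

If the total comes out close to the roughly 3 TB that went missing, that would point at the snapshots rather than at the volume itself.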
