Hi. I am getting slow write speeds when copying files from Windows desktop to FreeNAS via SMB share over 10Gb copper. Any suggestions? Thank you.

I've tried adding the 10Gb tunable settings from this page, but saw no change.

jro.io (Building a NAS)

I get similar results with the Windows Explorer copy dialog and TeraCopy.

Results (44GB single file)

From Windows desktop to FreeNAS: 169 MB/s

From FreeNAS to Windows desktop: 547 MB/s

I also tried copying to and from my Synology DS1812 (6-disk SHR-2).

From Windows desktop to Synology: 325 MB/s

From Synology to Windows desktop: 439 MB/s
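To put these numbers in context against the 10Gb link, here is a rough sketch that converts each result to Gbit/s and estimates how long the 44GB copy takes. It assumes "MB/s" means 10^6 bytes per second and "GB" means 10^9 bytes (Windows may actually be reporting binary units), and it ignores SMB and TCP overhead. The function names are made up for illustration.

```python
# Measured throughput from the post, in MB/s.
# Assumption: MB/s means 10**6 bytes per second.
RESULTS_MB_S = {
    "Windows -> FreeNAS": 169,
    "FreeNAS -> Windows": 547,
    "Windows -> Synology": 325,
    "Synology -> Windows": 439,
}

LINK_GBIT_S = 10   # nominal 10GbE rate, protocol overhead ignored
FILE_SIZE_GB = 44  # test file size; assumption: 1 GB = 10**9 bytes


def to_gbit_s(mb_per_s):
    """Convert decimal megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


def link_utilization(mb_per_s, link_gbit_s=LINK_GBIT_S):
    """Fraction of the nominal link rate used by a transfer."""
    return to_gbit_s(mb_per_s) / link_gbit_s


def copy_seconds(size_gb, mb_per_s):
    """Time in seconds to copy size_gb gigabytes at mb_per_s."""
    return size_gb * 1000 / mb_per_s


if __name__ == "__main__":
    for label, speed in RESULTS_MB_S.items():
        print(
            f"{label}: {to_gbit_s(speed):.2f} Gbit/s, "
            f"{link_utilization(speed):.0%} of nominal 10GbE, "
            f"{copy_seconds(FILE_SIZE_GB, speed):.0f} s for the 44GB file"
        )
```

Under those assumptions, the 169 MB/s write to FreeNAS works out to about 1.35 Gbit/s, roughly 14% of the nominal link rate, while the 547 MB/s read is about 4.4 Gbit/s.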

Here is my setup

FreeNAS-9.10.2-U6

X9SRL-F

E5-1620v2

96GB RAM

12-disk 8TB RAIDZ3 w/ 1MiB recordsize

X540-T2 NIC

Windows 10 build 1809

C246-WU4

E-2288G

32GB RAM

P3600 SSD

X550-T2 NIC

Netgear XS505M

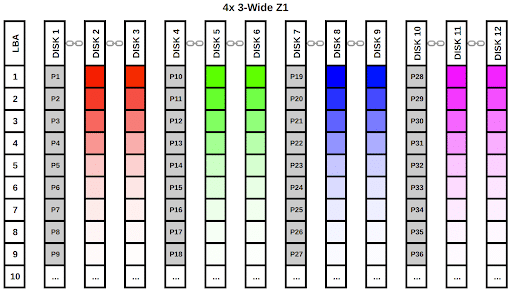
