Heyo!

I'm running a Proxmox server with TrueNAS Scale as a VM. The hypervisor is running on an AMD Epyc 7662 with 512GB of RAM and a 10G NIC.

However, I don't get anywhere near 10G speeds, although I do get faster speeds when I allocate an obscene number of cores.

I did three quick tests to illustrate my issue:

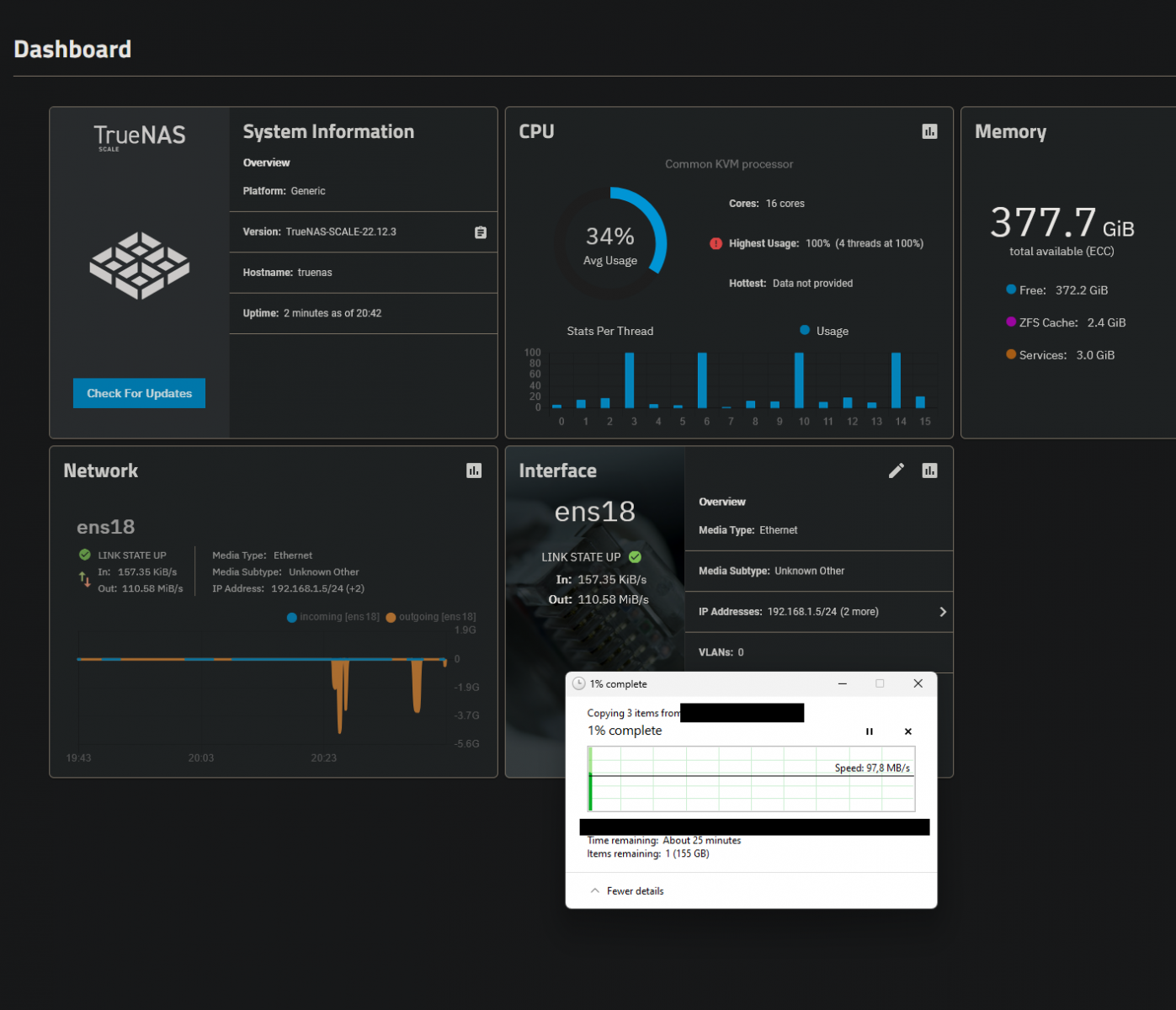

1. Allocating 16 threads to the VM.

This sees my SMB throughput limited to below 1G speeds, at around 95-100 MB/s.

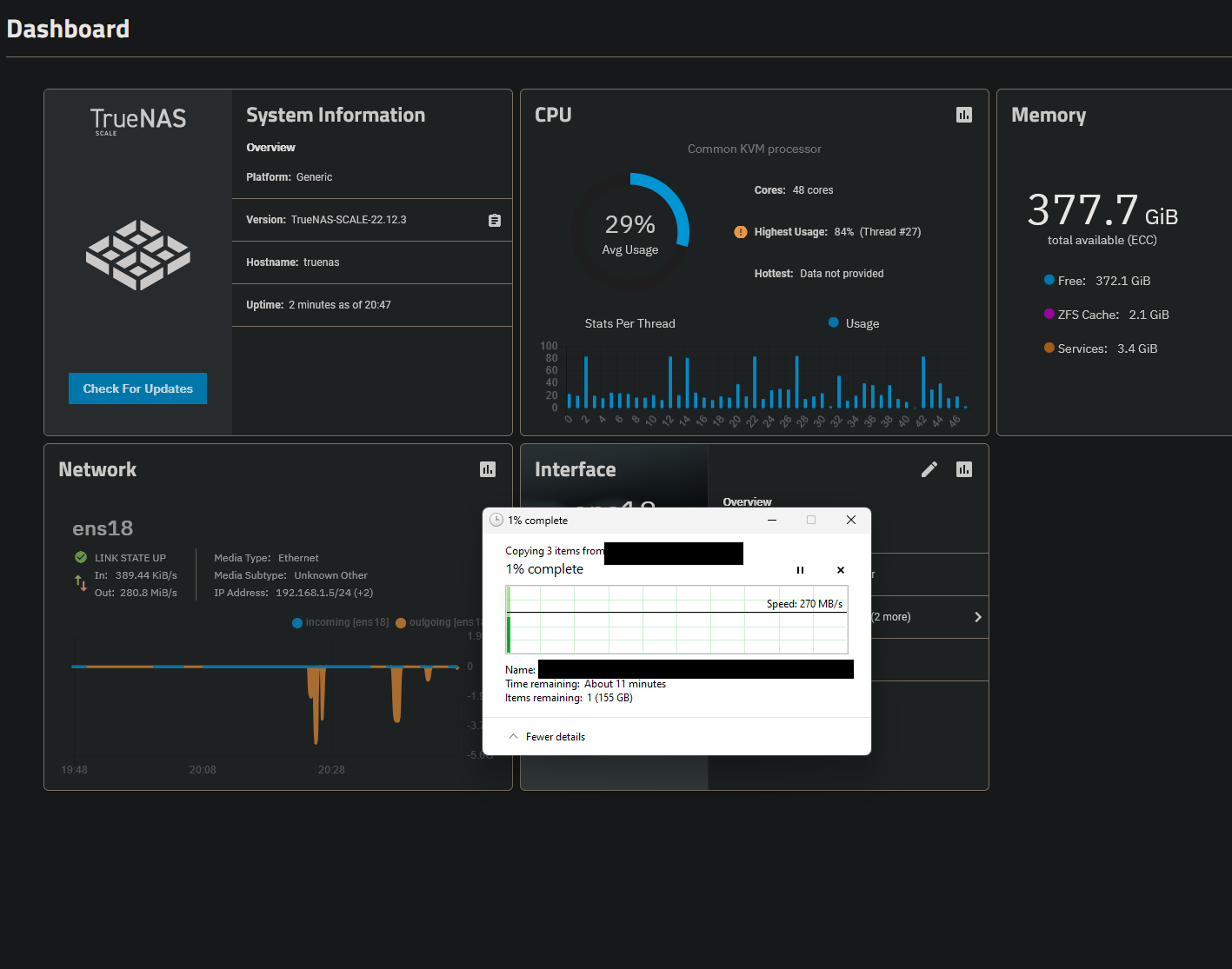

2. Allocating 48 threads.

This gets me up to around 270 MB/s.

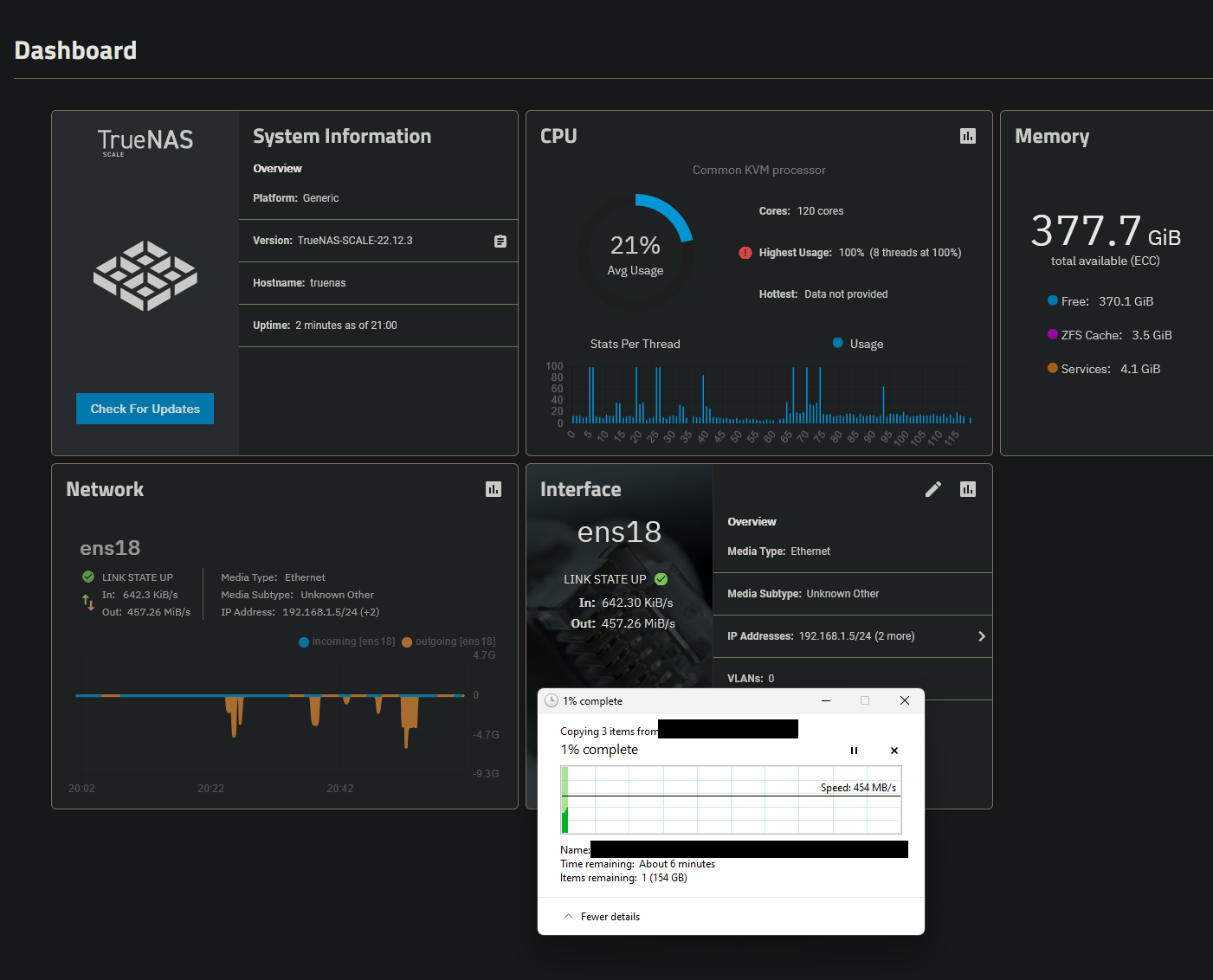

3. 120 threads.

Now sustaining 450-500 MB/s, which is still way below the capabilities of the pool.
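
To put the three results next to the 10G link, here is a quick back-of-the-envelope conversion. It is only a sketch: it assumes MB means 10^6 bytes, takes the upper end of each range I reported, and ignores SMB/TCP protocol overhead. The helper name `mb_per_s_to_gbit` is just something made up for this post.

```python
# Rough comparison of the measured SMB throughput against a 10 Gbit/s link.
# Assumptions: 1 MB = 10**6 bytes, protocol overhead ignored.
LINK_GBIT = 10


def mb_per_s_to_gbit(mb_per_s):
    """Convert megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


# threads allocated to the VM -> observed throughput in MB/s (upper end of each range)
tests = {16: 100, 48: 270, 120: 500}

for threads, mb_per_s in tests.items():
    gbit = mb_per_s_to_gbit(mb_per_s)
    share = gbit / LINK_GBIT
    print(f"{threads} threads: {mb_per_s} MB/s = {gbit:.2f} Gbit/s ({share:.0%} of line rate)")
```

So even with 120 threads the VM only reaches about 4 Gbit/s, well under half of what the NIC should be able to carry.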

I also tried switching from letting Proxmox handle the NIC to passing it through to the VM, but got the same result.

Any ideas how to not have to waste 100+ threads to get decent throughput? I assume I made some sort of config error to make this CPU perform so poorly.

And also, why can a beefy Epyc CPU be beaten in throughput by an Atom CPU?
