gzartman

If it shows each device averaging at least 25MB/sec, that's about what I'd expect for PCI-X.

It's a PCI-X card PLUGGED into a plain PCI slot, so I'm hamstrung by the limitations of the old PCI technology. I bought the box a little over a week ago and didn't discover until afterward that some idiot had put a PCI-X board in a motherboard with a PCIe interface. Once I get my PCIe RAID card, let's have another look at the system.
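For anyone wondering why a plain PCI slot hurts so much, here's a rough back-of-the-envelope sketch. It assumes the standard peak figures (32-bit/33 MHz for conventional PCI, 64-bit/133 MHz for the fastest PCI-X) and an even split of the bus across drives. The function names and the drive count are made up for illustration, and real-world throughput will be lower than these theoretical peaks.

```python
def bus_peak_mb_s(width_bits, clock_mhz):
    """Theoretical peak of a parallel bus: bytes per transfer times clock rate."""
    return width_bits / 8 * clock_mhz


def per_device_share(bus_mb_s, n_devices):
    """Even split of a shared bus across devices (simplifying assumption)."""
    return bus_mb_s / n_devices


# Conventional PCI: 32 bits wide at ~33.33 MHz, about 133 MB/s for the whole bus.
pci = bus_peak_mb_s(32, 33.33)

# PCI-X at its fastest: 64 bits wide at ~133.33 MHz, roughly 8x conventional PCI.
pcix = bus_peak_mb_s(64, 133.33)

# Hypothetical drive count, not taken from the thread.
drives = 6

print(f"PCI peak:   {pci:.0f} MB/s, {per_device_share(pci, drives):.1f} MB/s per drive")
print(f"PCI-X peak: {pcix:.0f} MB/s, {per_device_share(pcix, drives):.1f} MB/s per drive")
```

With every drive on the card sharing the one bus, a couple dozen MB/sec per device is about the ceiling you'd expect once the card drops back to plain PCI speeds.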

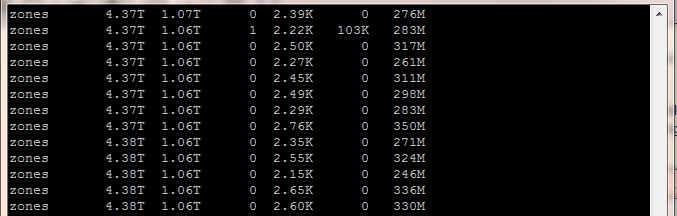

I plugged 6x1TB drives into the motherboard SATA ports and created a 6-drive raidz pool. Here's my iostat for a dd write to disk, not bad:

I want to use all 12 bays in my 2U box, so I need that IBM M1505 card. The PCI-X RAID card is getting chucked.