Don't think his competence in systems engineering is in question.

Absolutely not in question. Now we

KNOW he's not competent as a systems engineer. Heh.

See, the problem here is that "developers" often confuse themselves for systems engineers, but they so rarely are. They write code. They're even good at it. But it is only one small cog of making large, complex systems that work well.

So since you've been hindered by an incompetent opinion, I will give you one clue as to what's going on. Then I'm off, because I don't really have the time this month, and I'm not going to force clue upon you.

ZFS is reliant on software to do things that hardware normally does. So. Your problem, I'm betting, is latency. A little latency dramatically reduces the speed at which things move.

We have seen this time and time again where users come in with a slowish platform (and my guess is that the L5639 has per-core performance around 2/3rds that of what we consider a reasonable CPU, the E3-1230). They complain that it is sooooo slow. They upgrade to a platform that is only 50% faster yet their speeds double.

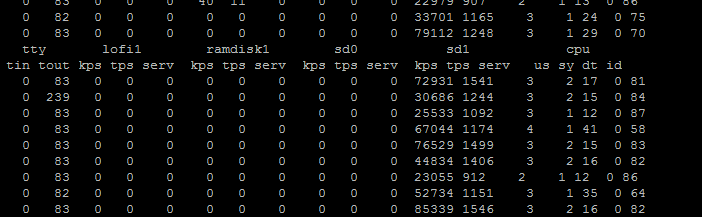

So why is that? Because when you look at their CPU stats, it may not LOOK like the CPU is the bottleneck... the trite and overly simplistic view you've taken...

For large amounts of data in from the net, it floods the TCP buffer and essentially becomes similar to a blocking operation. So let's simplify our discussion by pretending that network I/O is synchronous (which it most certainly isn't, but it /resembles/ being for this discussion).

So let's think about what happens here when some data arrives from the net. The CPU is idle. Data comes. CPU gets busy, finding blocks and calculating parity, preparing a transaction group. This should in theory max out CPU, but it is largely not threaded so it is basically maxxing out a single CPU core for a small chunk of time. Acknowledgement is sent back over the network. CPU is idle. At some future point, CPU will schedule a transaction group flush to the disk (once a txg is full).

This repeats many times a second, processing data coming in from the network. Without even considering the act of transaction group flushing, can you see that there are periods where the CPU is idle?

And then there's the transaction group flush. When writing large amounts of data, the CPU is forced to idle pending completion of the previous transaction group. CPU speed is mostly irrelevant here; flushing a txg is a lightweight operation in that it is just shuttling data out to disk.

So here's the thing. Your CPU not being 100% busy is no shocker. But a faster CPU means that the amount of work done between receiving data and being done processing that data is reduced, and handling the data faster means that your NAS goes faster. THAT's the thing that faster CPU helps with.

And in practice it is much more complicated than this... it isn't linear with the speed of the CPU, due to the clever layers of caching and buffering built in to multiple layers of UNIX and ZFS. I just wanted you to be able to have a chance to grasp what's going on, so this discussion is very simplified.

Remember, too, ZFS is replacing an expensive RAID controller with silicon designed specifically to do a task ... replacing that with a general-purpose CPU. Sun's bet was that a CPU was going to be cheaper than the specialized hardware. That does not mean that every CPU is fantastic for use with ZFS! One still has to pick one that's suited to the requirements, in much the way that if you buy a 4 port hardware RAID controller to run eight drives, that isn't going to work out so well for you. People just naturally GET that latter example, but it is harder for people to wrap their head around the qualities that make for a great ZFS CPU. Prefer clock speed over core count. Prefer turbo boost. Two cores is probably fine although four cores helps reduce contention. Most filers won't ever make good use of more than four cores. If you start adding in compression and encryption, increase the CPU.

So. Your CPU does not need to be pegged at 100% for me to estimate that it is likely to be introducing additional latency. It is hard to know how much, without actually doing some extensive testing. But it was one of two things I noticed that would obviously impact performance, the other of which was your choice to employ RAIDZ1 with six disks. And latency is a performance-killer. I wouldn't be shocked if a 5 disk RAIDZ1 array on an E3-1230 with 32GB was more than twice as fast.

Many of us here in the forum have seen this sort of problem. We had some very nice Opteron 240 based storage servers from the mid-2000's that were fully capable of gigabit goodness under FreeBSD+UFS, and when I upgraded one to FreeNAS, I was shocked at the horrible performance. It is because with ZFS, the CPU really does matter. It is

infuriating and makes planning more complex and difficult. I feel the pain. But it is what it is. I didn't write it. And I've said it before, ZFS is a resource PIG. But if you give it the resources to do the job, it will do an awesome job. It is just too bad that those resources are massively larger than your average NAS. Only you can make the call as to whether or not you wish to provide those resources. Hopefully this message will help you and your developer friend understand why it needs CPU resources.