Hi,

I have two TrueNAS SCALE builds, one running 22.12.3.3 and the other running 23.10.2. I want to copy all data from the 22.12.3.3 build to the 23.10.2 build. Both servers are directly connected over a 10 Gb fiber link (Intel X710-DA2 Ethernet adapters on both sides). On both sides an alias/IP address is configured directly on that NIC.

I have configured an SSH connection with an SSH keypair on the sender, using the IP address configured on the destination's 10 Gb NIC. I then configured an rsync task using that SSH connection, which is PUSHING the data to the destination TrueNAS.

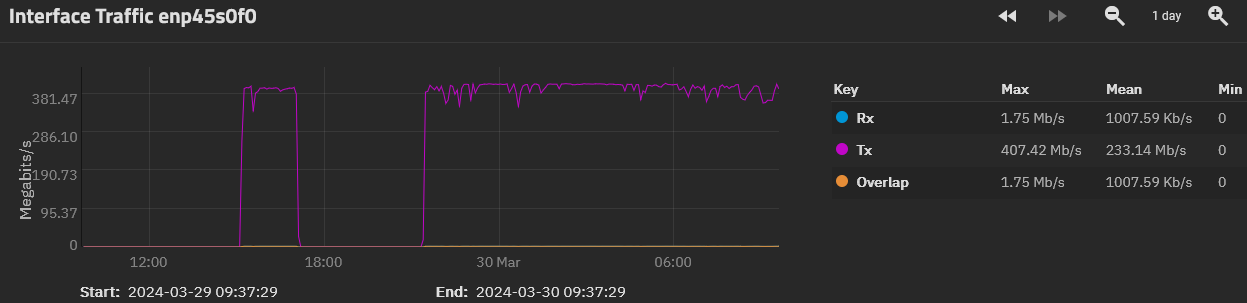

On the sending system, the 10 Gb NIC shows it sending at 233 Mb/s avg and 407 Mb/s max:

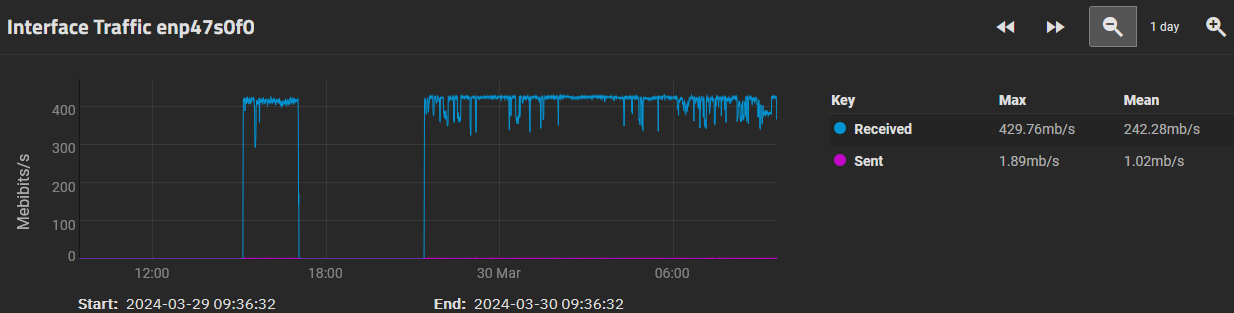

On the receiving system, the 10 Gb NIC shows it receiving at 242 Mb/s avg and 429 Mb/s max.

I have transferred a dataset of 2 TiB. Assuming a transfer rate of 230 Mb/s, I would have expected this to take (2*1000*1000)/230/60/60, or approximately 2.5 hours. But it actually took nearly 10 hours.
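The estimate above can be spelled out as a short Python sketch. It is only a rough check under stated assumptions: the formula in the post divides megabytes by the rate, so it implicitly reads the 230 figure as megabytes per second, whereas a NIC graph labelled Mb/s may well mean megabits per second. The variable names are made up for illustration, and the sketch ignores rsync and SSH overhead entirely.

```python
# Rough transfer-time estimate for a 2 TiB dataset.
# Assumption: the "230" read off the NIC graph is either MB/s or Mb/s;
# both readings are computed so they can be compared with the observed 10 h.

DATASET_TIB = 2
RATE = 230            # value from the NIC graph, unit uncertain
OBSERVED_HOURS = 10   # "nearly 10 hours" from the post

# The approximation used in the post: 2 TiB ~ 2 * 1000 * 1000 MB.
size_mb_approx = DATASET_TIB * 1000 * 1000
hours_if_megabytes = size_mb_approx / RATE / 3600

# Exact size in bytes, then the same rate read as megabits per second.
size_bytes = DATASET_TIB * 1024**4
hours_if_megabits = size_bytes * 8 / (RATE * 1_000_000) / 3600

# Effective throughput implied by the observed duration, in MB/s.
effective_mb_s = size_bytes / 1_000_000 / (OBSERVED_HOURS * 3600)

print(f"if 230 MB/s: {hours_if_megabytes:.1f} h")
print(f"if 230 Mb/s: {hours_if_megabits:.1f} h")
print(f"10 h implies about {effective_mb_s:.1f} MB/s")
```

Under these assumptions the two readings give roughly 2.4 hours and 21.2 hours, and a 10-hour run corresponds to about 61 MB/s, so which unit the graph uses matters a lot when judging whether the transfer is slow.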

The disks in the destination TrueNAS are Seagate Exos X20 20TB, which should be fast enough to handle those 230 Mb/s.

Am I doing something wrong? Is there anything I can do to improve the speed? Otherwise transferring the bigger datasets will take weeks :(

Best regards,

AMiGAmann
