jafin (Explorer, joined May 30, 2011, 51 messages)

I'm attempting to set up a new FreeNAS system. The build is:

HP ML10 v2 box, 16GB RAM, 6 x 3TB Red drives

Booting off an 8GB USB stick

One volume configured as RAIDZ2.

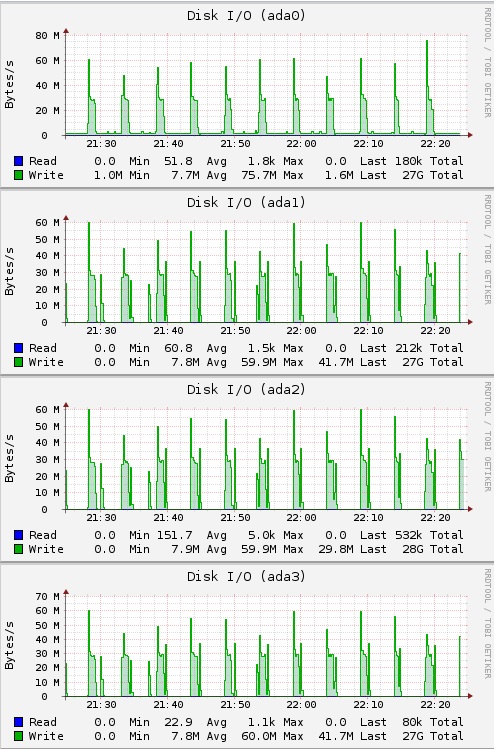

When I run an rsync from an existing FreeNAS 9.10 box over to this system, I'm noticing spikes and dips in disk write throughput. Reads show a similar pattern.

I have also tried transferring data from another system and saw the same behaviour, which rules out the source NAS as the culprit.

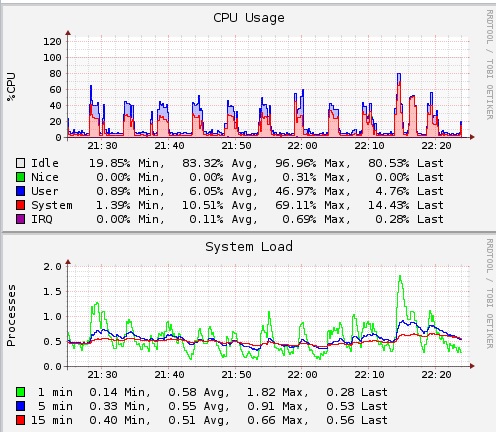

Graphs show minimal CPU activity and unsaturated network.

The files being copied are all large (>1GB); there are no small files.

What I noticed is that a transfer starts and hovers around the 100MB/s mark. After approximately 1 minute it dips to ~1MB/s, sits there for a little while, then goes back up to the 100MB/s mark.
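To pin down how often the dips happen, a small filter like the one below could be run over a log of throughput samples. This is only a rough sketch: the name `flag_dips`, the two-column input format (elapsed seconds, then MB/s) and the 10MB/s default threshold are all assumptions for illustration, not something FreeNAS produces out of the box.

```shell
# Hypothetical helper: read "elapsed_seconds rate_in_MB/s" pairs on stdin
# and print only the samples whose rate is below the threshold.
# The threshold is the first argument and defaults to 10 (MB/s).
flag_dips() {
    awk -v limit="${1:-10}" '($2 + 0) < (limit + 0) { print $1, $2 }'
}
```

Usage would be something like `flag_dips 10 < samples.txt`, where `samples.txt` is a hypothetical file holding one sample per line.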

I've run dd tests on the server, and the results are mixed.

dmesg doesn't show anything interesting.

I've enabled SMART and nothing is coming back with any errors.

The graphs above are from an rsync from the other NAS. When that NAS transfers to a different system, it saturates the gigabit LAN without these dips.

I don't know what else to test or toggle to resolve the issue.

Code:

    [root@freenas] /mnt/tank1/temp# dd if=/dev/zero of=ddfile bs=8k count=4000000
    4000000+0 records in
    4000000+0 records out
    32768000000 bytes transferred in 29.260153 secs (1119884778 bytes/sec)

    [root@nas2] /mnt/tank1/temp# dd if=ddfile of=/dev/zero bs=8k count=4000000
    4000000+0 records in
    4000000+0 records out
    32768000000 bytes transferred in 60.502920 secs (541593694 bytes/sec)
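For comparison with the ~100MB/s network rate, the dd figures above can be converted to MB/s. A minimal sketch, assuming decimal megabytes (1MB = 1,000,000 bytes); the helper name `to_mbs` is made up for illustration:

```shell
# Hypothetical helper: convert a byte count and an elapsed time in seconds
# into decimal MB/s, rounded to one decimal place.
to_mbs() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.1f\n", bytes / secs / 1000000 }'
}

to_mbs 32768000000 29.260153   # write test
to_mbs 32768000000 60.502920   # read test
```

That works out to roughly 1120MB/s for the write and 542MB/s for the read, both well above what a gigabit link can deliver.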
