JoeKickass

Dabbler

- Joined: Nov 15, 2021
- Messages: 12

After recovering data from a clone of one of my snapshots, I went ahead and deleted the clone dataset and all of my snapshots, yet the pool's used space did not go down.

Every command I try says nothing is using the space: no snapshots, no folders. It's as if there is 7TB of dead space on the pool...

Please let me know if anyone has any ideas or has fixed a similar issue!

"zfs remap" seems to be deprecated so I wasn't able to try that, but here are the clues I've found so far:

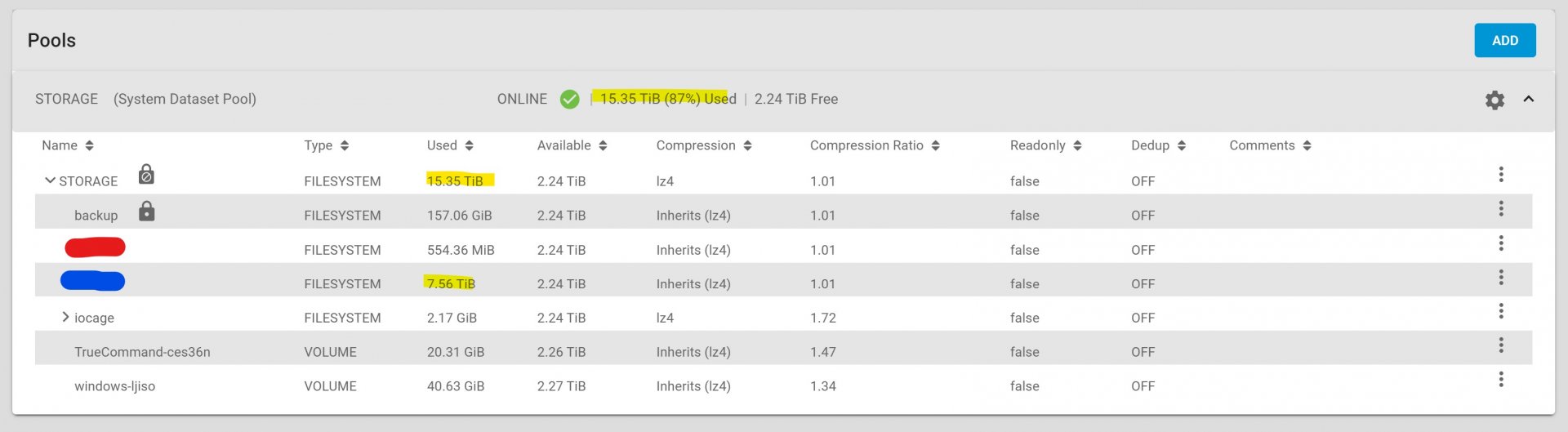

Used space is too high (about 7TB, which seems never to have been reclaimed from the clone that was deleted)

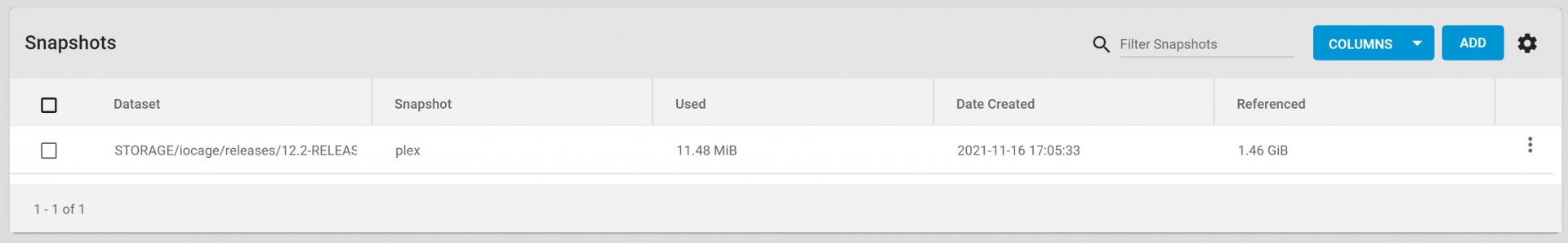

No snapshots:

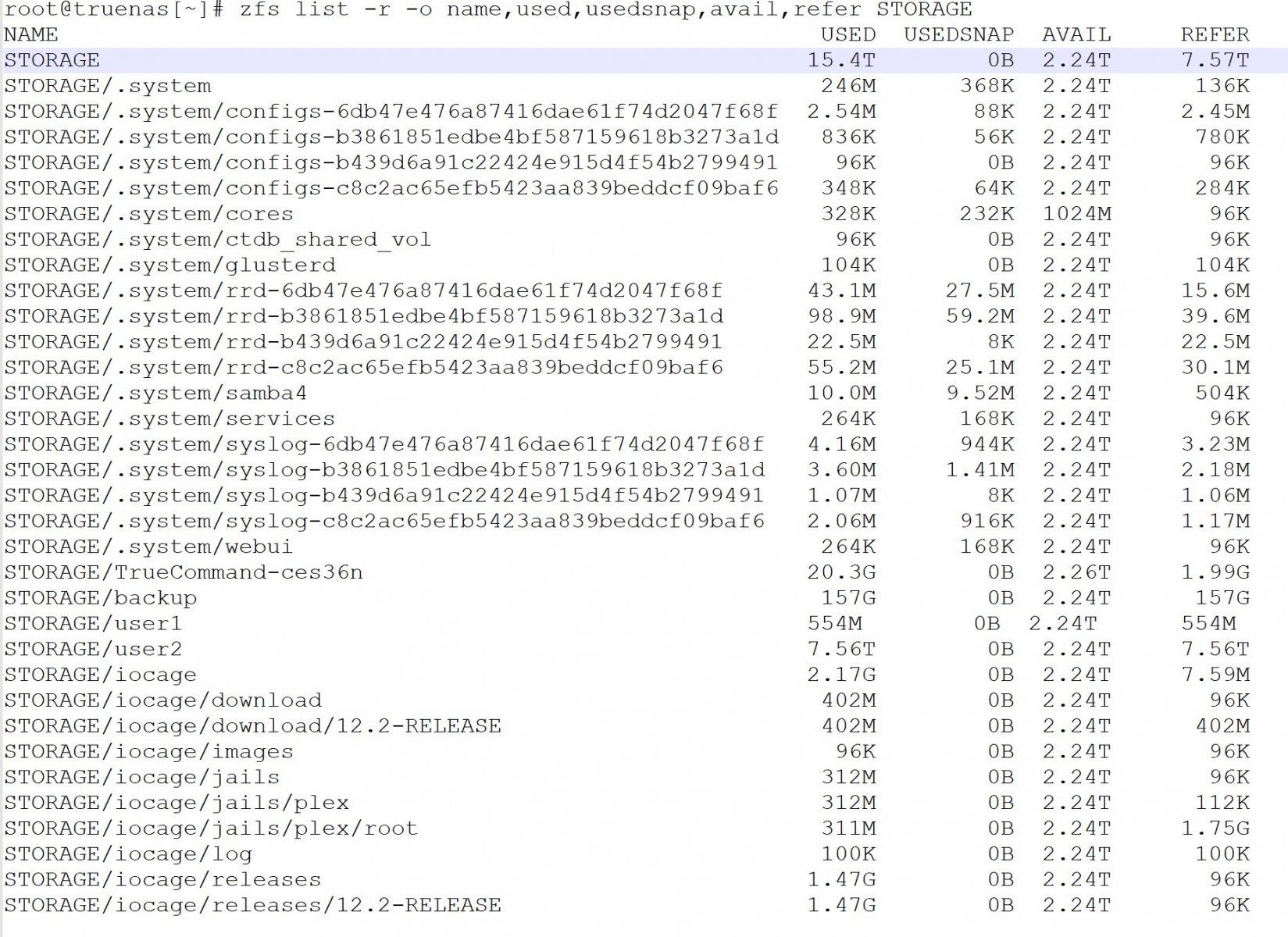

zfs list shows nothing using the space: 15TB used, but only 7.5TB in the REFER column?

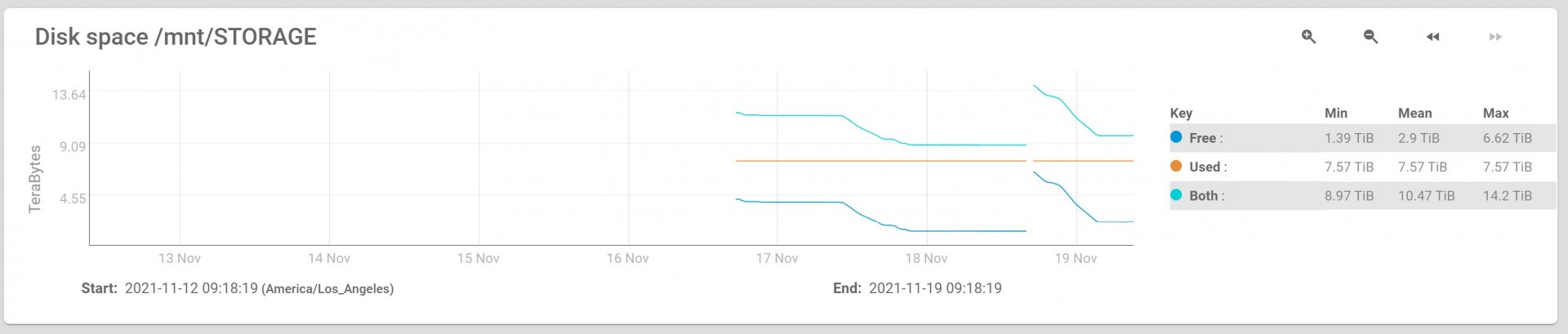
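For anyone looking into the same gap between used and refer: ZFS splits a dataset's used value into usedbydataset, usedbysnapshots, usedbychildren and usedbyrefreservation, so a per-component breakdown can show where the extra space is being charged. Below is a minimal Python sketch, assuming the input is one tab-separated line from `zfs list -Hp -o name,used,usedbydataset,usedbysnapshots,usedbychildren,usedbyrefreservation`. The function names and the sample line are made up for illustration and are not output from this pool.

```python
# Columns expected after the dataset name, in the order passed to -o.
FIELDS = [
    "used",
    "usedbydataset",
    "usedbysnapshots",
    "usedbychildren",
    "usedbyrefreservation",
]


def parse_space_line(line):
    """Parse one tab-separated line of 'zfs list -Hp' output into a dict."""
    parts = line.rstrip("\n").split("\t")
    if len(parts) != len(FIELDS) + 1:
        raise ValueError("expected name plus %d columns" % len(FIELDS))
    row = {"name": parts[0]}
    for field, value in zip(FIELDS, parts[1:]):
        # "-" marks a property that does not apply; treat it as zero here.
        row[field] = 0 if value == "-" else int(value)
    # Whatever the four usedby* components do not explain.
    accounted = sum(row[f] for f in FIELDS[1:])
    row["unaccounted"] = row["used"] - accounted
    return row


def largest_component(row):
    """Return the name of the usedby* property holding the most space."""
    return max(FIELDS[1:], key=lambda f: row[f])


if __name__ == "__main__":
    # Hypothetical sample line, NOT taken from the pool in this thread.
    sample = "tank\t15000\t7000\t0\t8000\t0\n"
    row = parse_space_line(sample)
    print(row["name"], "largest component:", largest_component(row))
    print("unaccounted bytes:", row["unaccounted"])
```

If usedbychildren turns out to be the big number on the top-level dataset, running the same listing recursively with -r would narrow it down to the child that holds the space.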

Here is the total space graph from while I was recovering the data from the clone; the jump is after I added new drives and finished transferring.

It looks like the total space goes down, while the used space remains the same, which doesn't seem right to me:

Thank you for any help or ideas!
