Hello,

I'm having a problem importing a pool that has unfortunately lost all of its LOG disks.

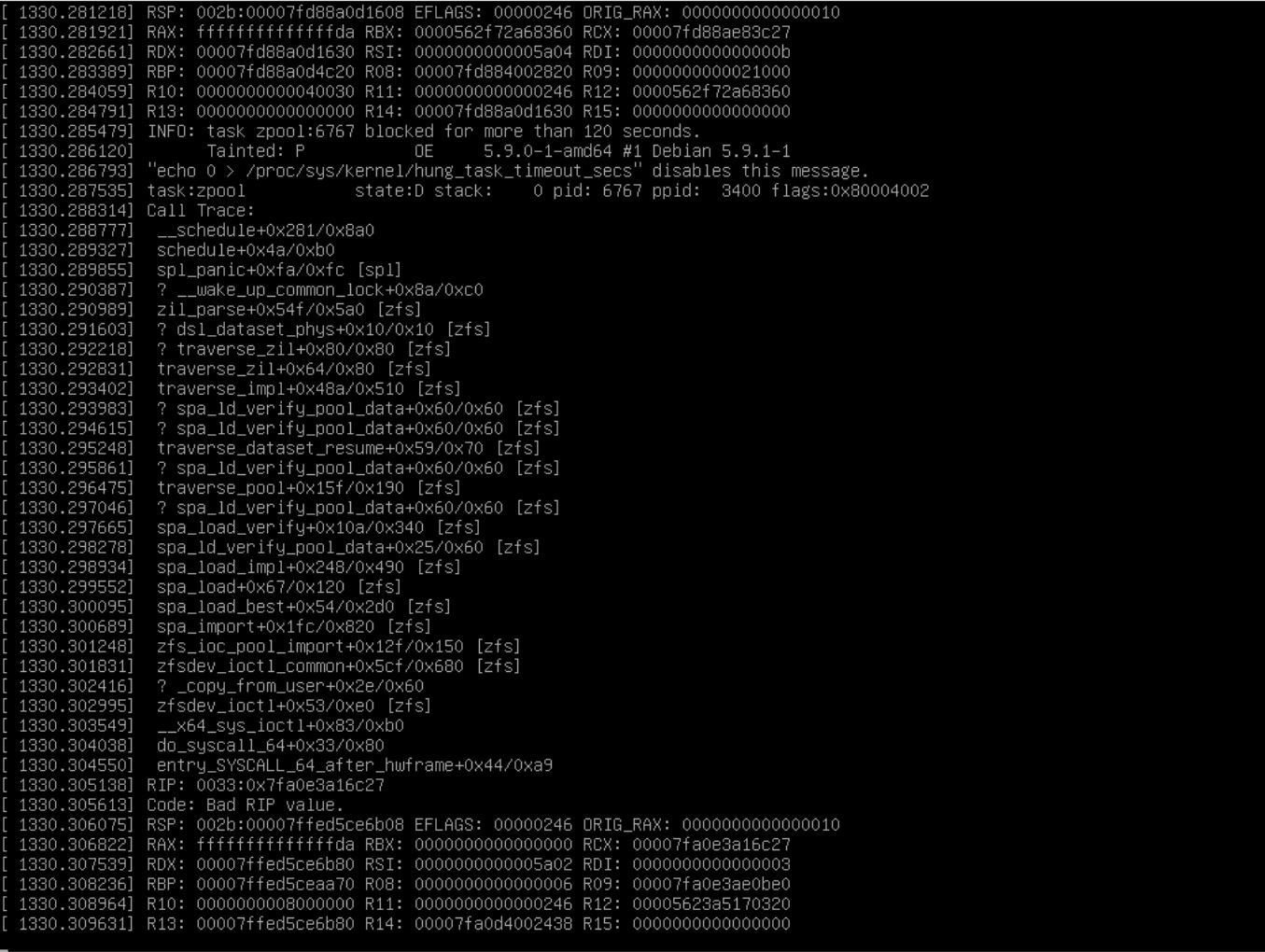

This is the output:

```
---------------------------------------------------------------------------------------------------------------
truenas# zpool import Primary-Pool
cannot import 'Primary-Pool': pool was previously in use from another system.
Last accessed by lvmtruenas.localdomain (hostid=361b7376) at Sun Feb 21 13:53:04 2021
The pool can be imported, use 'zpool import -f' to import the pool.
---------------------------------------------------------------------------------------------------------------
truenas# zpool import Primary-Pool -f
The devices below are missing or corrupted, use '-m' to import the pool anyway:
    mirror-2 [log]
      6170d7e7-5025-11eb-8786-23dd6f7a6bf1
      617847e3-5025-11eb-8786-23dd6f7a6bf1
      307d3791-4562-492c-890e-538e470bb9e6
cannot import 'Primary-Pool': one or more devices is currently unavailable
---------------------------------------------------------------------------------------------------------------
truenas# zpool import Primary-Pool -f -m
2021 Feb 22 11:04:23 truenas VERIFY(!claimed || !(zh->zh_flags & ZIL_CLAIM_LR_SEQ_VALID) || (max_blk_seq == claim_blk_seq && max_lr_seq == claim_lr_seq) || (decrypt && error == EIO)) failed
2021 Feb 22 11:04:23 truenas PANIC at zil.c:423:zil_parse()
```

Is it possible to save the contents of the pool?

Many, many thanks