I'm looking for some assistance with troubleshooting poor read performance over NFS with my setup.

I serve the datastores on my SSD pool to ESXi over NFS (no compression, no dedupe, sync enabled, 128K recordsize).

iperf tests saturate the full 10Gb/s link between the ESXi host and FreeNAS, and also between the VMs and FreeNAS.
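For reference, here is a rough sketch of the raw ceiling I'd expect from a single 10GbE link. It assumes decimal units, one link carrying the NFS traffic, and no Ethernet/TCP/NFS overhead; the function name `link_ceiling_mbs` is just something I made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: nominal link speed in Gb/s -> raw ceiling in MB/s.
# Decimal units (1 Gb = 1000 Mb, 8 bits per byte); protocol overhead is ignored.
link_ceiling_mbs() {
    echo $(( $1 * 1000 / 8 ))
}

echo "10GbE ceiling: $(link_ceiling_mbs 10) MB/s"
```

That works out to 1250 MB/s, so that is roughly the best case for sequential reads from a VM over one link, before any overhead.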

Disk performance testing directly on FreeNAS for the SSD pool shows:

`dd if=/dev/zero of=/mnt/vmtank1/test/testfile.2 bs=64M count=5000`: 1,787 MB/s writes

`dd if=/mnt/vmtank1/test/testfile.2 of=/dev/zero bs=64M count=5000`: 3,269 MB/s reads
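To sanity-check that the local read number is not just cache, here is a quick sketch of the test file size versus RAM. It assumes `bs=64M` means 64 MiB blocks and treats the 32GB of RAM in the FreeNAS box as 32 GiB; `dd_total_gib` is a made-up helper name.

```shell
#!/bin/sh
# Hypothetical helper: total size of a dd run in whole GiB,
# given the block size in MiB and the block count.
dd_total_gib() {
    echo $(( $1 * $2 / 1024 ))
}

total_gib=$(dd_total_gib 64 5000)   # bs=64M count=5000, as in the dd commands
ram_gib=32                          # FreeNAS RAM, treated as GiB (assumption)

echo "dd test file: about ${total_gib} GiB, RAM: ${ram_gib} GiB"
if [ "$total_gib" -gt "$ram_gib" ]; then
    echo "test file is larger than RAM"
fi
```

The file comes out at several times the size of RAM, so I'd assume the local reads are not being served purely from ARC.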

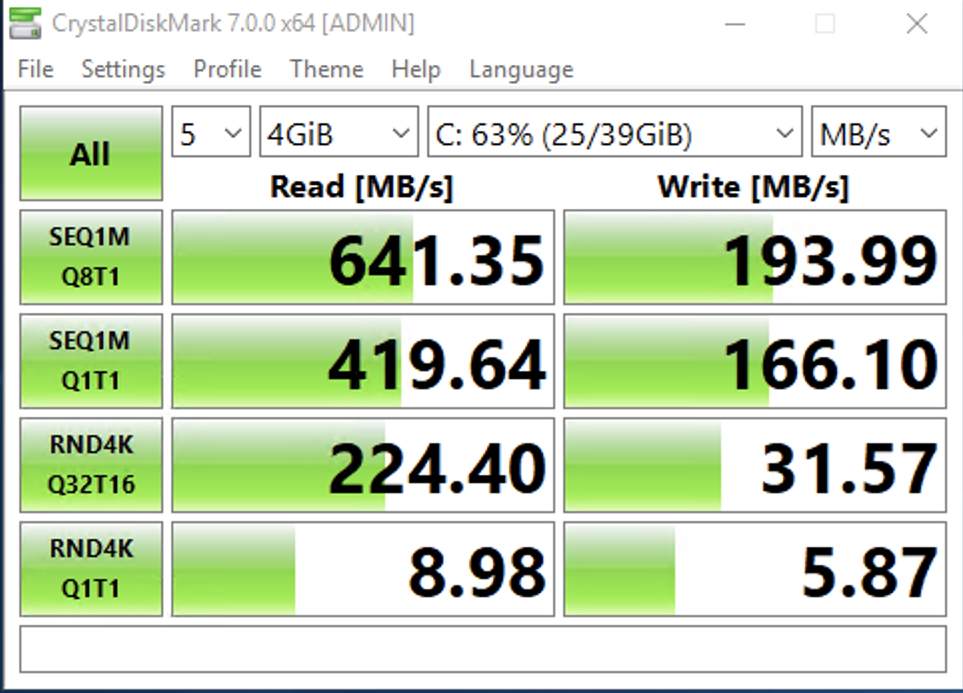

My test bed is a Windows 10VM with 12 vCPUs and 16GB RAM. My CrystalDisk scores look like the following:

What am I missing here? Am I running out of RAM? I understand the slow writes are due to sync, and I have an Optane on the way to replace my current SLOG, but I would expect reads to max out the link. What else can I do to narrow down the cause?

FreeNAS:

- Supermicro CSE846

- 2x E5-2620 Xeons

- 32GB ECC RAM

- BPN-SAS2-846EL1

- Intel X520-DA 10GbE NIC

- Pool1 - Movies/Storage: RAIDZ2, 6x 8TB WD Reds

- Pool2 - VM Datastores: 3x mirrored pairs of Intel DC S3700 SSDs, plus an Intel DC S3700 100GB SLOG

ESXi host (R720):

- 2x E5-2697v2

- 64GB ECC RAM

- Dual Broadcom 10GbE NIC

- No local storage
