Hi,

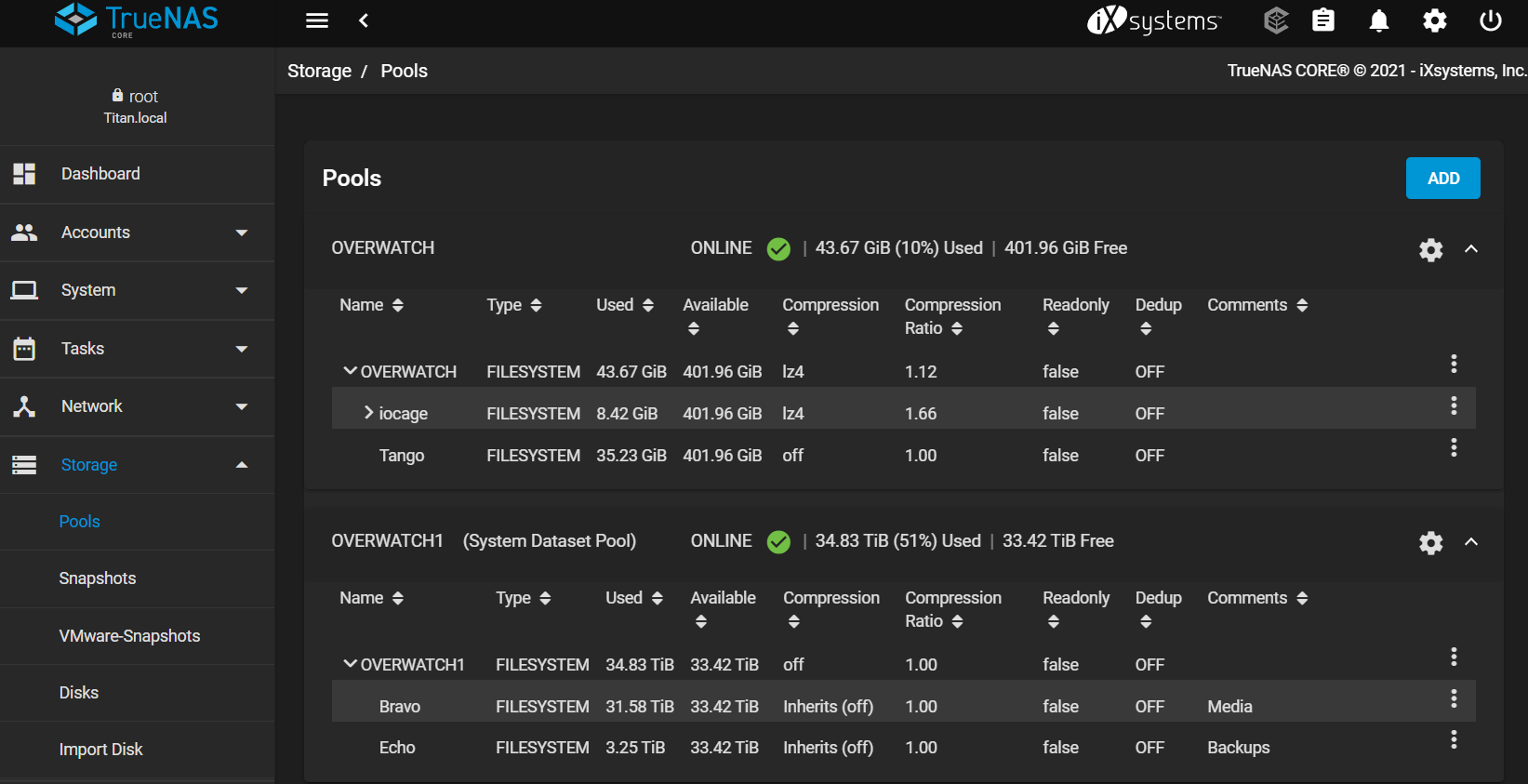

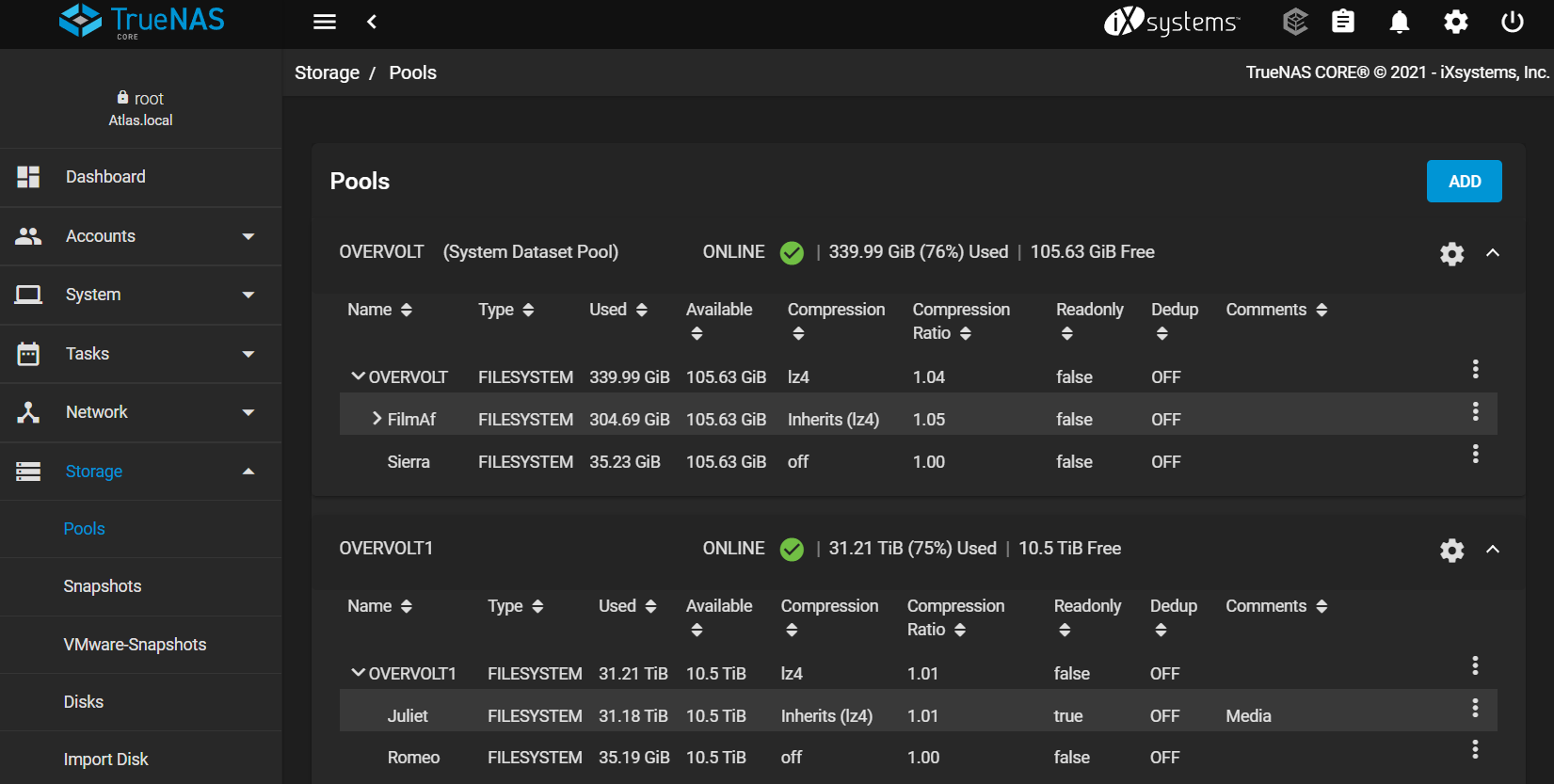

I'm looking at some low figures and I do not know what to make of them.

iperf reports just under 15 Gbit/s between two TrueNAS 12.0-U6 servers, but file transfers are 15 to 20 times slower.

Code:

    iperf3 -i 10 -w 1M -t 60 -c 192.168.4.7
    Connecting to host 192.168.4.7, port 5201
    [  5] local 192.168.4.5 port 62313 connected to 192.168.4.7 port 5201
    [ ID] Interval           Transfer     Bitrate         Retr  Cwnd
    [  5]   0.00-10.00  sec  16.7 GBytes  14.4 Gbits/sec    0   1.77 MBytes
    [  5]  10.00-20.00  sec  16.2 GBytes  13.9 Gbits/sec    0   1.77 MBytes
    [  5]  20.00-30.00  sec  17.3 GBytes  14.8 Gbits/sec    0   1.77 MBytes
    [  5]  30.00-40.00  sec  17.1 GBytes  14.7 Gbits/sec    0   1.77 MBytes
    [  5]  40.00-50.00  sec  16.3 GBytes  14.0 Gbits/sec    0   1.77 MBytes
    [  5]  50.00-60.00  sec  16.5 GBytes  14.2 Gbits/sec    0   1.77 MBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-60.00  sec   100 GBytes  14.3 Gbits/sec    0             sender
    [  5]   0.00-60.22  sec   100 GBytes  14.3 Gbits/sec                  receiver
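As a sanity check on the iperf3 summary, the reported bitrate can be recomputed from the transferred volume and the elapsed time. This is only a rough sketch: it assumes iperf3's "GBytes" are binary (1024^3 bytes) while "Gbits/sec" is decimal (10^9 bits), which is consistent with the figures in the output. The helper name `gbits_per_sec` is made up for illustration.

```python
GIB = 2**30  # assumption: iperf3 "GBytes" means 1024^3 bytes


def gbits_per_sec(gibibytes: float, seconds: float) -> float:
    """Convert a transferred volume in GiB over a time span to decimal Gbit/s."""
    bits = gibibytes * GIB * 8
    return bits / seconds / 1e9


# Summary line from the run: 100 GBytes in 60.00 seconds (sender side).
rate = gbits_per_sec(100, 60.0)
print(f"{rate:.1f} Gbit/s")
```

The result matches the 14.3 Gbits/sec summary line, so the network figure itself looks internally consistent.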

In the chart above you can see 5 bumps. They represent:

1. iperf reporting just under 15 Gbit/s between the two TrueNAS servers

2. (2 SSDs mirrored) to (2 SSDs mirrored) -> 0.76 to 0.59 Gbit/s

3. (2 SSDs mirrored) to (5 14TB HDD in Z2) -> 1.10 to 0.98 Gbit/s

4. Repeat of #2 above -> 0.72 to 0.61 Gbit/s

5. (9 16TB HDD in Z3) to (5 14TB HDD in Z2) -> 0.77 to 0.62 Gbit/s

- None of the datasets above use compression

- All transfers were done over NFS, using cp to copy a single 35 GB file

- cc0 is a dedicated 40 GbE interface, used only for traffic between the two servers (direct card-to-card link)

- em0 is the regular 1 GbE interface

- The HDDs use LSI SAS9211-8i card(s)

- The SSDs use motherboard SATA connectors

- Both servers have 32 GB of memory; one has an i7-11700K and the other an i7-3770K

- The 14 TB drives are MG07ACA14TE (248 MiB/s)

- The 16 TB drives are MG08ACA16TE (262 MiB/s)
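To put the numbers side by side, here is a rough sketch that converts the transfer rates to MiB/s and computes how far each one falls short of the iperf result. It assumes the chart figures are in Gbit/s (which fits the "15 to 20 times slower" estimate) and uses the 14.3 Gbit/s average from the iperf3 run. The function names and labels are illustrative only.

```python
IPERF_GBITS = 14.3  # average bitrate from the iperf3 run

# (low, high) observed rates per test; assumed to be in Gbit/s
transfers = {
    "SSD mirror -> SSD mirror": (0.59, 0.76),
    "SSD mirror -> 5 x 14TB Z2": (0.98, 1.10),
    "SSD mirror -> SSD mirror (repeat)": (0.61, 0.72),
    "9 x 16TB Z3 -> 5 x 14TB Z2": (0.62, 0.77),
}


def gbits_to_mib_per_sec(gbits: float) -> float:
    """Convert decimal Gbit/s to MiB/s (1 MiB = 2**20 bytes)."""
    return gbits * 1e9 / 8 / 2**20


def slowdown(link_gbits: float, observed_gbits: float) -> float:
    """How many times slower the observed rate is than the link rate."""
    return link_gbits / observed_gbits


for name, (low, high) in transfers.items():
    print(
        f"{name}: {gbits_to_mib_per_sec(low):.0f}-{gbits_to_mib_per_sec(high):.0f} MiB/s, "
        f"{slowdown(IPERF_GBITS, high):.0f}x-{slowdown(IPERF_GBITS, low):.0f}x slower than iperf"
    )
```

Under these assumptions every transfer stays well below the 248 MiB/s listed for a single 14 TB drive, so the copies are slower than even one disk's sequential rate.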

Any idea why the performance is this low?

Thanks!!

EDIT: Adding the storage configuration for both servers. OVERWATCH and OVERVOLT are simple SSD mirrors. OVERWATCH1 and OVERVOLT1 are HDD pools: a 9-disk RAIDZ3 and a 5-disk RAIDZ2, respectively. Test #2 above is the transfer between the two SSD mirrors.
