I have 6 drives that were used in a previous zpool and that seem to be holding onto old information (or the FreeNAS box is holding onto it). I have destroyed all of the multipaths and have also run dd in several ways: first on the first few hundred sectors, then on the entire disk until it completed. I even went into the /dev folder and deleted all of the "da" devices. No matter which method or combination of methods I use, every one of the multipaths still shows up.

What is interesting is that I get 12 da numbers for the 6 disks. I suppose that is because I had previously set up the ZFS equivalent of RAID10 (a mirror of 2 striped sets). What am I doing wrong, or am I doing anything wrong at all? The multipaths do not hinder me from creating any type of vdev I want, but they are always there, nagging me and saying, "haha, you don't understand me". I truly thought that multipathing had to do with iSCSI and nothing else.

Among other things, I have deleted all iSCSI configuration (portal, initiator, target, extent, and relationships), turned off the iSCSI service, and restarted several times.

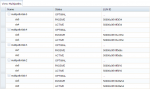

Here is a copy of my multipath information (with 1 disk removed because I was trying to wipe it using alternative methods):
