sfcredfox · Patron · Joined Aug 26, 2014 · 340 messages

Community,

I'm looking for a few opinions on the best way to gauge my system's maximum performance as a storage target for VMware. There are a lot of opinions about performance testing: some say it's artificial and worthless, while others suggest it's useful for getting a general idea of whether your system is configured well or badly. I'm hoping to go with the latter on this one and punish the system to see how far I can push it. I'm looking to test the system's total potential throughput (though the test will likely be artificial).

I've seen a lot of people run CrystalDiskMark on an initiator system to test a target LUN on their FreeNAS system. All of my physical hosts run ESX, so that's not really an option. I was running CrystalDiskMark in a single VM, but the results seemed limited more by the virtual machine/hypervisor than by the storage system, so I started running the same test on multiple VMs simultaneously.

I've worked my way up to running six at once, across two disk pools. Each time I add two more VMs, the aggregate numbers climb higher than before, even though the result in each individual VM may drop. I'm trying to step back and figure out whether there's any useful information here, or whether I'm missing something key and the data means nothing.
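One way to tell whether the extra VMs are revealing real headroom is to tabulate the aggregate for each concurrency level and look for the point where the total stops growing. A minimal Python sketch, assuming each VM's figure (e.g. sequential read in MB/s) is transcribed by hand; `VmResult`, `aggregate_by_run` and `find_plateau` are hypothetical helpers, and the 5% threshold is an arbitrary choice.

```python
from dataclasses import dataclass


@dataclass
class VmResult:
    """One benchmark figure transcribed from a single VM (hypothetical structure)."""
    vm: str
    pool: str
    mb_per_s: float  # e.g. a sequential-read result in MB/s


def aggregate_by_run(runs: dict[int, list[VmResult]]) -> dict[int, tuple[float, float]]:
    """Map each concurrency level (VM count) to (total MB/s, mean MB/s per VM)."""
    summary = {}
    for vm_count, results in sorted(runs.items()):
        total = sum(r.mb_per_s for r in results)
        summary[vm_count] = (total, total / len(results))
    return summary


def find_plateau(summary: dict[int, tuple[float, float]], min_gain: float = 0.05):
    """Return the first VM count whose total grew by less than min_gain
    (as a fraction of the previous level's total), or None if totals keep growing."""
    levels = sorted(summary)
    for prev, cur in zip(levels, levels[1:]):
        prev_total, cur_total = summary[prev][0], summary[cur][0]
        if (cur_total - prev_total) / prev_total < min_gain:
            return cur
    return None
```

If `find_plateau` returns a level, that suggests something shared (FC links, a pool, or CPU) has become the limit around that point. Grouping the results by `pool` before aggregating would show whether one pool saturates before the other.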

I'd like to get into tuning ARC/L2ARC performance someday if needed. I've been reading ARC/L2ARC posts for a while to understand tuning a little better, but I know I can't do that while blasting the system with an unrealistic workload, so I won't worry about it yet.
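Before any tuning, it may help to know how much of each benchmark run was served from cache; with 72GB of RAM, a small test file can fit entirely in ARC. A minimal Python 3 sketch, assuming you save the output of `sysctl kstat.zfs.misc.arcstats` on the FreeNAS box to a file before and after a run, then parse both on any machine with Python 3. The helper names are hypothetical; `hits`, `misses`, `l2_hits` and `l2_misses` are the arcstats counters being read.

```python
def parse_arcstats(text: str) -> dict[str, int]:
    """Parse `sysctl kstat.zfs.misc.arcstats` output, one "name: value" pair per line."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        value = value.strip()
        if sep and value.isdigit():
            # Keep only the last component, e.g. "...arcstats.l2_hits" -> "l2_hits".
            stats[name.strip().rsplit(".", 1)[-1]] = int(value)
    return stats


def delta(before: dict[str, int], after: dict[str, int]) -> dict[str, int]:
    """Counters are cumulative since boot, so subtract a pre-test snapshot."""
    return {k: v - before.get(k, 0) for k, v in after.items()}


def hit_ratio(stats: dict[str, int], hits_key: str, misses_key: str):
    """Fraction of lookups that hit, or None if there were no lookups."""
    hits, misses = stats.get(hits_key, 0), stats.get(misses_key, 0)
    total = hits + misses
    return hits / total if total else None


def report(before_text: str, after_text: str) -> list[str]:
    """Summarize ARC and L2ARC hit ratios for the interval between two snapshots."""
    d = delta(parse_arcstats(before_text), parse_arcstats(after_text))
    lines = []
    for label, hits_key, misses_key in (("ARC", "hits", "misses"),
                                        ("L2ARC", "l2_hits", "l2_misses")):
        r = hit_ratio(d, hits_key, misses_key)
        lines.append(f"{label}: no lookups" if r is None else f"{label}: {r:.1%} hit ratio")
    return lines
```

Usage: `print("\n".join(report(open("before.txt").read(), open("after.txt").read())))`. A very high ARC hit ratio during a read test would suggest the result reflects RAM rather than the disk pools.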

What are your thoughts on finding the highest potential throughput in this scenario?

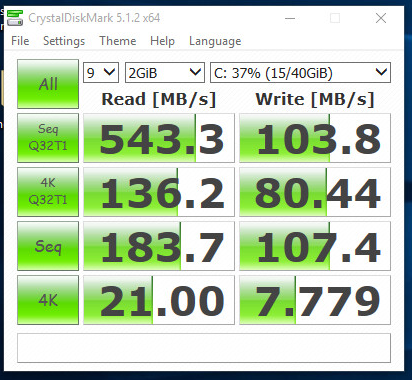

This was a test on a single VM

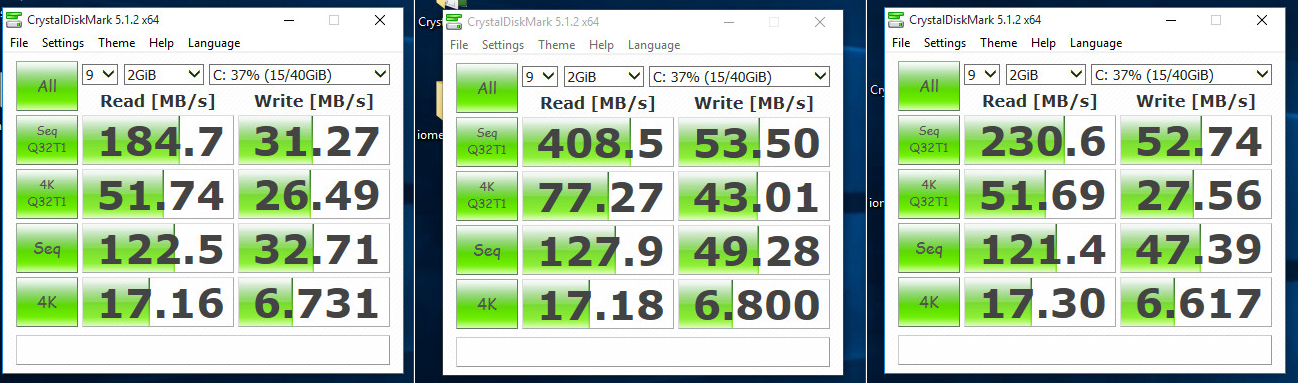

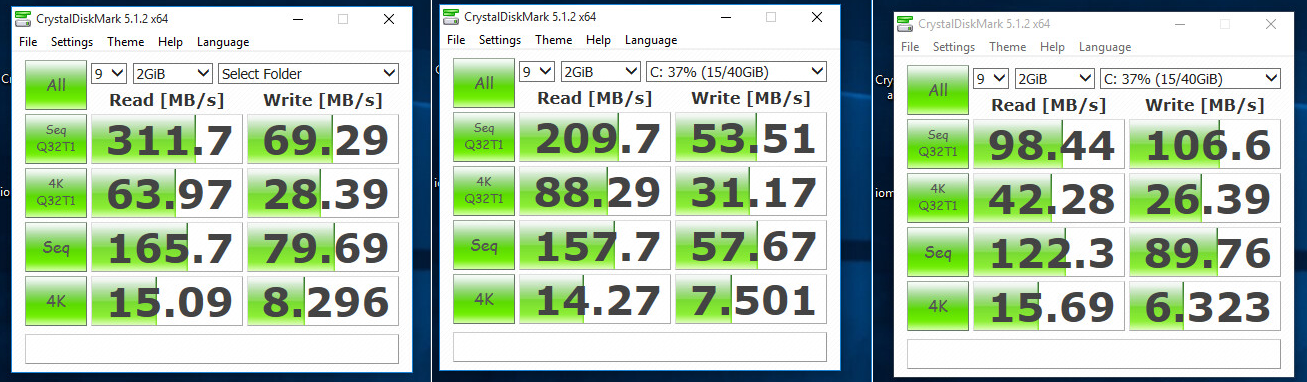

This was a group test on both ESX hosts connected to the FreeNAS system. These six tests ran simultaneously:

FreeNAS is FC-connected to two fabrics at 4 Gb/s, each for an ESX host. Each host is configured for round robin, so both links are active for I/O, giving each host a potential ~8 Gb/s minus overhead.
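To judge whether the VM results are approaching the FC links rather than the pools, it helps to express the ~8 Gb/s figure in the MB/s units CrystalDiskMark reports. A minimal Python sketch, assuming 4GFC's 4.25 GBaud line rate with 8b/10b encoding and the commonly quoted 400 MB/s per direction per link; `utilization` and the other helper names are hypothetical.

```python
LINE_RATE_GBAUD = 4.25         # 4GFC signalling rate
ENCODING_EFFICIENCY = 8 / 10   # 8b/10b: 8 data bits per 10 line bits
NOMINAL_MB_S_PER_LINK = 400    # commonly quoted 4GFC throughput, per direction


def encoded_ceiling_mb_s(line_rate_gbaud: float, efficiency: float) -> float:
    """Data rate left after line encoding, in MB/s (10**6 bytes/s), before framing."""
    return line_rate_gbaud * 1e9 * efficiency / 8 / 1e6


def host_ceiling_mb_s(active_links: int, per_link_mb_s: float = NOMINAL_MB_S_PER_LINK) -> float:
    """Round robin over active paths: at best the per-link rates add up."""
    return active_links * per_link_mb_s


def utilization(measured_mb_s: float, active_links: int) -> float:
    """Fraction of the nominal multi-link ceiling a benchmark result reached."""
    return measured_mb_s / host_ceiling_mb_s(active_links)
```

With both paths active, `host_ceiling_mb_s(2)` is 800 MB/s per direction for one host. If the hardware list is read correctly, the FreeNAS side has a single dual-port QLE2462, so the six-VM group test across both hosts would share those same two target ports.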

The FreeNAS system has two disk pools, each with its own SLOG and L2ARC. More details below:

FreeNAS 9.3-Stable 64-bit

FreeNAS Platform:

SuperMicro 826 (8XDTN+)

(x2) Intel(R) Xeon(R) CPU E5200 @ 2.27GHz

72GB RAM ECC (Always ECC!)

APC3000 UPS (Always a UPS!)

IBM M1015 (IT Mode) HBA (Port 0) -> BPN-SAS2-826EL1 (SuperMicro SAS expander backplane)

IBM M1015 (IT Mode) HBA (Port 1) -> SFF-8088 connected -> HP MSA70 3G 25 bay drive enclosure

HP H221 SAS HBA (Port 0) -> SFF-8088 connected -> HP D2700 6G 25 bay drive enclosure

QLogic QLE2462 Dual Port 4Gb PCIe x4 Fibre Channel HBA

Intel PRO/1000 MT Dual Port Card (2 total ports for iSCSI)

Intel PRO/1000 (integrated) for CIFS

SuperMicro IPMI

Pool1 (VM Datastore) -> 24x 3G 146GB 10K SAS in 12 vdevs [Mirrors]

Pool2 (VM Datastore) -> 12x 6G 300GB 10K SAS in 6 vdevs [Mirrors]

Pool3 (Media Storage) -> 8x 3G 2TB 7200 RPM SATA in 1 vdev [RAIDZ2]

Two Intel 3500 SSDs: SLOGs (one for each VMware datastore pool)

Two SSDs: L2ARC (one for each VMware datastore pool)
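The pool layouts above allow a rough spindle-only ceiling to compare against the benchmark results. A Python sketch under stated assumptions: 2-way mirrors, capacity before ZFS overhead, and the common rule of thumb that a vdev delivers roughly one disk's random-write IOPS. Per-disk IOPS is left as a parameter (the post gives no figure), and `Pool`, `usable_gb` and `rough_random_iops` are hypothetical names. ARC, L2ARC and the SLOGs can make measured numbers differ substantially from these estimates.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    name: str
    disks: int
    disk_gb: int
    layout: str  # "mirror" (2-way) or "raidz2"
    vdevs: int


# Layouts as listed in the post.
POOLS = [
    Pool("Pool1", disks=24, disk_gb=146, layout="mirror", vdevs=12),
    Pool("Pool2", disks=12, disk_gb=300, layout="mirror", vdevs=6),
    Pool("Pool3", disks=8, disk_gb=2000, layout="raidz2", vdevs=1),
]


def usable_gb(p: Pool) -> int:
    """Raw data capacity, ignoring ZFS metadata, slop space and GB vs GiB."""
    if p.layout == "mirror":
        return p.vdevs * p.disk_gb  # one disk's worth per 2-way mirror
    if p.layout == "raidz2":
        return (p.disks - 2 * p.vdevs) * p.disk_gb  # two parity disks per vdev
    raise ValueError(f"unknown layout: {p.layout}")


def rough_random_iops(p: Pool, disk_iops: int) -> tuple[int, int]:
    """(read, write) rule of thumb: each vdev writes at about one disk's IOPS;
    mirror reads can be spread across every disk, RAIDZ reads cannot."""
    write = p.vdevs * disk_iops
    read = p.disks * disk_iops if p.layout == "mirror" else write
    return read, write
```

For example, `rough_random_iops(POOLS[0], disk_iops)` with a per-disk figure you trust for 10K SAS gives Pool1's spindle-only estimate; random-I/O results far above it would suggest the test is being served from cache.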

Network Infrastructure:

Cisco SG200-26 (26 Port Gigabit Switch)

Separate VLANs for CIFS and storage traffic:

Two VLANs/subnets for iSCSI:

- em0 - x.x.101.7/24

- em1 - x.x.102.7/24

- itb - x.x.0.7/24