Is there a way to make the 'Pool Status' GUI screen show meaningful information about pool members, rather than just the disk each one resides on?

As it stands, this screen does not necessarily match the 'config' section of the `zpool status` output.

Running TrueNAS-13.0-U3.1

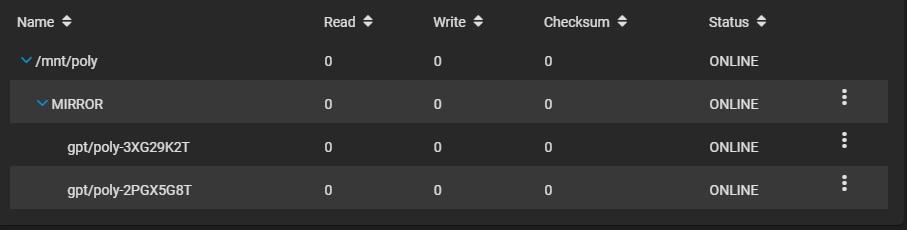

Yesterday I was experimenting with GPT labels and ended up with a pool named `poly` whose members were GPT-labelled partitions (its `zpool status` output is included below).

The web UI showed the same information.

The following screenshot is only a reconstruction: I had no reason to save one at the time, so I edited the page code to match what I was seeing. The only difference might be the '/dev/gpt/' prefix on the path; I'm not sure whether it was shown:

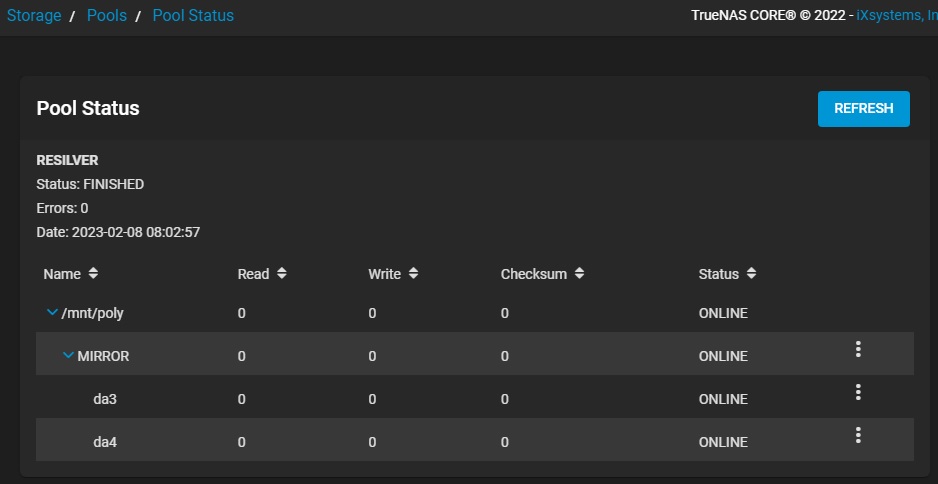

But today, after a reboot, it has for some reason reverted to the usual, useless view:

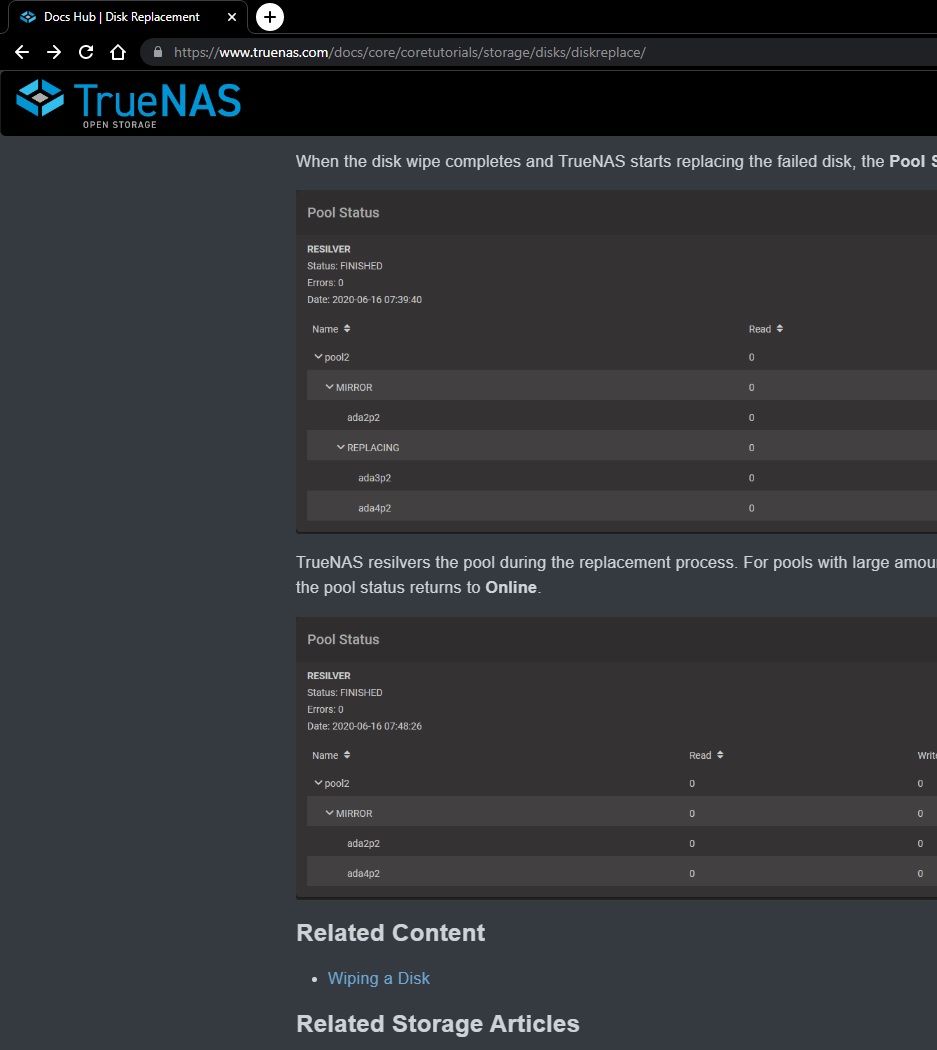

I also noticed that partitions are displayed in some cases, as demonstrated on the current Docs page:

which is a lot more informative than what I get on my instance.

I have spent the last few days preparing to import multiple pools that span the same disks using a custom partitioning scheme.

My goal was to put a GPT label following a `poolname-Serial` naming scheme on each partition to make CLI maintenance easier, since the web UI Disk screens are, for some reason, not partition-aware, even though the middleware itself uses only partitions and never whole disks. Moreover, I can't point the pool device Replace dialog at a partition instead of an empty disk.
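Until the web UI shows these labels, one CLI-side workaround is to read them straight from the 'config' section of `zpool status`. Below is a minimal, hypothetical POSIX shell/awk sketch (the function name `zpool_gpt_members` is illustrative and not part of TrueNAS). It assumes members appear in the NAME column as `gpt/<poolname>-<Serial>`, as in the `poly` pool, and prints the pool, the serial and the member state for each such label.

```sh
#!/bin/sh
# Hypothetical helper (not part of TrueNAS): read `zpool status` output on
# stdin and print "<pool> <serial> <state>" for every member whose label
# follows the gpt/<poolname>-<Serial> scheme.
zpool_gpt_members() {
  awk '
    # Remember the current pool name; `zpool status` may list several pools.
    $1 == "pool:" { pool = $2 }
    # Only look at device rows between "config:" and "errors:".
    /^config:/ { in_cfg = 1; next }
    /^errors:/ { in_cfg = 0 }
    in_cfg && $1 ~ /^gpt\// {
      label = substr($1, 5)               # drop the leading "gpt/"
      prefix = pool "-"
      if (substr(label, 1, length(prefix)) == prefix)
        print pool, substr(label, length(prefix) + 1), $2
      else
        print pool, label, $2, "(label does not match poolname-Serial)"
    }
  '
}
```

Usage: `zpool status poly | zpool_gpt_members`. For the pool in this post it should print `poly 3XG29K2T ONLINE` and `poly 2PGX5G8T ONLINE`. The serial is taken as everything after `<poolname>-`, so serials or pool names that themselves contain hyphens are still split correctly.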

I get the appliance part, but the whole disk management section contradicts itself logically and is so limiting that it is not even funny. It is the core of a storage management solution and should be comprehensive, not beat users into submission with a single theoretical "best practice" storage architecture out of the multitude of possible ways to use ZFS. The effort I am making at this point not to sound negative about my battles with the restrictive side of the TrueNAS ideology is ridiculous.

For reference, this is the `zpool status` output I had for that pool:

```
root@nas:~ # zpool status poly
  pool: poly
 state: ONLINE
  scan: scrub repaired 0B in 00:00:01 with 0 errors on Thu Feb 9 04:09:48 2023
config:

        NAME                   STATE     READ WRITE CKSUM
        poly                   ONLINE       0     0     0
          mirror-0             ONLINE       0     0     0
            gpt/poly-3XG29K2T  ONLINE       0     0     0
            gpt/poly-2PGX5G8T  ONLINE       0     0     0

errors: No known data errors
root@nas:~ #
```