VictorR


FreeNAS-11.2-U5 (Build Date: Jun 24, 2019 18:41)

45 Drives Storinator Q30 NAS:

SuperMicro X10DRL motherboard (Firmware Revision: 03.80 02/14/2019, BIOS Version: 3.1c 04/27/2019, Redfish Version: 1.0.1)

Dual Xeon E5-2620 v3 @ 2.4GHz

256GB RAM

2 x 125GB SSD boot drives

3 x dual-port Intel X540T2BLK 10GbE NICs

30 x 4TB WD Re drives (actually 25 Red, 5 Gold replacements)

Netgear XS728T 10GbE 24-port managed switch

Sonnet Twin 10G Thunderbolt-to-Ethernet converters

Test network with only the NAS and single 27” iMac client (1TB SSD)

After a couple of years, I finally got a chance to wipe the NAS clean and reconfigure its pools. We’d been running a 3 x 10 RAIDZ2 pool for 2K/4K raw video editing on six 2013 Mac Pros. We got smooth throughput to all stations, each handling multiple simultaneous raw 4K streams. When the NAS was under 30% full, speeds of 700-800MB/s in both directions were not unusual. At 50% full, it was still possible to get a steady 400-500MB/s.

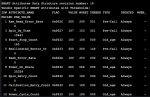

I deleted the pool to clear out the 80TB of already backed-up data, and recreated a 3 x 10 pool with compression turned off. SMART tests haven’t flagged anything.
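For reference, here is a rough sketch of what a 3 x 10 RAIDZ2 layout of 4TB disks gives you in space. It assumes each vdev loses exactly two disks’ worth of space to parity and ignores ZFS metadata, RAIDZ allocation padding and reserved (slop) space, so the usable figure FreeNAS reports will be somewhat lower.

```python
# Rough capacity arithmetic for a "3 x 10" RAIDZ2 pool of 4TB disks.
# Assumption: ignores ZFS metadata, RAIDZ allocation padding and reserved
# (slop) space, so the usable figure FreeNAS reports will be lower than this.

VDEVS = 3            # three vdevs...
DISKS_PER_VDEV = 10  # ...of ten disks each
PARITY = 2           # RAIDZ2: two disks' worth of parity per vdev
DISK_TB = 4          # 4TB drives, in decimal terabytes as marketed


def data_capacity_tb(vdevs: int, disks_per_vdev: int, parity: int, disk_tb: float) -> float:
    """Space left for data after parity, before any other ZFS overhead."""
    return vdevs * (disks_per_vdev - parity) * disk_tb


raw_tb = VDEVS * DISKS_PER_VDEV * DISK_TB
data_tb = data_capacity_tb(VDEVS, DISKS_PER_VDEV, PARITY, DISK_TB)
data_tib = data_tb * 10**12 / 2**40  # the same figure in binary TiB

print(f"raw: {raw_tb} TB, after parity: {data_tb} TB (~{data_tib:.1f} TiB)")
```

This prints `raw: 120 TB, after parity: 96 TB (~87.3 TiB)`.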

But there is a significant drop-off in read/write performance. So I upgraded to FreeNAS-11.2-U5 and flashed the latest BIOS/firmware/IPMI. Performance was still lower, so I replaced one of the 8GB RAM modules, which was throwing errors. That seems to have made no difference.

RAIDZ2 (3 x 10) from Jan 2016, for comparison:

[root@Q30] ~# dd if=/dev/zero of=/mnt/Q30/test.bin bs=2048k count=1024k

1048576+0 records in

1048576+0 records out

2199023255552 bytes transferred in 1098.190928 secs (2,002,405,228 bytes/sec) [commas added for ease of reading]

[root@q30 /mnt/Q30]# dd if=test.bin of=/dev/null bs=2048k count=1024k

1048576+0 records in

1048576+0 records out

2199023255552 bytes transferred in 1392.109668 secs (1,579,633,635 bytes/sec)
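The dd figures can be checked by hand. In dd the `k` suffix multiplies by 1024, so `bs=2048k count=1024k` writes 2 MiB x 1,048,576 blocks, i.e. 2 TiB, and throughput is simply bytes divided by elapsed seconds. The sketch below reproduces that arithmetic; treating the 256GB of RAM as 256 GiB is an assumption, used only to show that the test file is several times larger than RAM, so the read-back cannot be served mostly from cache.

```python
# Sketch: reproduce the arithmetic behind the dd summary lines.
# dd's "k" suffix means x1024, so bs=2048k is 2 MiB and count=1024k is 1,048,576 blocks.

BS = 2048 * 1024          # bs=2048k -> 2,097,152 bytes per block
COUNT = 1024 * 1024       # count=1024k -> 1,048,576 blocks
RAM_BYTES = 256 * 2**30   # assumption: the 256GB of RAM is taken as 256 GiB

TOTAL = BS * COUNT        # 2,199,023,255,552 bytes (2 TiB), as dd reports


def throughput(nbytes: int, secs: float) -> float:
    """Average throughput in bytes/sec: bytes moved divided by elapsed seconds."""
    return nbytes / secs


print(f"test file: {TOTAL:,} bytes, {TOTAL / RAM_BYTES:.0f}x the size of RAM")
# Elapsed times copied from the Jan 2016 dd runs; the results agree with dd's
# own bytes/sec figures to within rounding of the printed seconds.
print(f"2016 write: {throughput(TOTAL, 1098.190928):,.0f} bytes/sec")
print(f"2016 read:  {throughput(TOTAL, 1392.109668):,.0f} bytes/sec")
```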

Jul 28, 2019:

root@freenas:~ # dd if=/dev/zero of=/mnt/Q30/Test/test.bin bs=2048k count=1024k

1048576+0 records in

1048576+0 records out

2199023255552 bytes transferred in 1593.191745 secs (1,380,262,773 bytes/sec)

root@freenas: dd if=/mnt/Q30/Test/test.bin of=/dev/null bs=2048k count=1024k

1048576+0 records in

1048576+0 records out

2199023255552 bytes transferred in 3142.254662 secs (699,823,373 bytes/sec)

I'd expect some minor degradation in speed, but this is a big drop: compared with 2016, writes are down about 31% and reads about 56%.
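The size of the drop can be computed directly from the bytes/sec figures in the dd summaries. This small sketch copies those numbers in by hand and reports each change as a percentage; the conversion to MB/s uses decimal megabytes, which is an assumption about how the earlier MB/s figures were meant.

```python
# Compare the 2016 and 2019 dd results (bytes/sec, copied from the dd output).
results = {
    "write": (2_002_405_228, 1_380_262_773),  # (Jan 2016, Jul 28 2019)
    "read": (1_579_633_635, 699_823_373),
}

MB = 1_000_000  # assumption: decimal megabytes


def pct_change(before: float, after: float) -> float:
    """Percentage change from before to after (negative means slower)."""
    return (after - before) / before * 100


for op, (before, after) in results.items():
    print(f"{op}: {before / MB:,.0f} -> {after / MB:,.0f} MB/s "
          f"({pct_change(before, after):+.1f}%)")
```

Running it prints `write: 2,002 -> 1,380 MB/s (-31.1%)` and `read: 1,580 -> 700 MB/s (-55.7%)`.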

Can someone recommend other tests to run?
