Hello gents,

As the title says, post here if you are unsure.

I am going to get right to it so I don't waste people's time.

The issue at hand is that I am not getting the performance I should be getting from iSCSI MPIO, and I could use someone else's perspective.

Example: a direct file copy runs at 2.5 GB/s.

iSCSI performance is at 70 MB/s.

Can anyone share some pointers? It is as if the NICs were operating at 100 Mbit, but they are not.

I changed the round-robin (RR) path change setting from 1000 to 1 for testing.
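To make that setting concrete, here is a toy model of how I understand it. It assumes the RR path change value is the number of I/Os sent down one path before moving to the next one. The path names and the burst size are made up for illustration, and this is not how ESXi is actually implemented.

```python
from collections import Counter
from itertools import islice


def round_robin_paths(paths, ios_per_switch):
    """Yield the path used for each successive I/O.

    Toy model: stay on one path for ios_per_switch I/Os, then move on.
    """
    while True:
        for path in paths:
            for _ in range(ios_per_switch):
                yield path


def path_usage(paths, ios_per_switch, total_ios):
    """Count how many of total_ios I/Os land on each path."""
    return Counter(islice(round_robin_paths(paths, ios_per_switch), total_ios))


# Hypothetical path names standing in for the 4 iSCSI paths.
paths = ["path0", "path1", "path2", "path3"]
burst = 500  # assumed size of a short burst of I/Os

print("switch every 1000 I/Os:", dict(path_usage(paths, 1000, burst)))
print("switch every 1 I/O:    ", dict(path_usage(paths, 1, burst)))
```

In this model a short burst stays on a single path when the value is 1000 and is spread evenly over all four paths when the value is 1, which is why I lowered it for the test.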

FreeNAS 9.3

Build: FreeNAS-9.3-STABLE-201501241715

Platform: AMD FX(tm)-6300 Six-Core Processor

32 GB RAM

pool: zfs-pool8tb

state: ONLINE

scan: scrub repaired 0 in 6h21m with 0 errors on Sun Feb 1 09:21:25 2015

config:

NAME STATE READ WRITE CKSUM

zfs-pool8tb ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0 <--- I know I'm moving to raidz2 soon.

da5p1 ONLINE 0 0 0

da7p1 ONLINE 0 0 0

da4p1 ONLINE 0 0 0

da6p1 ONLINE 0 0 0

Seagate 7200 rpm drives with 32 MB cache, and I have 2 spares. They sit on an H200 reflashed to LSI firmware with no RAID; the devices are passed through. It is an 8-port 6 Gbit card with 6 Gbit drives.

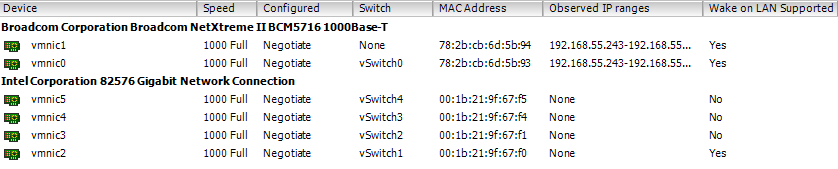

One Intel gigabit NIC for management and one quad-port gigabit NIC for iSCSI.

Each iSCSI port is on its own subnet: 50.22, 51.22, 52.22, 53.22.

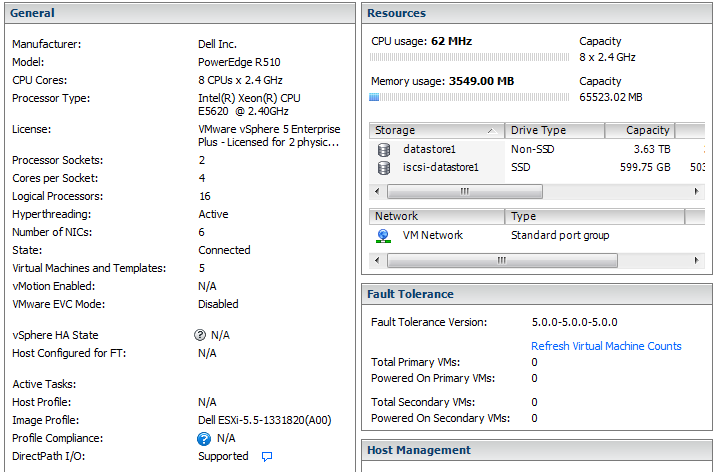

ESXi Host.

Networking

iSCSI MPIO: 8 NICs on a VLAN to isolate traffic, and all 4 IPs of the iSCSI NICs are in the portal. Management is on another link.

The LUN presented to ESXi uses round robin, with 4 targets, 1 device and 4 paths.

cisco

sw1>show vlan brief

VLAN Name Status Ports

---- -------------------------------- --------- -------------------------------

1 default active Gi0/1, Gi0/2, Gi0/11, Gi0/12

Gi0/13, Gi0/14, Gi0/15, Gi0/16

Gi0/17, Gi0/18, Gi0/19, Gi0/20

Gi0/21, Gi0/22, Gi0/23, Gi0/24

Gi0/25, Gi0/26, Gi0/27, Gi0/28

Gi0/29, Gi0/30, Gi0/31, Gi0/32

Gi0/33, Gi0/34, Gi0/35, Gi0/36

Gi0/37, Gi0/38, Gi0/39, Gi0/40

Gi0/41, Gi0/42, Gi0/43, Gi0/44

Gi0/45, Gi0/46, Gi0/47, Gi0/48

Gi0/49, Gi0/50, Gi0/51, Gi0/52

2 esxi-iscsi active Gi0/3, Gi0/4, Gi0/5, Gi0/6

Gi0/7, Gi0/8, Gi0/9, Gi0/10

There are 4 ports on each host, making 8 for iSCSI on the same VLAN.

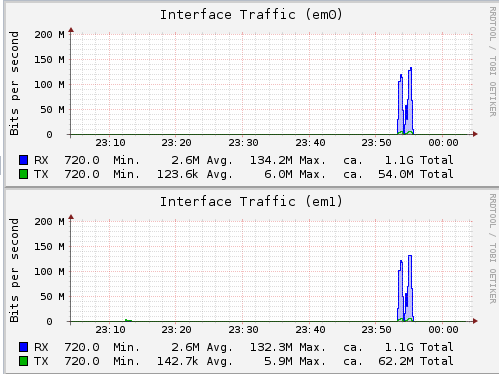

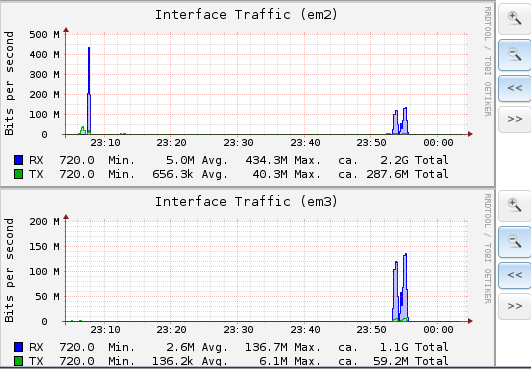

iSCSI traffic

All interfaces are at 1000baseT <full-duplex>. I checked the switch and it shows 1 Gbit links; I checked ESXi and it shows 1 Gbit links as well.
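For reference, a rough sketch of where 70 MB/s sits against the raw link speeds. It only divides line rate by 8 and ignores TCP and iSCSI overhead, so the real ceilings are somewhat lower; the variable names are mine.

```python
def mbit_to_mbyte_per_s(mbit_per_s):
    """Convert a raw line rate in Mbit/s to MB/s (8 bits per byte, no overhead)."""
    return mbit_per_s / 8


observed = 70.0                           # MB/s seen over iSCSI
fast_ethernet = mbit_to_mbyte_per_s(100)  # one 100 Mbit link
one_gbit = mbit_to_mbyte_per_s(1000)      # one 1 Gbit link
four_paths = 4 * one_gbit                 # four 1 Gbit paths, ideal MPIO

print(f"100 Mbit link:    {fast_ethernet:.1f} MB/s")
print(f"1 Gbit link:      {one_gbit:.1f} MB/s")
print(f"4 x 1 Gbit paths: {four_paths:.1f} MB/s")
print(f"observed is {observed / one_gbit:.0%} of one link, "
      f"{observed / four_paths:.0%} of four")
```

So 70 MB/s is well above what a 100 Mbit link could carry, but it is still below the raw rate of a single 1 Gbit path and far below four paths combined.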

What else should I be looking at?

gstat says that at 70 MB/s the drives are not even half busy.

Thanks in advance for any pointers.
