I need to investigate this problem and find out what is going on before I report a bug anywhere.

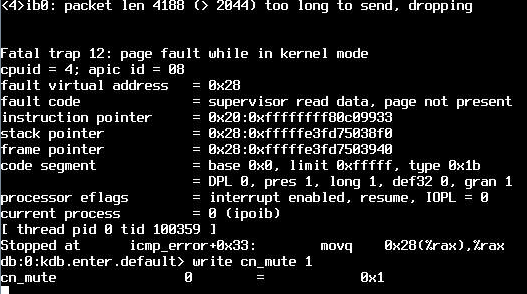

I analyzed the FreeNAS crash dumps in /data/crash/ and found that the last events logged before the kernel crashes look like this:

Code:

<118>Fri Sep 6 05:20:07 CEST 2019

<6>arp: 10.20.24.111 moved from 80:00:02:08:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c1 to 80:00:02:09:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c2 on ib0

<6>arp: 10.20.24.110 moved from 80:00:02:08:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:20:e3 to 80:00:02:09:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:20:e4 on ib0

<6>arp: 10.20.25.111 moved from 80:00:02:09:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c2 to 80:00:02:08:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c1 on ib1

<6>arp: 10.20.25.110 moved from 80:00:02:09:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:20:e4 to 80:00:02:08:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:20:e3 on ib1

<6>arp: 10.20.24.111 moved from 80:00:02:09:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c2 to 80:00:02:08:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c1 on ib0

<4>ib0: packet len 12380 (> 2044) too long to send, dropping

The behavior is the same every time: both 20-octet IPoIB link-layer addresses on all three Proxmox clients change from time to time.
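
To make the address changes easier to read, here is a minimal sketch that splits one of the 20-octet addresses from the log into its fields. It assumes the layout I know from RFC 4391 (1 flags octet, 3-octet queue pair number, 16-octet GID) and that the 0x80 flag bit marks connected-mode capability, as in RFC 4755. The function name `decode_ipoib_lladdr` is hypothetical and only used for illustration.

```python
import ipaddress


def decode_ipoib_lladdr(lladdr: str) -> dict:
    """Split a colon-separated 20-octet IPoIB link-layer address into fields.

    Assumed layout (RFC 4391): 1 flags octet, 3-octet QPN, 16-octet GID.
    """
    octets = bytes(int(part, 16) for part in lladdr.split(":"))
    if len(octets) != 20:
        raise ValueError(f"expected 20 octets, got {len(octets)}")
    return {
        "flags": octets[0],
        # Assumption: bit 0x80 advertises connected-mode (RC) support.
        "connected_mode_capable": bool(octets[0] & 0x80),
        "qpn": int.from_bytes(octets[1:4], "big"),
        # The GID is 128 bits, so it can be printed like an IPv6 address.
        "gid": str(ipaddress.IPv6Address(octets[4:])),
    }


# The two addresses that 10.20.24.111 alternates between in the log above.
old = decode_ipoib_lladdr(
    "80:00:02:08:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c1"
)
new = decode_ipoib_lladdr(
    "80:00:02:09:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c2"
)
print(old)
print(new)
```

Decoded this way, the two addresses differ in both the queue pair number and the last octet of the GID, while the flags octet stays the same.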

After that, it looks as if the FreeBSD server sometimes uses Datagram mode for the new connections and then tries to send a large packet over such a connection, possibly one left over from a previous client request/connection in Connected mode.

The root cause seems to be the changing IP address/link-layer address mappings on the Proxmox client side and, secondarily, the Datagram mode behavior on the FreeBSD side.
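
The pattern described above can be pulled out of a crash dump message buffer with a small script. This is only a sketch: it assumes the log lines look exactly like the excerpt above, and the names `summarize`, `ARP_MOVE` and `TOO_LONG` are made up for this example. The 2044-byte limit in the drop message matches the usual Datagram mode MTU (2048-byte InfiniBand MTU minus the 4-byte IPoIB header).

```python
import re
from collections import defaultdict

# Patterns modelled on the kernel messages quoted in the post.
ARP_MOVE = re.compile(
    r"arp: (?P<ip>\S+) moved from (?P<old>\S+) to (?P<new>\S+) on (?P<iface>\S+)"
)
TOO_LONG = re.compile(
    r"(?P<iface>\w+): packet len (?P<length>\d+) \(> (?P<limit>\d+)\) too long to send"
)


def summarize(log_text):
    """Count ARP moves per IP, collect the addresses seen, and list drops."""
    moves = defaultdict(int)
    addresses = defaultdict(set)
    drops = []
    for line in log_text.splitlines():
        match = ARP_MOVE.search(line)
        if match:
            moves[match["ip"]] += 1
            addresses[match["ip"]].update({match["old"], match["new"]})
            continue
        match = TOO_LONG.search(line)
        if match:
            drops.append(
                (match["iface"], int(match["length"]), int(match["limit"]))
            )
    return moves, addresses, drops


LOG = """\
<6>arp: 10.20.24.111 moved from 80:00:02:08:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c1 to 80:00:02:09:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c2 on ib0
<6>arp: 10.20.24.111 moved from 80:00:02:09:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c2 to 80:00:02:08:fe:80:00:00:00:00:00:00:00:02:c9:03:00:09:9f:c1 on ib0
<4>ib0: packet len 12380 (> 2044) too long to send, dropping
"""

moves, addresses, drops = summarize(LOG)
for ip, count in moves.items():
    print(f"{ip}: {count} moves between {len(addresses[ip])} addresses")
for iface, length, limit in drops:
    print(f"{iface}: dropped {length}-byte packet, limit {limit}")
```

An IP that keeps moving between the same two addresses, followed by an oversized-packet drop on the same interface, is the sequence seen before each crash.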

I will also report this in the Proxmox forum. Maybe somebody has an idea what is happening with the link-layer addresses and how to avoid it.

Here are more details of the setup:

On the FreeNAS side I'm using a separate subnet for each IB port, and the iSCSI setup has two portals, one for each IP (10.20.24.100/24 & 10.20.25.100/24).

The kernel of FreeNAS:

Code:

root@freenas1[/data/crash]# uname -a

FreeBSD freenas1.getcom.de 11.2-STABLE FreeBSD 11.2-STABLE #0 r325575+6aad246318c(HEAD): Mon Jun 24 17:25:47 UTC 2019 root@nemesis:/freenas-releng/freenas/_BE/objs/freenas-releng/freenas/_BE/os/sys/FreeNAS.amd64 amd64

The modules loaded on the FreeNAS side:

Code:

root@freenas1[/data/crash]# cat /boot/loader.conf.local

mlx4ib_load="YES" # Ensure the Mellanox mlx4 InfiniBand kernel module is loaded

ipoib_load="YES" # Ensure the IP over InfiniBand kernel module is loaded

kernel="kernel"

module_path="/boot/kernel;/boot/modules;/usr/local/modules"

kern.cam.ctl.ha_id=0

Code:

root@freenas1[/data/crash]# kldstat

Id Refs Address Size Name

1 72 0xffffffff80200000 25608a8 kernel

2 1 0xffffffff82762000 100eb0 ispfw.ko

3 1 0xffffffff82863000 f9f8 ipmi.ko

4 2 0xffffffff82873000 2d28 smbus.ko

5 1 0xffffffff82876000 8a10 freenas_sysctl.ko

6 1 0xffffffff8287f000 3aff0 mlx4ib.ko

7 1 0xffffffff828ba000 1a388 ipoib.ko

8 1 0xffffffff82d11000 32e048 vmm.ko

9 1 0xffffffff83040000 a74 nmdm.ko

10 1 0xffffffff83041000 e610 geom_mirror.ko

11 1 0xffffffff83050000 3a3c geom_multipath.ko

12 1 0xffffffff83054000 2ec dtraceall.ko

13 9 0xffffffff83055000 3acf8 dtrace.ko

14 1 0xffffffff83090000 5b8 dtmalloc.ko

15 1 0xffffffff83091000 1898 dtnfscl.ko

16 1 0xffffffff83093000 1d31 fbt.ko

17 1 0xffffffff83095000 53390 fasttrap.ko

18 1 0xffffffff830e9000 bfc sdt.ko

19 1 0xffffffff830ea000 6d80 systrace.ko

20 1 0xffffffff830f1000 6d48 systrace_freebsd32.ko

21 1 0xffffffff830f8000 f9c profile.ko

22 1 0xffffffff830f9000 13ec0 hwpmc.ko

23 1 0xffffffff8310d000 7340 t3_tom.ko

24 2 0xffffffff83115000 ab8 toecore.ko

25 1 0xffffffff83116000 ddac t4_tom.ko

Kernel running on Proxmox:

Code:

root@pvecn1:~# uname -a

Linux pvecn1 4.15.18-20-pve #1 SMP PVE 4.15.18-46 (Thu, 8 Aug 2019 10:42:06 +0200) x86_64 GNU/Linux

The modules loaded on the Proxmox side:

Code:

root@pvecn1:~# cat /etc/modules-load.d/mellanox.conf

mlx4_core

mlx4_ib

mlx4_en

ib_cm

ib_core

ib_ipoib

ib_iser

ib_umad

Code:

root@pvecn1:~# lsmod

Module Size Used by

tcp_diag 16384 0

inet_diag 24576 1 tcp_diag

veth 16384 0

nfsv3 40960 2

nfs_acl 16384 1 nfsv3

nfs 262144 4 nfsv3

lockd 94208 2 nfsv3,nfs

grace 16384 1 lockd

fscache 65536 1 nfs

ip_set 40960 0

ip6table_filter 16384 0

ip6_tables 28672 1 ip6table_filter

iptable_filter 16384 0

bonding 163840 0

softdog 16384 2

nfnetlink_log 20480 1

nfnetlink 16384 3 ip_set,nfnetlink_log

ipmi_ssif 32768 0

intel_rapl 20480 0

sb_edac 24576 0

x86_pkg_temp_thermal 16384 0

intel_powerclamp 16384 0

coretemp 16384 0

kvm_intel 217088 12

kvm 598016 1 kvm_intel

irqbypass 16384 20 kvm

crct10dif_pclmul 16384 0

crc32_pclmul 16384 0

ghash_clmulni_intel 16384 0

pcbc 16384 0

vhost_net 24576 5

vhost 45056 1 vhost_net

tap 24576 1 vhost_net

iscsi_tcp 20480 129

aesni_intel 188416 0

libiscsi_tcp 20480 1 iscsi_tcp

ib_umad 24576 4

aes_x86_64 20480 1 aesni_intel

ib_iser 49152 0

crypto_simd 16384 1 aesni_intel

glue_helper 16384 1 aesni_intel

rdma_cm 61440 1 ib_iser

cryptd 24576 3 crypto_simd,ghash_clmulni_intel,aesni_intel

iw_cm 45056 1 rdma_cm

libiscsi 57344 3 libiscsi_tcp,iscsi_tcp,ib_iser

mgag200 45056 1

scsi_transport_iscsi 98304 4 iscsi_tcp,ib_iser,libiscsi

ttm 102400 1 mgag200

snd_pcm 98304 0

ib_ipoib 102400 0

snd_timer 32768 1 snd_pcm

drm_kms_helper 172032 1 mgag200

intel_cstate 20480 0

snd 81920 2 snd_timer,snd_pcm

ib_cm 53248 2 rdma_cm,ib_ipoib

soundcore 16384 1 snd

drm 401408 4 drm_kms_helper,mgag200,ttm

mlx4_en 114688 0

i2c_algo_bit 16384 1 mgag200

intel_rapl_perf 16384 0

pcspkr 16384 0

input_leds 16384 0

joydev 20480 0

fb_sys_fops 16384 1 drm_kms_helper

syscopyarea 16384 1 drm_kms_helper

sysfillrect 16384 1 drm_kms_helper

sysimgblt 16384 1 drm_kms_helper

mei_me 40960 0

mei 94208 1 mei_me

ioatdma 53248 0

lpc_ich 24576 0

shpchp 36864 0

wmi 24576 0

ipmi_si 61440 0

ipmi_devintf 20480 0

ipmi_msghandler 53248 3 ipmi_devintf,ipmi_si,ipmi_ssif

acpi_pad 180224 0

mac_hid 16384 0

dm_round_robin 16384 21

dm_multipath 28672 22 dm_round_robin

scsi_dh_rdac 16384 0

scsi_dh_emc 16384 0

scsi_dh_alua 20480 63

mlx4_ib 176128 0

ib_core 225280 7 rdma_cm,ib_ipoib,mlx4_ib,iw_cm,ib_iser,ib_umad,ib_cm

sunrpc 335872 22 lockd,nfsv3,nfs_acl,nfs

ip_tables 28672 1 iptable_filter

x_tables 40960 4 ip6table_filter,iptable_filter,ip6_tables,ip_tables

autofs4 40960 2

zfs 3452928 4

zunicode 331776 1 zfs

zavl 16384 1 zfs

icp 258048 1 zfs

zcommon 69632 1 zfs

znvpair 77824 2 zfs,zcommon

spl 106496 4 zfs,icp,znvpair,zcommon

btrfs 1130496 0

xor 24576 1 btrfs

zstd_compress 167936 1 btrfs

raid6_pq 114688 1 btrfs

hid_generic 16384 0

usbkbd 16384 0

usbmouse 16384 0

usbhid 49152 0

hid 118784 2 usbhid,hid_generic

ahci 40960 0

i2c_i801 28672 0

libahci 32768 1 ahci

isci 139264 2

libsas 77824 1 isci

scsi_transport_sas 40960 2 isci,libsas

i40e 331776 0

mlx4_core 294912 2 mlx4_ib,mlx4_en

igb 196608 0

dca 16384 2 igb,ioatdma

devlink 45056 3 mlx4_core,mlx4_ib,mlx4_en

ptp 20480 3 i40e,igb,mlx4_en

pps_core 20480 1 ptp

The network setup, for example on the first Proxmox client:

Code:

# Mellanox Infiniband

auto ib0

iface ib0 inet static

address 10.20.24.110

netmask 255.255.255.0

pre-up echo connected > /sys/class/net/$IFACE/mode

#post-up /sbin/ifconfig $IFACE mtu 65520

post-up /sbin/ifconfig $IFACE mtu 40950

# Mellanox Infiniband

auto ib1

iface ib1 inet static

address 10.20.25.110

netmask 255.255.255.0

pre-up echo connected > /sys/class/net/$IFACE/mode

#post-up /sbin/ifconfig $IFACE mtu 65520

post-up /sbin/ifconfig $IFACE mtu 40950
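
Since the suspicion is a mix of Connected and Datagram mode, it may help to verify on each Proxmox client that the settings above were actually applied. The following is a rough sketch that reads the same sysfs `mode` file the `pre-up` line writes to, plus the interface MTU. The helper names and the default expected values (`connected`, 40950) are assumptions taken from this configuration.

```python
from pathlib import Path


def read_ipoib_state(iface, sysfs_root="/sys/class/net"):
    """Return (mode, mtu) for an IPoIB interface as reported by sysfs."""
    base = Path(sysfs_root) / iface
    mode = (base / "mode").read_text().strip()
    mtu = int((base / "mtu").read_text().strip())
    return mode, mtu


def check_iface(iface, expected_mode="connected", expected_mtu=40950,
                sysfs_root="/sys/class/net"):
    """Return a list of human-readable mismatches (empty if all is fine)."""
    mode, mtu = read_ipoib_state(iface, sysfs_root)
    problems = []
    if mode != expected_mode:
        problems.append(f"{iface}: mode is {mode!r}, expected {expected_mode!r}")
    if mtu != expected_mtu:
        problems.append(f"{iface}: mtu is {mtu}, expected {expected_mtu}")
    return problems


for name in ("ib0", "ib1"):
    try:
        issues = check_iface(name)
    except OSError as exc:
        # Interface missing or not an IPoIB device on this machine.
        print(f"{name}: cannot read state ({exc})")
    else:
        print(f"{name}: " + ("ok" if not issues else "; ".join(issues)))
```

Running this periodically, or right after an "arp: ... moved" event, would show whether an interface has silently fallen back to Datagram mode.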

On the Proxmox side I'm running a multipath setup.

This is the content of /etc/multipath.conf:

Code:

defaults {

polling_interval 2

path_selector "round-robin 0"

path_grouping_policy multibus

uid_attribute ID_SERIAL

rr_min_io_rq 1

rr_weight uniform

failback immediate

no_path_retry queue

user_friendly_names yes

}

blacklist {

devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"

devnode "^(td|hd)[a-z]"

devnode "^dcssblk[0-9]*"

devnode "^cciss!c[0-9]d[0-9]*"

device {

vendor "DGC"

product "LUNZ"

}

device {

vendor "EMC"

product "LUNZ"

}

device {

vendor "IBM"

product "Universal Xport"

}

device {

vendor "IBM"

product "S/390.*"

}

device {

vendor "DELL"

product "Universal Xport"

}

device {

vendor "SGI"

product "Universal Xport"

}

device {

vendor "STK"

product "Universal Xport"

}

device {

vendor "SUN"

product "Universal Xport"

}

device {

vendor "(NETAPP|LSI|ENGENIO)"

product "Universal Xport"

}

}

blacklist_exceptions {

wwid "36589cfc000000ba043795f1aaa24a764"

wwid "36589cfc0000001dd62faaaaa75d7c679"

wwid "36589cfc0000007aea1572aaef3aec823"

wwid "36589cfc0000009e1e93487c97aae9a54"

wwid "36589cfc000000f635efd89cb87f03890"

wwid "36589cfc000000563c0c9bb4a2e354f25"

wwid "36589cfc0000008bae6fc06d5e0c88172"

wwid "36589cfc0000005a963aa288b259f58e4"

wwid "36589cfc000000a4054637fc225b8e98f"

wwid "36589cfc000000b7d308a80c43d99bcf1"

wwid "36589cfc000000ecc18d003644fc0e791"

wwid "36589cfc0000008e202c2cd920e0eae8d"

wwid "36589cfc0000001c5c48ed15790210293"

wwid "36589cfc0000003dff6c09aea0a3dde3f"

wwid "36589cfc000000ac16a0f04a4d0b9b80c"

wwid "36589cfc0000008b9453ba6f4f7208a08"

wwid "36589cfc000000bef6e9ccda0bfeedc7e"

wwid "36589cfc000000f62a06da4e537faf8a5"

wwid "36589cfc000000a1c16d9b9b4fd27cf45"

wwid "36589cfc0000001d5698dd929a4db723b"

wwid "36589cfc000000fa534d1f6243e35b48a"

}

multipaths {

multipath {

wwid "36589cfc000000ba043795f1aaa24a764"

alias mpath100d0

}

multipath {

wwid "36589cfc0000001dd62faaaaa75d7c679"

alias mpath101d0

}

multipath {

wwid "36589cfc0000007aea1572aaef3aec823"

alias mpath102d0

}

multipath {

wwid "36589cfc0000009e1e93487c97aae9a54"

alias mpath103d0

}

multipath {

wwid "36589cfc000000f635efd89cb87f03890"

alias mpath104d0

}

multipath {

wwid "36589cfc000000563c0c9bb4a2e354f25"

alias mpath105d0

}

multipath {

wwid "36589cfc0000008bae6fc06d5e0c88172"

alias mpath106d0

}

multipath {

wwid "36589cfc0000005a963aa288b259f58e4"

alias mpath107d0

}

multipath {

wwid "36589cfc000000a4054637fc225b8e98f"

alias mpath108d0

}

multipath {

wwid "36589cfc000000b7d308a80c43d99bcf1"

alias mpath108d1

}

multipath {

wwid "36589cfc000000ecc18d003644fc0e791"

alias mpath109d1

}

multipath {

wwid "36589cfc0000008e202c2cd920e0eae8d"

alias mpath109d2

}

multipath {

wwid "36589cfc0000001c5c48ed15790210293"

alias mpath109d3

}

multipath {

wwid "36589cfc0000003dff6c09aea0a3dde3f"

alias mpath109d4

}

multipath {

wwid "36589cfc000000ac16a0f04a4d0b9b80c"

alias mpath110d0

}

multipath {

wwid "36589cfc0000008b9453ba6f4f7208a08"

alias mpath110d1

}

multipath {

wwid "36589cfc000000bef6e9ccda0bfeedc7e"

alias mpath110d2

}

multipath {

wwid "36589cfc000000f62a06da4e537faf8a5"

alias mpath111d0

}

multipath {

wwid "36589cfc000000a1c16d9b9b4fd27cf45"

alias mpath111d1

}

multipath {

wwid "36589cfc0000001d5698dd929a4db723b"

alias mpath112d0

}

multipath {

wwid "36589cfc000000fa534d1f6243e35b48a"

alias mpath112d1

}

}
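
With this many WWIDs, the `blacklist_exceptions` and `multipaths` sections can easily drift apart. Here is a small sketch for cross-checking them. It assumes the simple layout used above (no nested braces inside `blacklist_exceptions` or inside a single `multipath` stanza), and the function names are hypothetical. The sample text is a shortened excerpt of the file.

```python
import re

WWID = re.compile(r'wwid\s+"([0-9a-f]+)"')


def exception_wwids(conf):
    """WWIDs listed in the blacklist_exceptions section."""
    section = re.search(r"blacklist_exceptions\s*\{([^}]*)\}", conf)
    return set(WWID.findall(section.group(1))) if section else set()


def alias_by_wwid(conf):
    """Map each WWID in a 'multipath { ... }' stanza to its alias."""
    aliases = {}
    # '\bmultipath\s*\{' does not match the outer 'multipaths {' line.
    for body in re.findall(r"\bmultipath\s*\{([^}]*)\}", conf):
        wwid = WWID.search(body)
        alias = re.search(r"alias\s+(\S+)", body)
        if wwid and alias:
            aliases[wwid.group(1)] = alias.group(1)
    return aliases


SAMPLE = """
blacklist_exceptions {
    wwid "36589cfc000000ba043795f1aaa24a764"
    wwid "36589cfc0000001dd62faaaaa75d7c679"
}
multipaths {
    multipath {
        wwid "36589cfc000000ba043795f1aaa24a764"
        alias mpath100d0
    }
    multipath {
        wwid "36589cfc0000001dd62faaaaa75d7c679"
        alias mpath101d0
    }
}
"""

allowed = exception_wwids(SAMPLE)
aliases = alias_by_wwid(SAMPLE)
print("allowed but without alias:", sorted(allowed - aliases.keys()))
print("aliased but still blacklisted:", sorted(aliases.keys() - allowed))
```

To check the real file, replace `SAMPLE` with the contents of /etc/multipath.conf; both printed lists should be empty.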

The ifconfig output for both InfiniBand ports on the FreeNAS server looks like this:

Code:

ib0: flags=8043<UP,BROADCAST,RUNNING,MULTICAST> metric 0 mtu 40950

options=80018<VLAN_MTU,VLAN_HWTAGGING,LINKSTATE>

lladdr 80.0.2.8.fe.80.0.0.0.0.0.0.0.2.c9.3.0.3a.ed.41

inet 10.20.24.210 netmask 0xffffff00 broadcast 10.20.24.255

nd6 options=9<PERFORMNUD,IFDISABLED>

ib1: flags=8043<UP,BROADCAST,RUNNING,MULTICAST> metric 0 mtu 40950

options=80018<VLAN_MTU,VLAN_HWTAGGING,LINKSTATE>

lladdr 80.0.2.9.fe.80.0.0.0.0.0.0.0.2.c9.3.0.3a.ed.42

inet 10.20.25.210 netmask 0xffffff00 broadcast 10.20.25.255

nd6 options=9<PERFORMNUD,IFDISABLED>

ifconfig on the first Proxmox client:

Code:

ib0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 40950

inet 10.20.24.110 netmask 255.255.255.0 broadcast 10.20.24.255

inet6 fe80::202:c903:9:20e3 prefixlen 64 scopeid 0x20<link>

unspec 80-00-02-08-FE-80-00-00-00-00-00-00-00-00-00-00 txqueuelen 256 (UNSPEC)

RX packets 5596912 bytes 10293861835 (9.5 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3744669 bytes 48471009082 (45.1 GiB)

TX errors 0 dropped 125 overruns 0 carrier 0 collisions 0

ib1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 40950

inet 10.20.25.110 netmask 255.255.255.0 broadcast 10.20.25.255

inet6 fe80::202:c903:9:20e4 prefixlen 64 scopeid 0x20<link>

unspec 80-00-02-09-FE-80-00-00-00-00-00-00-00-00-00-00 txqueuelen 256 (UNSPEC)

RX packets 6863837 bytes 8858149718 (8.2 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6197948 bytes 96516756048 (89.8 GiB)

TX errors 0 dropped 257 overruns 0 carrier 0 collisions 0