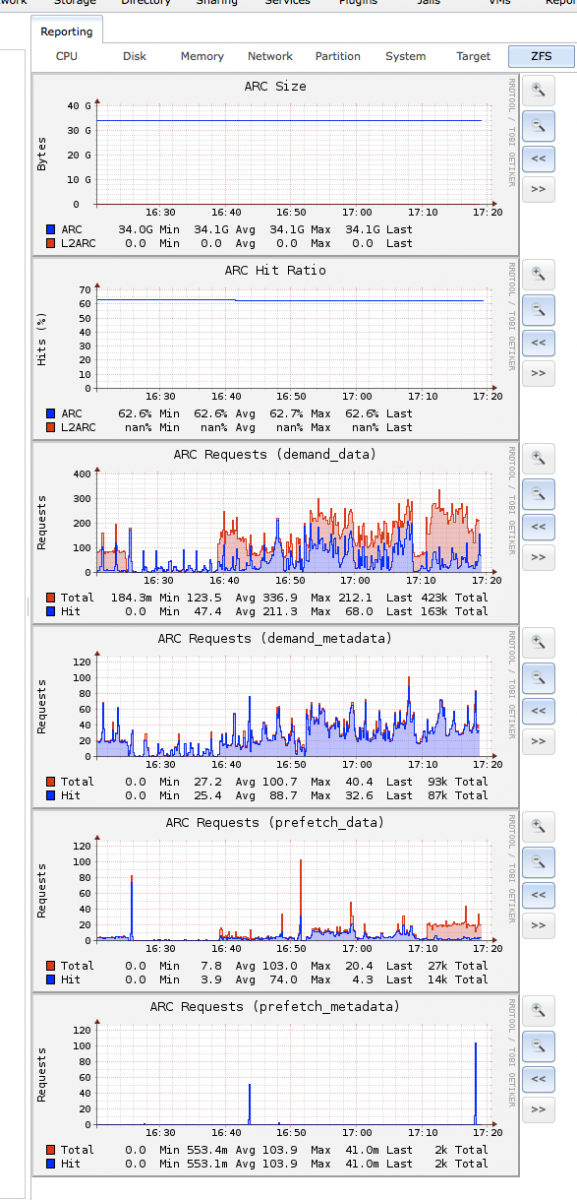

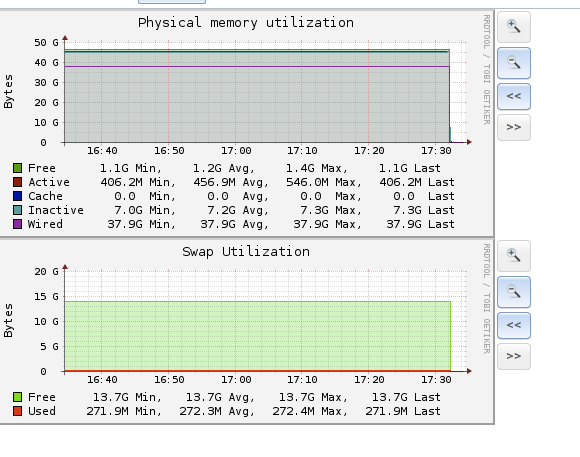

How does one go about interpreting the ARC graphs on the reporting page when trying to decide whether there would be a benefit to adding more RAM?

I currently have 48GB of RAM and a raidz2 with seven 8TB drives (more hardware details in signature). I could quite easily and cheaply add another 24GB of RAM, since the general consensus seems to be "the more RAM the better", but for all I know we're barely using what we have already. The attached graphs represent a typical afternoon where both of us are in full production mode: culling folders with many thousands of Canon RAW files, designing albums, batching JPEGs, and other photo post-production activities.

Based on what you see here, would more RAM bring any tangible benefit, or is the system barely breaking a sweat as it is, meaning I would just be wasting my time and money?
