CheeryFlame

I have a smaller off-site server that backs up my main server every day at midnight.

I can't afford much space on the backup server. Currently, if I choose Same as Source, it will keep too much data.

= 1 month which I don't have enough space for

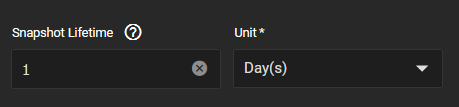

I'm not sure what to do so that TrueNAS keeps only the latest snapshot on the backup server. If I set the retention to 1 day, does that mean that once a new snapshot has been successfully replicated, snapshots older than 1 day will be deleted? In that case it would delete only one snapshot. Or will the data in the snapshot be deleted after one day regardless of whether there has been a new replication?

= what I think would be optimal

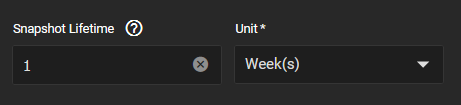
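To make the two possibilities in my question concrete, here is a rough sketch of what I mean. It is plain Python, not TrueNAS code: the function names and the example dates are made up for illustration, and I don't know which of the two readings (if either) matches what TrueNAS actually does.

```python
from datetime import datetime, timedelta

RETENTION = timedelta(days=1)  # the "1 day" setting from the question


def prune_after_replication(snapshots, now, replication_succeeded):
    # Reading 1 (hypothetical): cleanup only runs once a new snapshot
    # has been replicated successfully; otherwise nothing is touched.
    if not replication_succeeded:
        return list(snapshots)
    return [s for s in snapshots if now - s <= RETENTION]


def prune_by_age(snapshots, now):
    # Reading 2 (hypothetical): anything older than the retention window
    # is dropped, whether or not a newer snapshot has arrived.
    return [s for s in snapshots if now - s <= RETENTION]


# One snapshot on the backup server, followed by two nights of failed
# replication (arbitrary example dates).
backup = [datetime(2024, 1, 1, 0, 0)]
now = datetime(2024, 1, 3, 0, 5)

print(len(prune_after_replication(backup, now, replication_succeeded=False)))
print(len(prune_by_age(backup, now)))
```

Under the first reading the backup server still holds its one snapshot after the failed nights; under the second it ends up with none, which is the case I'm worried about.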

Since I was afraid of the latter (deletion regardless of replication), I've set the snapshot retention to 1 week, because I have 48TB to transfer at this time.

= what's currently set up for the initial transfer

Now, after the transfer, can I set the retention to 1 day so that, on the next replication, snapshots older than 1 day will be removed?

Unnecessary backstory of how I ended up in this situation:

- The backup server filled up to 100% in one night

- I deleted all the snapshots, but the datasets were empty even though they were shown as mounted in the GUI

- I manually mounted all datasets from the CLI and my data was back

- Pool usage dropped from 56TB (full) to 48TB

- I started the replication task again on the main server

- Everything got deleted, and now the whole 48TB is transferring all over again