internat_user (Cadet) · Joined: Apr 19, 2022 · Messages: 8

System: TrueNAS-12.0-U8

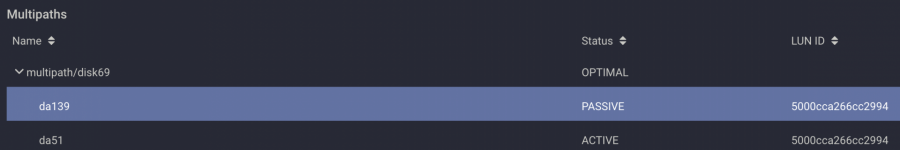

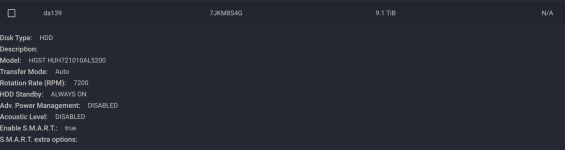

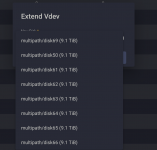

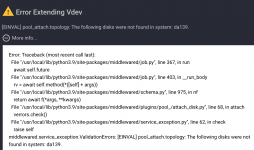

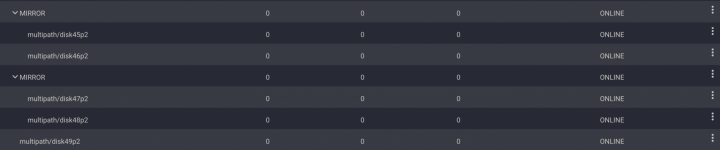

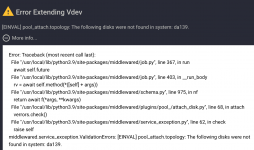

I was doing some cleanup/maintenance on my TrueNAS system and noticed that my mirrored pool contains a single-disk vdev. There are no known errors on the pool or on the drive. When I try to extend that vdev to a mirror, I get an error saying the disk cannot be found, even though I can see the disk under 'Disks' and both devices under 'Multipaths'. I am also unable to remove the disk from the pool.

Multipath/disk49p2 is the disk in question. Ideally, I want to extend this vdev so that it becomes a mirror rather than a single-disk vdev.
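For reference, one way to spot a vdev like this outside the UI is to read the config tree printed by `zpool status`. The sketch below is a hypothetical helper (`single_disk_vdevs` is not part of TrueNAS or ZFS) that parses that text, assuming the usual layout of one tab followed by two spaces per nesting level, and reports top-level data vdevs that have no child devices. The pool name `tank` and the `gptid/...` members in the sample are invented for illustration.

```python
from typing import List, Tuple

# Hypothetical sample output; the pool and member names are invented.
SAMPLE_STATUS = (
    "  pool: tank\n"
    " state: ONLINE\n"
    "config:\n"
    "\n"
    "\tNAME                    STATE     READ WRITE CKSUM\n"
    "\ttank                    ONLINE       0     0     0\n"
    "\t  mirror-0              ONLINE       0     0     0\n"
    "\t    gptid/aaaa          ONLINE       0     0     0\n"
    "\t    gptid/bbbb          ONLINE       0     0     0\n"
    "\t  multipath/disk49p2    ONLINE       0     0     0\n"
    "\n"
    "errors: No known data errors\n"
)


def single_disk_vdevs(status_text: str) -> List[str]:
    """Return top-level data vdevs that have no children (plain single disks).

    Assumes the config tree is indented with one tab plus two spaces per level.
    Only the data section is scanned; "logs", "cache", "spares" etc. are ignored.
    """
    entries: List[Tuple[int, str]] = []  # (depth below the pool line, device name)
    in_tree = False
    pool_seen = False
    for line in status_text.splitlines():
        stripped = line.strip()
        if not in_tree:
            # The column header line marks the start of the config tree.
            in_tree = stripped.startswith("NAME") and "STATE" in stripped
            continue
        if not stripped:
            if pool_seen:
                break  # the blank line after the tree ends it
            continue
        body = line.lstrip("\t")
        depth = (len(body) - len(body.lstrip(" "))) // 2
        if depth == 0:
            if pool_seen:
                break  # a new section such as "logs" or "cache" begins
            pool_seen = True  # this is the pool's own line
            continue
        entries.append((depth, stripped.split()[0]))

    singles = []
    for i, (depth, name) in enumerate(entries):
        if depth != 1:
            continue  # only top-level vdevs are of interest
        has_children = i + 1 < len(entries) and entries[i + 1][0] > 1
        if not has_children:
            singles.append(name)
    return singles


if __name__ == "__main__":
    print(single_disk_vdevs(SAMPLE_STATUS))  # ['multipath/disk49p2']
```

A top-level entry with no indented children underneath it is a lone device rather than a `mirror-N` or `raidz` group, which is the situation described above.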

I have tried replacing the vdev and retrying the process, but I get the same error. I have also tried wiping the disks before adding them.

Is there a way to extend this vdev from the CLI, or otherwise move the data off this vdev and remove it?
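On the CLI side, the ZFS command that turns a single-disk vdev into a mirror is `zpool attach <pool> <existing-device> <new-device>`, which adds the new device alongside the existing one and starts a resilver. The sketch below only assembles and prints that command unless `dry_run=False` is passed. The pool name `tank` and the new device `multipath/disk50p2` are hypothetical placeholders, and the sketch assumes the new disk has already been partitioned the way the TrueNAS UI would normally prepare it. Running ZFS commands directly from the shell bypasses the TrueNAS middleware, so treat this as an illustration, not a recommended procedure.

```python
import shlex
import subprocess
from typing import List


def zpool_attach_cmd(pool: str, existing: str, new_device: str, force: bool = False) -> List[str]:
    """Build the argument list for `zpool attach`.

    Attaching new_device to existing turns a single-disk top-level vdev into a
    two-way mirror (or widens an existing mirror) and starts a resilver.
    """
    cmd = ["zpool", "attach"]
    if force:
        cmd.append("-f")  # -f: use new_device even if it appears to be in use
    cmd += [pool, existing, new_device]
    return cmd


def attach(pool: str, existing: str, new_device: str, dry_run: bool = True) -> str:
    """Print the attach command; only execute it when dry_run is False."""
    cmd = zpool_attach_cmd(pool, existing, new_device)
    line = shlex.join(cmd)
    print(line)
    if not dry_run:
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return line


if __name__ == "__main__":
    # Hypothetical names: "tank" and "multipath/disk50p2" are placeholders.
    attach("tank", "multipath/disk49p2", "multipath/disk50p2")
    # prints: zpool attach tank multipath/disk49p2 multipath/disk50p2
```

If the attach succeeds, `zpool status` should then list the former single disk under a `mirror-N` entry together with the new device while the resilver runs.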

I am new here, so if any additional information is required, please let me know; I am happy to provide it. I have also attached several screenshots.

Attachments

- Screen Shot 2022-04-19 at 4.53.56 PM.png
- Screen Shot 2022-04-19 at 4.53.45 PM.png
- Screen Shot 2022-04-19 at 4.33.48 PM.png
- Screen Shot 2022-04-19 at 4.52.17 PM.png
- Screen Shot 2022-04-19 at 4.50.24 PM.png
- 1650402245971.png