I'm hoping someone can help me improve read speeds over the network from my TrueNAS installation. I have an SMB share mounted on a Linux server, and reads from it are terribly slow (15.2 MB/s), so I'm trying to figure out what I can do to improve the performance of the system.

My system runs TrueNAS Core (TrueNAS-12.0-U6.1) with the following configuration:

- 72 GB RAM
- 4 x 14 TB Seagate Exos X16 7200 RPM (ST14000NM001G)
- Dual Intel Xeon X5670 CPUs @ 2.93 GHz (24 threads total)

To rule out the NAS hardware as the problem, I first ran a read/write baseline in the TrueNAS shell itself, and the numbers look decent:

```
eric@truenas:/mnt/HomeNAS/media/eric $ dd if=/dev/zero of=./test.dat bs=2048k count=50000
50000+0 records in
50000+0 records out
104857600000 bytes transferred in 43.785081 secs (2394824848 bytes/sec)

eric@truenas:/mnt/HomeNAS/media/eric $ dd of=/dev/null if=./test.dat bs=2048k count=50000
50000+0 records in
50000+0 records out
104857600000 bytes transferred in 18.638570 secs (5625839373 bytes/sec)
```
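One caveat with this baseline: the test file was written from `/dev/zero` onto a dataset with lz4 compression. With compression enabled, ZFS stores all-zero blocks as holes instead of writing them to disk, so these figures probably reflect RAM and CPU more than the four Exos drives. For comparison, 5.6 GB/s is well beyond what four 7200 RPM drives can deliver sequentially. Below is a minimal sketch of a baseline that uses incompressible data instead, assuming `/dev/urandom` is an acceptable source. Keep in mind that `/dev/urandom` may itself cap the write rate, so the read-back is the more meaningful number. `TESTFILE` and `COUNT` are hypothetical variable names. The defaults write only 16 MiB to `/tmp` so the script is safe to try first. For a real run on the pool, use a count large enough that the file exceeds the 72 GB of RAM, so the read is not served from the ARC. The original 50000 x 2 MiB (about 105 GB) meets that requirement.

```shell
#!/bin/sh
# Sketch: local baseline with incompressible data, so lz4 cannot shrink the
# writes and ZFS zero-block detection cannot turn them into holes.
# TESTFILE and COUNT are hypothetical knobs; the defaults are deliberately
# tiny (8 x 2 MiB = 16 MiB in /tmp) so the script is safe to try first.
TESTFILE=${TESTFILE:-/tmp/test_random.dat}
COUNT=${COUNT:-8}

# Write pass: random data from /dev/urandom (which may itself limit throughput).
dd if=/dev/urandom of="$TESTFILE" bs=2048k count="$COUNT"

# Read pass: read the same file back and discard the data.
dd if="$TESTFILE" of=/dev/null bs=2048k count="$COUNT"
```

A real run might look like `TESTFILE=/mnt/HomeNAS/media/eric/random.dat COUNT=50000 sh baseline.sh`, where `baseline.sh` is whatever name the script is saved under.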

Then I ran the same read test from my Linux server (docker1), which has the media share mounted, and got an abysmal result:

```
[eric@docker1 eric]$ dd of=/dev/null if=./test.dat bs=2048k count=50000
50000+0 records in
50000+0 records out
104857600000 bytes (105 GB) copied, 6876.65 s, 15.2 MB/s
```

So reads drop from 5.6 GB/s (local) to 15.2 MB/s (over the network).
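As a quick sanity check on the units, the rates above can be reproduced from the raw dd byte counts and elapsed times. This minimal sketch uses `awk` for the floating-point division. `mb_per_s` is a hypothetical helper name. MB here means decimal megabytes (10^6 bytes), which matches the MB/s figure GNU dd printed on the Linux side.

```shell
#!/bin/sh
# Convert a dd result (bytes copied, elapsed seconds) into MB/s, using
# decimal megabytes (1 MB = 1,000,000 bytes).
mb_per_s() {
  awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.1f\n", bytes / secs / 1e6 }'
}

mb_per_s 104857600000 43.785081   # local write on TrueNAS, prints 2394.8
mb_per_s 104857600000 18.638570   # local read on TrueNAS, prints 5625.8
mb_per_s 104857600000 6876.65     # read over SMB on docker1, prints 15.2
```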

Running iperf3 between the two servers gives me full gigabit transmission speeds:

```
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bandwidth       Retr
[ 4]  0.00-10.00  sec  1.10 GBytes   942 Mbits/sec    0             sender
[ 4]  0.00-10.00  sec  1.10 GBytes   941 Mbits/sec                  receiver

iperf Done.
```
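To put "full gigabit" in the same units as the dd results, the iperf3 rate can be converted to MB/s. That rate also gives an estimate of how long the 105 GB test file would take to read. This rough sketch assumes SMB could sustain the measured TCP throughput with no further protocol overhead, so the result is an upper bound rather than a prediction. `mbit_to_mb` and `ideal_secs` are hypothetical helper names.

```shell
#!/bin/sh
# Convert an iperf3 rate in Mbit/s to MB/s (8 bits per byte, decimal units).
mbit_to_mb() {
  awk -v mbit="$1" 'BEGIN { printf "%.2f\n", mbit / 8 }'
}

# Seconds needed to move a given number of bytes at a given rate in Mbit/s.
ideal_secs() {
  awk -v bytes="$1" -v mbit="$2" 'BEGIN { printf "%.1f\n", bytes / (mbit * 1e6 / 8) }'
}

mbit_to_mb 942                  # prints 117.75
ideal_secs 104857600000 942     # prints 890.5 (the SMB read took 6876.65 s)
```

At roughly 118 MB/s, the link itself could move the test file about 7.7 times faster than the SMB read did. A single GigE link (125 MB/s raw) cannot, however, reach 150 MB/s.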

So I presume the problem lies either in how the share is configured or in how it is mounted.

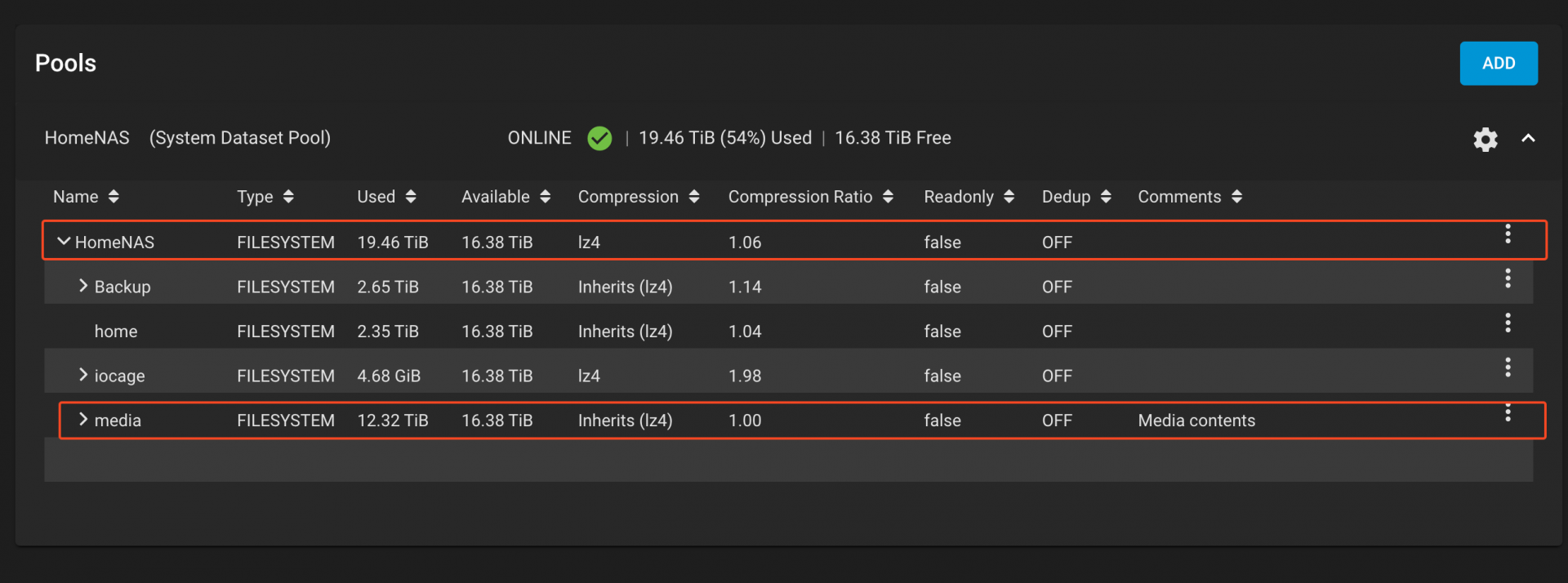

I have one pool that uses all of the available space, with lz4 compression, and a dataset within that pool that inherits the pool's compression setting.

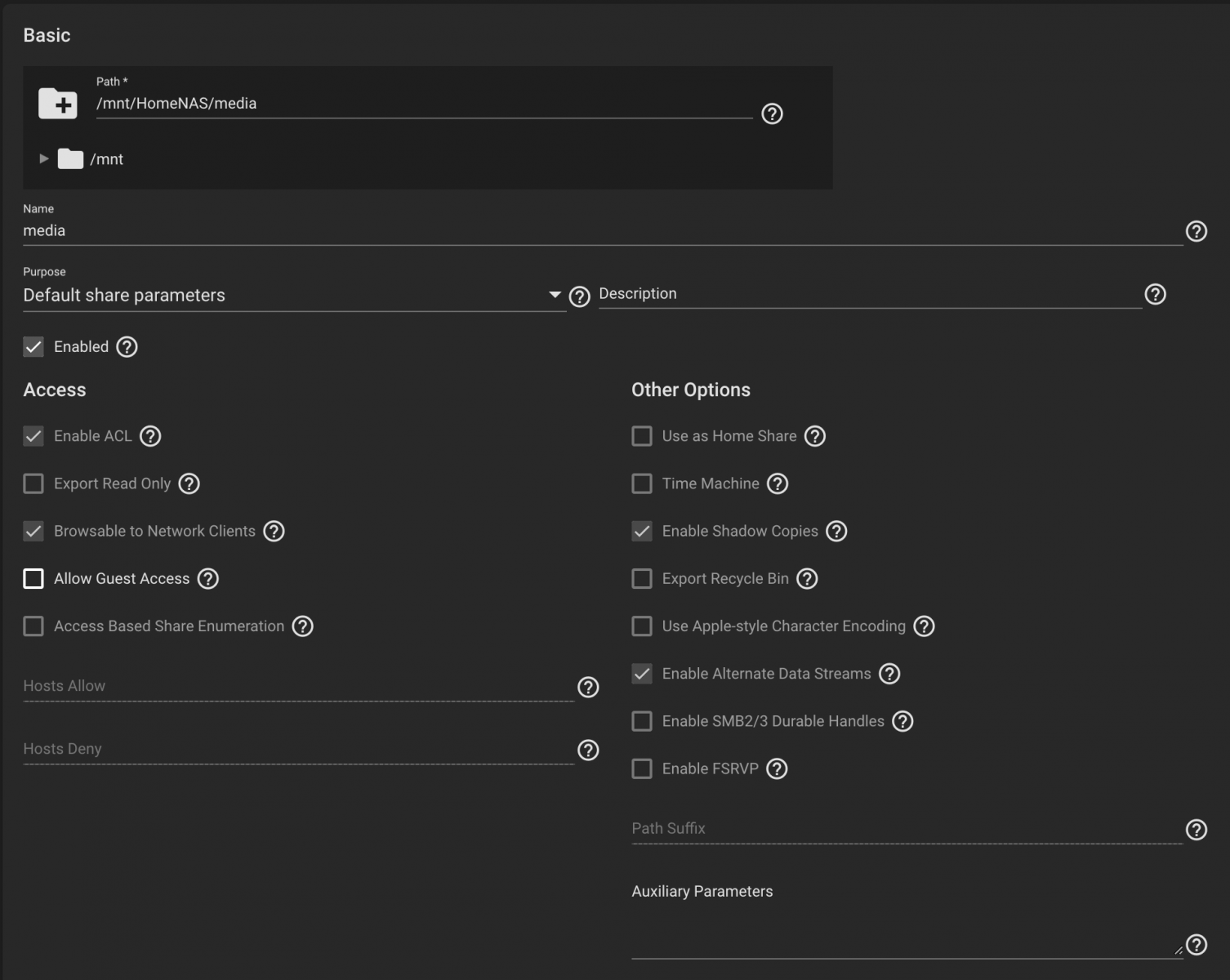

I have an SMB share defined as:

On the server, the share is mounted via CIFS in `/etc/fstab`:

```
//nas.domain.ca/media /mnt/media cifs rw,user,credentials=/etc/fstab.credentials.docker,vers=3.0,_netdev,soft,uid=bittorrent,gid=media,noperm,noacl,file_mode=0775,dir_mode=02775 0 0
```

Offhand, I don't see anything obviously wrong with this, although I am forcing SMB 3.0 with `vers=3.0`.
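The fstab entry doesn't set `rsize`, `wsize` or `cache`, so the effective values are whatever the client and server negotiated. This minimal sketch checks them on docker1 by reading the kernel's view of the mount in `/proc/mounts`, which is Linux-specific. It only reports values and does not imply any particular setting is better. `cifs_opt` and `MOUNTS_FILE` are hypothetical names. `MOUNTS_FILE` exists only so the function can be tried against a sample file.

```shell
#!/bin/sh
# Print the value of one mount option (e.g. rsize, wsize, vers, cache) for a
# CIFS mount point, as reported by the kernel in /proc/mounts (Linux only).
# MOUNTS_FILE is a hypothetical override pointing at a sample mounts file.
cifs_opt() {
  mountpoint="$1"
  option="$2"
  awk -v mp="$mountpoint" -v opt="$option" '
    $2 == mp && $3 == "cifs" {
      n = split($4, opts, ",")
      for (i = 1; i <= n; i++) {
        # Only "name=value" options match; flags like "rw" are skipped.
        if (opts[i] ~ ("^" opt "=")) {
          sub("^" opt "=", "", opts[i])
          print opts[i]
        }
      }
    }' "${MOUNTS_FILE:-/proc/mounts}"
}

# Usage on docker1 (output depends on what was actually negotiated):
#   cifs_opt /mnt/media rsize
#   cifs_opt /mnt/media cache
```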

Any suggestions for what I can do to improve performance over the wire? For my needs, 15 MB/s is abysmal on a GigE connection; I would have expected something in the 100-150 MB/s range. Or am I expecting too much?

Thanks!

Eric
