viniciusferrao

Contributor

- Joined

- Mar 30, 2013

- Messages

- 192

Hello guys,

I'm not satisfied with my storage performance, and I would like to do some debugging to find where the bottleneck is. The question is: how do I do this?

Here is my setup:

Supermicro X9SCM with Xeon E3-1240V2

32GB DDR3 1600MHz with ECC

Intel RAID Controller flashed to LSI in IT Mode

24 x 3TB Seagate SATA Disks

2x Kingston SSDV300 128GB for SLOG

We have three ZFS pools: one with 4 disks in RAID-Z1, with forced async writes, used only for useless data, and two independent RAID-Z2 pools with 10 disks each. Each SSD acts as the SLOG device for one of the 10-disk pools. These two pools have sync=always enabled.

Here is the pool configuration:
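As a quick sanity check of this layout, here is a minimal sketch of the capacity arithmetic. It assumes nominal 3 TB (decimal) disks and ignores partitioning and ZFS metadata overhead; the helper names are made up for illustration. Note that `zpool list` reports raw size including parity for RAID-Z vdevs, so the raw figures are the ones to compare against its SIZE column.

```python
TB = 10**12   # drive vendors use decimal terabytes
TIB = 2**40   # zpool reports binary units (shown as "T")

def raw_tib(disks, disk_tb=3):
    """Raw pool size in TiB, parity included (what zpool list shows for RAID-Z)."""
    return disks * disk_tb * TB / TIB

def data_tib(disks, parity, disk_tb=3):
    """Rough space left for data in TiB after subtracting parity disks."""
    return (disks - parity) * disk_tb * TB / TIB

# 4-disk RAID-Z1 pool (one parity disk)
print(round(raw_tib(4), 2), round(data_tib(4, 1), 2))
# 10-disk RAID-Z2 pools (two parity disks)
print(round(raw_tib(10), 2), round(data_tib(10, 2), 2))
```

The raw results (about 10.9 TiB and 27.3 TiB) are in line with the 10.9T and 27.2T that `zpool list` reports for these pools; the small difference is expected given the ignored overhead.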

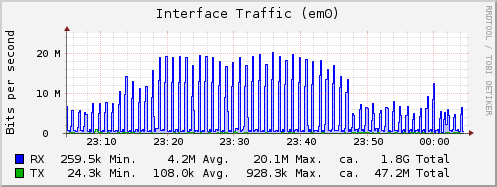

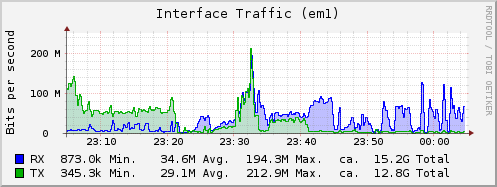

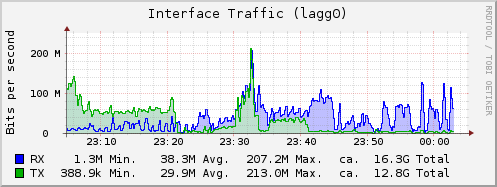

Code:

    storage# zpool list
    NAME           SIZE  ALLOC   FREE    CAP  DEDUP  HEALTH  ALTROOT
    intpool0      10.9T  3.74T  7.14T    34%  1.00x  ONLINE  /mnt
    storagepool0  27.2T  2.95T  24.3T    10%  1.00x  ONLINE  /mnt
    storagepool1  27.2T  11.5T  15.7T    42%  1.00x  ONLINE  /mnt

    storage# zpool status
      pool: intpool0
     state: ONLINE
      scan: scrub repaired 0 in 2h13m with 0 errors on Sun Oct 20 03:13:21 2013
    config:

        NAME                                            STATE     READ WRITE CKSUM
        intpool0                                        ONLINE       0     0     0
          raidz1-0                                      ONLINE       0     0     0
            gptid/345d97a2-f960-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/34e503e5-f960-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/3569dbe2-f960-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/35f7b991-f960-11e2-8474-001018427ad4  ONLINE       0     0     0

    errors: No known data errors

      pool: storagepool0
     state: ONLINE
      scan: scrub repaired 0 in 7h45m with 0 errors on Sun Oct 20 08:45:59 2013
    config:

        NAME                                            STATE     READ WRITE CKSUM
        storagepool0                                    ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/5b0acab8-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/5b8bf65a-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/5c0bcbf2-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/5c8ef3b1-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/5d14fa55-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/5d971bea-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/5e1f2120-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/5ea100f0-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/5f252675-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/5fac6691-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
        logs
          gptid/601457a3-f95f-11e2-8474-001018427ad4    ONLINE       0     0     0

    errors: No known data errors

      pool: storagepool1
     state: ONLINE
      scan: scrub repaired 0 in 3h35m with 0 errors on Sun Oct 20 04:35:36 2013
    config:

        NAME                                            STATE     READ WRITE CKSUM
        storagepool1                                    ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/8e7889e1-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/8efb2430-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/8f7c685f-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/9001a059-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/907eefbe-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/90fbcbf8-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/9184701e-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/9207f18b-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/928bcc13-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
            gptid/93106513-f95f-11e2-8474-001018427ad4  ONLINE       0     0     0
        logs
          gptid/937a3a6a-f95f-11e2-8474-001018427ad4    ONLINE       0     0     0

    errors: No known data errors

Code:

    storage# zfs get sync
    NAME               PROPERTY  VALUE     SOURCE
    intpool0           sync      standard  local
    storagepool0       sync      always    local
    storagepool0/lvm0  sync      always    inherited from storagepool0
    storagepool1       sync      always    local
    storagepool1/lvm1  sync      always    inherited from storagepool1
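To make the sync settings easier to scan, here is a minimal sketch that parses the `zfs get sync` output above and lists the datasets where every write is forced through the log device. The output is pasted in as a string; the function and variable names are hypothetical, and the parser assumes the four-column layout shown in this post.

```python
# Output copied from the `zfs get sync` listing in this post.
ZFS_GET_SYNC = """\
NAME               PROPERTY  VALUE     SOURCE
intpool0           sync      standard  local
storagepool0       sync      always    local
storagepool0/lvm0  sync      always    inherited from storagepool0
storagepool1       sync      always    local
storagepool1/lvm1  sync      always    inherited from storagepool1
"""

def parse_sync(output):
    """Return {dataset: sync value} from `zfs get sync` text output."""
    result = {}
    for line in output.splitlines()[1:]:   # skip the header row
        parts = line.split(None, 3)        # SOURCE may contain spaces
        if len(parts) >= 3 and parts[1] == "sync":
            result[parts[0]] = parts[2]
    return result

sync_by_dataset = parse_sync(ZFS_GET_SYNC)
always = sorted(name for name, value in sync_by_dataset.items() if value == "always")
print(always)
print(sync_by_dataset["intpool0"])
```

With this data, all four datasets on the two RAID-Z2 pools come back as `always`, while intpool0 reports `standard` rather than `disabled`, which may be worth double-checking against the "forced async writes" description.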

And finally, here are the performance graphs. The network uses two Intel NICs in lagg mode:

As you can see, the maximum throughput is about 200 Mbit/s, which is very slow.
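To put that number in perspective, here is a small sketch of the unit conversion. The 1 Gbit/s link speed per NIC is an assumption (the NIC speed is not stated in this post), and the names are illustrative only.

```python
def mbit_to_mbyte_per_s(mbit_per_s):
    """Convert megabits per second to megabytes per second (decimal units)."""
    return mbit_per_s / 8

OBSERVED_MBIT = 200        # peak throughput read off the graphs
ASSUMED_LINK_MBIT = 1000   # ASSUMPTION: each NIC is gigabit Ethernet

print(mbit_to_mbyte_per_s(OBSERVED_MBIT))   # 25.0
print(OBSERVED_MBIT / ASSUMED_LINK_MBIT)    # 0.2
```

Under that assumption, the peak is roughly 25 MB/s, or about a fifth of what a single gigabit link could carry, so the network is not obviously saturated.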

Any information is welcome.

Thanks in advance,