Chocofate (Dabbler, joined Mar 21, 2022, 10 messages)

System version: TrueNAS-12.0-U8

Hardware:

- CPU: AMD Ryzen 5 PRO 5650GE
- Motherboard: ASRock X570 Pro4
- RAM: Samsung DDR4-3200 ECC UDIMM, 32 GB × 4
- GPU: NVIDIA GeForce RTX 3050
- NIC: Intel X540-T1
- SSD: Crucial P2 2 TB × 2
- HDD: Western Digital Ultrastar DC HC550 18 TB × 6

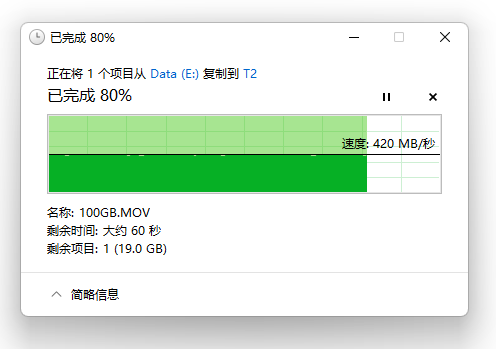

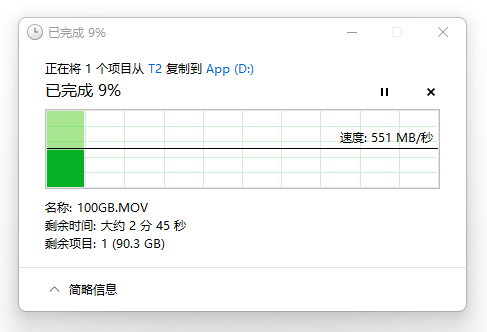

Question: After starting the Samba (SMB) service, write speed only reaches 425 MB/s and read speed only 550 MB/s. How can I get copy speeds up to 10 Gb/s?
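The question mixes file-copy speeds in MB/s with a link speed in Gb/s. The sketch below converts between the two, assuming decimal SI units (1 MB = 10^6 bytes, 1 Gb = 10^9 bits). If the copy tool actually reports binary MiB/s, the Gb/s figures come out roughly 5% higher. The 10 Gb/s figure is the raw line rate, before TCP/IP and SMB overhead.

```python
# Assumption: decimal SI units (1 MB = 10**6 bytes, 1 Gb = 10**9 bits).
# If the client reports binary MiB/s, scale the MB/s inputs by 1.048576 first.

BITS_PER_BYTE = 8


def mb_per_s_to_gb_per_s(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second (decimal units)."""
    return mb_per_s * 10**6 * BITS_PER_BYTE / 10**9


def gb_per_s_to_mb_per_s(gb_per_s: float) -> float:
    """Convert gigabits per second to megabytes per second (decimal units)."""
    return gb_per_s * 10**9 / BITS_PER_BYTE / 10**6


if __name__ == "__main__":
    # Observed SMB copy speeds from the post.
    for label, rate in (("write", 425), ("read", 550)):
        print(f"{label}: {rate} MB/s = {mb_per_s_to_gb_per_s(rate):.1f} Gb/s")
    # Raw 10GbE line rate, before any protocol overhead.
    print(f"10 Gb/s line rate = {gb_per_s_to_mb_per_s(10):.0f} MB/s")
```

By this conversion the writes run at about a third of the 10GbE line rate and the reads at a bit under half of it.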

Description:

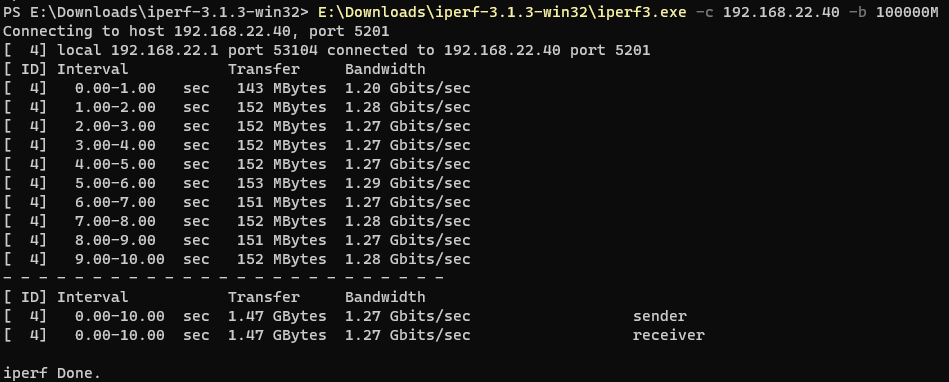

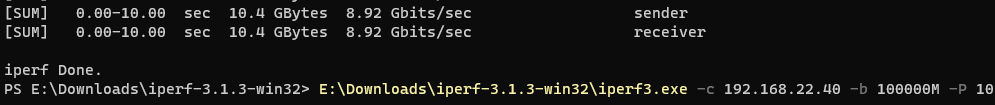

1. With a single thread, iPerf3 only reaches 1.25 Gb/s; with 10 threads it reaches the full 10 Gb/s.

2. Six HDDs in either a stripe or RAIDZ2 only reach 425 MB/s.

3. I tried setting the MTU to 9000, but it made no difference.

4. I tried copying to different HDDs, but the combined rate still only reaches 425 MB/s.
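Item 2 reports that a six-disk stripe and a six-disk RAIDZ2 top out at the same 425 MB/s. As a rough sanity check, the sketch below estimates how much of that each data disk would carry, assuming the load is spread evenly and ignoring parity, padding, and metadata overhead. The function name `per_disk_mb_per_s` is hypothetical. If the per-disk share is well below what one drive can sustain sequentially, the disks alone are probably not the limit.

```python
# Rough per-disk share of pool throughput.
# Assumptions: load spread evenly across data disks; parity computation,
# RAIDZ padding, and metadata overhead are ignored.


def per_disk_mb_per_s(pool_mb_per_s: float, total_disks: int, parity_disks: int = 0) -> float:
    """Return the throughput (MB/s) each data disk would carry."""
    data_disks = total_disks - parity_disks
    if data_disks <= 0:
        raise ValueError("need at least one data disk")
    return pool_mb_per_s / data_disks


if __name__ == "__main__":
    stripe = per_disk_mb_per_s(425, total_disks=6)                  # 6-wide stripe, no parity
    raidz2 = per_disk_mb_per_s(425, total_disks=6, parity_disks=2)  # RAIDZ2: 2 parity disks
    print(f"stripe: {stripe:.1f} MB/s per disk")
    print(f"raidz2: {raidz2:.1f} MB/s per data disk")
```

Because both layouts land on the same pool figure even though their per-disk share differs, the bottleneck may lie outside the disks themselves.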

Other:

1. The test files are stored on a 980 PRO 2 TB disk.

2. The X540 is in a PCIe 4.0 x4 slot.

3. ifconfig output:


    ix0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 9000
        options=e53fbb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_UCAST,WOL_MCAST,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO,RXCSUM_IPV6,TXCSUM_IPV6>
        ether a0:36:9f:5e:eb:eb
        inet 192.168.22.40 netmask 0xffffff00 broadcast 192.168.22.255
        media: Ethernet autoselect (10Gbase-T <full-duplex,rxpause,txpause>)
        status: active
        nd6 options=9<PERFORMNUD,IFDISABLED>
    igb0: flags=8802<BROADCAST,SIMPLEX,MULTICAST> metric 0 mtu 1500
        options=e527bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO,RXCSUM_IPV6,TXCSUM_IPV6>
        ether 70:85:c2:db:e5:3b
        media: Ethernet autoselect
        status: no carrier
        nd6 options=1<PERFORMNUD>
    lo0: flags=8049<UP,LOOPBACK,RUNNING,MULTICAST> metric 0 mtu 16384
        options=680003<RXCSUM,TXCSUM,LINKSTATE,RXCSUM_IPV6,TXCSUM_IPV6>
        inet6 ::1 prefixlen 128
        inet6 fe80::1%lo0 prefixlen 64 scopeid 0x3
        inet 127.0.0.1 netmask 0xff000000
        groups: lo
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
    pflog0: flags=0<> metri
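The X540 is a PCIe 2.1 controller, so in the PCIe 4.0 x4 slot mentioned above the link is assumed to train at Gen2 speed with 4 lanes, limited by the card rather than the slot. The sketch below estimates per-direction PCIe bandwidth after line coding only (no packet or protocol overhead), to check whether that link could cap a 10 Gb/s port. The table and function name are illustrative; verify the negotiated link speed and width on the actual system.

```python
# Per-direction PCIe bandwidth after line coding (no TLP/packet overhead).
# Assumption: the X540 (PCIe 2.1 controller) trains at Gen2 x4 in this slot.

# generation -> (transfer rate in GT/s per lane, line-code efficiency)
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),     # 8b/10b encoding
    2: (5.0, 8 / 10),     # 8b/10b encoding
    3: (8.0, 128 / 130),  # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def pcie_gbit_per_s(generation: int, lanes: int) -> float:
    """Return per-direction bandwidth in Gb/s after line coding."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    return rate_gt_s * efficiency * lanes


if __name__ == "__main__":
    link = pcie_gbit_per_s(generation=2, lanes=4)
    print(f"PCIe 2.0 x4: {link:.1f} Gb/s per direction vs 10 Gb/s Ethernet")
```

Under these assumptions a Gen2 x4 link gives about 16 Gb/s per direction before packet overhead, so the slot width by itself should not hold the port far below 10 Gb/s.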

Thanks for reading, and for any help!