aran kaspar (Explorer, joined Mar 24, 2014)

Special thanks to mav@ and jpaetzel, who should know they helped me considerably in completing this. Thanks for your posts and replies on the forums.

The ESXi/FreeNAS lab is complete.

This can be used as Direct Attached Storage in either a Point-to-Point or an Arbitrated Loop topology (2 or 3 nodes).

It should also be usable in a SAN environment with an FC fabric.

Here is a short guide for anyone who is looking to set this up.

----------------------------------------------------------------------------------------------------------------------------------------

FreeNAS Target side

1. Install the right version

- FreeNAS 9.3 BETA (current as of writing): install instructions here!

QLogic recommends manually setting the port speed in your HBA BIOS.

(Yes, reboot and press Alt+Q when prompted to open the HBA setup screen.)

- I'm using two QLogic QLE2462 HBAs (one for each server), 4 Gbps max.

- Each card has 2 ports, and I have both cables plugged in (not required).

- Check that the firmware/driver loaded for the card: the port status light should be solid after a full boot.

- On my QLE2462, solid orange = 4 Gbps link; check your HBA manual for its color codes.

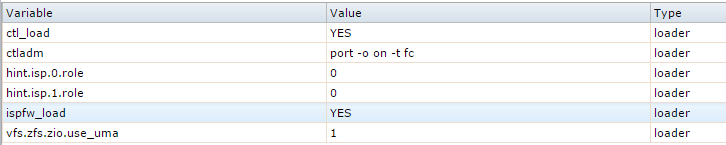

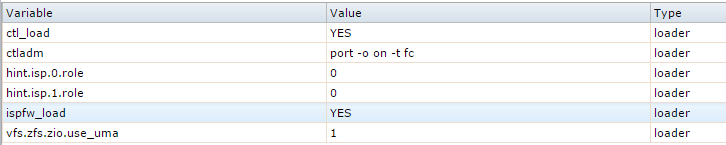

Go to the "System" section and add these Tunables:

- Variable: ispfw_load, Value: YES, Type: loader (start HBA firmware?)

- Variable: ctl_load, Value: YES, Type: loader (start ctl service?)

- Variable: hint.isp.0.role, Value: 0 (zero), Type: loader (target mode FC port 1?)

- Variable: hint.isp.1.role, Value: 0 (zero), Type: loader (target mode FC port 2?)

- Variable: ctladm, Value: "port -o on -t fc", Type: loader (bind the ports?)

Go to the "Tasks" section and add this script:

- Type: command, Command: "ctladm port -o on -t fc", When: Post Init
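For reference, the Tunables above can be kept in one list and written out as loader.conf-style name="value" lines. The Python sketch below does just that. It assumes loader-type Tunables map to lines of this form, and the names TUNABLES, POST_INIT_COMMAND and render_loader_conf are hypothetical, not part of FreeNAS. The purpose notes are the guide's own, question marks included.

```python
# Hypothetical sketch: the guide's Tunables rendered as loader.conf-style
# lines (name="value"). That FreeNAS writes them in exactly this form is
# an assumption made for illustration.

TUNABLES = [
    # (variable, value, purpose as noted in the guide)
    ("ispfw_load", "YES", "start HBA firmware?"),
    ("ctl_load", "YES", "start ctl service?"),
    ("hint.isp.0.role", "0", "target mode FC port 1?"),
    ("hint.isp.1.role", "0", "target mode FC port 2?"),
    ("ctladm", "port -o on -t fc", "bind the ports?"),
]

# The Post Init command added under "Tasks".
POST_INIT_COMMAND = "ctladm port -o on -t fc"


def render_loader_conf(tunables):
    """Return one quoted assignment per tunable, with its purpose as a comment."""
    lines = []
    for name, value, purpose in tunables:
        lines.append(f'{name}="{value}"  # {purpose}')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_loader_conf(TUNABLES), end="")
    print("Post Init command:", POST_INIT_COMMAND)
```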

5. Enable iSCSI and then configure LUNs

Enable the iSCSI service and create the following:

- Create a portal (do not select a specific IP; select 0.0.0.0).

- Create an initiator (ALL, ALL).

- Create a target (select your only portal and your only initiator) and give it a name (it doesn't matter much what).

- Create an extent (a Device extent uses a block device, a File extent uses a file on the ZFS volume of your choice). Research these!

- Create an associated target (choose any LUN # from the list, then link the target and the extent).
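The objects in step 5 depend on each other: the target points at the portal and initiator, and the associated target ties a target to an extent under a LUN number. A minimal Python sketch of that relationship, assuming made-up class and field names (this is not FreeNAS's internal schema):

```python
from dataclasses import dataclass, field

# Hypothetical model of the step 5 objects and how they link together.
# Class and field names are illustrative only.


@dataclass(frozen=True)
class Portal:
    listen_ip: str = "0.0.0.0"  # listen on all addresses, as the guide says


@dataclass(frozen=True)
class Initiator:
    initiators: str = "ALL"
    authorized_network: str = "ALL"


@dataclass(frozen=True)
class Target:
    name: str
    portal: Portal
    initiator: Initiator


@dataclass(frozen=True)
class Extent:
    name: str
    kind: str  # "File" or "Device"
    path: str


@dataclass
class TargetConfig:
    targets: list = field(default_factory=list)
    extents: list = field(default_factory=list)
    # (target name, LUN number) -> Extent
    associations: dict = field(default_factory=dict)

    def associate(self, target: Target, lun: int, extent: Extent) -> None:
        """Link an existing target and extent under a LUN number."""
        if target not in self.targets:
            raise ValueError(f"unknown target {target.name!r}")
        if extent not in self.extents:
            raise ValueError(f"unknown extent {extent.name!r}")
        key = (target.name, lun)
        if key in self.associations:
            raise ValueError(f"LUN {lun} is already used on {target.name!r}")
        self.associations[key] = extent


if __name__ == "__main__":
    target = Target("fc-target", Portal(), Initiator())
    extent = Extent("CSV1", "File", "Vol1/data/extents/CSV1")
    cfg = TargetConfig(targets=[target], extents=[extent])
    cfg.associate(target, 0, extent)
    print(cfg.associations)
```

Keeping the association as its own mapping mirrors the GUI: targets and extents exist independently, and the associated target is just the link between them.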

If creating a File extent:

Choose "File" and select a pool or dataset from the drop-down tree.

Then append a slash to the end of the path and type the name of the file extent to be created, e.g. "Vol1/data/extents/CSV1".

If creating a Device extent:

Choose "Device" and select a zvol (it must be a zvol, not a dataset).

BE SURE TO SELECT "Disable Physical Block Size Reporting".

[ It took me days to figure out why I could not move my VMs' folders over to the new ESXi FC datastore... ]

[ The copies always failed halfway through, and it was due to the block size of the disk. Turning physical block size reporting off (checking this box) fixed it. ]
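The extent rules above boil down to two small checks. This is a hypothetical Python sketch: the caller says whether the backing object is a dataset or a zvol, the function names are illustrative, and nothing here talks to ZFS or the FreeNAS GUI.

```python
# Hypothetical checks for the two extent types. Whether the backing object
# is a dataset or a zvol is supplied by the caller; nothing is queried.


def file_extent_path(parent: str, file_name: str) -> str:
    """Build a File extent path: selected pool/dataset + "/" + new file name."""
    if not file_name or "/" in file_name:
        raise ValueError("file name must be a single path component")
    return parent.rstrip("/") + "/" + file_name


def device_extent_problems(backing_kind: str, disable_pblock_reporting: bool) -> list:
    """Return a list of problems with a Device extent (empty list if OK)."""
    problems = []
    if backing_kind != "zvol":
        problems.append("a Device extent must be backed by a zvol")
    if not disable_pblock_reporting:
        problems.append('select "Disable Physical Block Size Reporting"')
    return problems


if __name__ == "__main__":
    print(file_extent_path("Vol1/data/extents", "CSV1"))
    print(device_extent_problems("dataset", False))
    print(device_extent_problems("zvol", True))
```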

REBOOT!

Now sit back and relax: your Direct Attached Storage is set up as a target. The hard part is done.

---------------------------------------------------------------------------------------------------------------------------------------------

ESXi Hypervisor Initiator side

1. Check that your FC card is installed and shows up

Go to Configuration > Storage Adapters, click "Rescan All", and select your Fibre Channel card to check its availability.

If you don't see your card, make sure you have installed its drivers on ESXi (a total PITA if done manually; google it).

2. Adding the storage to ESXi in vSphere (VMFS vs. Raw SCSI)

You can now use your FC extent to create a VMFS datastore (VMFS = VMware File System).

As you know, this lets you store the files of multiple VMs on it like a directly connected drive, but with greater capacity and I/O.

Just use "Add Storage" as usual, pick the Fibre Channel disk it should have found during the scan, and you're done.

That worked fine for me, but I personally think the fewer file systems between the server and the storage, the better. If I can eliminate one, there should theoretically be somewhat less overhead.

(Your input and experience here are always welcome.)

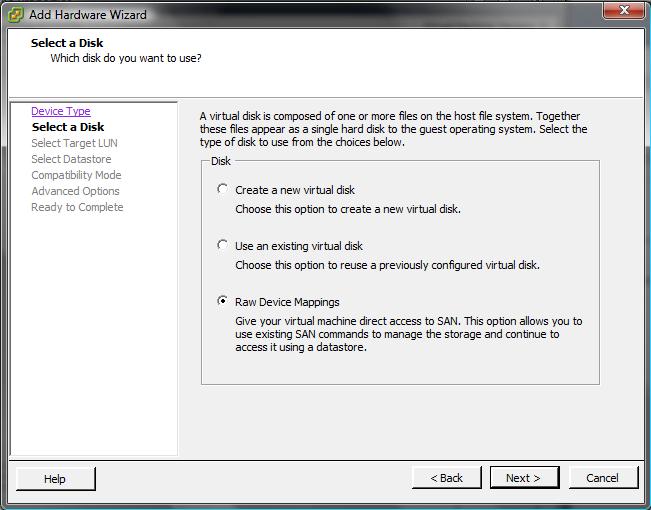

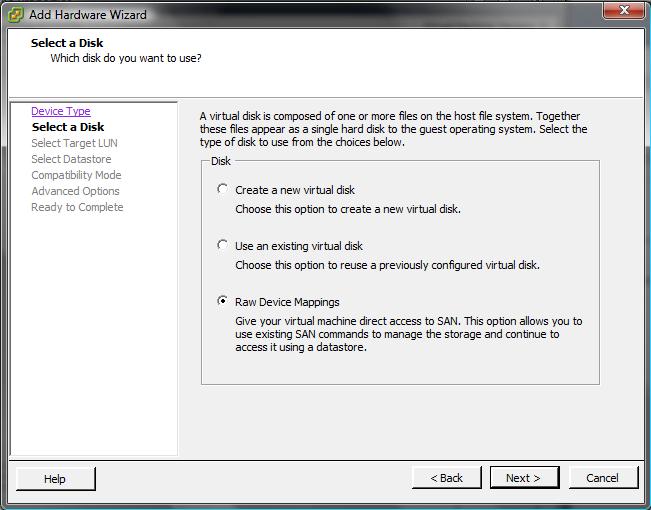

If you want true block-level access to the FC LUN (set up through FreeNAS's iSCSI screens), you can use VMware's pass-through feature, "Raw Device Mapping" (RDM).

This way, you present the LUN you made to a single VM as a raw SCSI hard disk.

To the best of my knowledge, this is much like connecting a SATA drive directly to the bus on a motherboard.

Unfortunately, with this method you can only present it to one VM, but it should give a more direct, block-level route to the storage.

With this method, I would rinse and repeat the steps above, dicing up your FreeNAS zvols into LUNs for each additional VM as needed.
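To picture "dicing up" zvols into one LUN per VM, here is a hypothetical Python planner that hands out consecutive LUN numbers and refuses to allocate more than the free space. The VM names, sizes (GiB) and free-space figure are made-up inputs, and the sketch ignores ZFS overhead and headroom.

```python
# Hypothetical planner: one zvol per VM, each exported as its own LUN.
# All inputs are made-up sizes in GiB; ZFS overhead is ignored.


def plan_luns(free_gib: int, vm_sizes: dict, first_lun: int = 0) -> dict:
    """Map each VM name to (LUN number, zvol size in GiB).

    VMs get consecutive LUN numbers in alphabetical order. Raises ValueError
    if the requested sizes add up to more than the free space.
    """
    total = sum(vm_sizes.values())
    if total > free_gib:
        raise ValueError(f"requested {total} GiB but only {free_gib} GiB free")
    plan = {}
    for offset, (vm, size) in enumerate(sorted(vm_sizes.items())):
        plan[vm] = (first_lun + offset, size)
    return plan


if __name__ == "__main__":
    print(plan_luns(500, {"web": 100, "db": 200}))
```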

- Adding a raw disk in ESXi (optional)

Open "Edit Settings" for your VM. When you add a new hard disk, you will now see that the disk type "Raw Device Mappings" is no longer grayed out. Use this for your VM.

Remember, it will be dedicated to this VM guest only.

Multi-Port HBAs

Research MPIO for performance advantages and redundancy.

ESXi also has load balancing for VMFS datastores. I'm not entirely sure how advantageous it is, but feel free to experiment; I think you need extremely fast SSDs for it to matter. To set it up, create an ESXi datastore, right-click it, select "Properties..." and click "Manage Paths...".

- Change the "Path Selection" menu to Round Robin to load-balance with failover across both ports.

- Click the "Change" button and "OK" out of everything.
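Round Robin path selection with failover can be pictured with a simplified Python model: I/Os rotate across the live ports, and a failed port is skipped until it comes back. This illustrates the idea only and is not VMware's path selection plugin. The port names and the ios_per_switch setting (how many I/Os go down one path before rotating) are assumptions.

```python
# Simplified model of Round Robin path selection with failover.
# Illustration only: not VMware's implementation. Path names and
# ios_per_switch are hypothetical.


class RoundRobinPaths:
    def __init__(self, paths, ios_per_switch=1):
        if not paths:
            raise ValueError("need at least one path")
        self.paths = list(paths)
        self.alive = {p: True for p in self.paths}
        self.ios_per_switch = ios_per_switch  # I/Os sent down a path before rotating
        self._index = 0
        self._ios_on_current = 0

    def mark_dead(self, path):
        """Simulate a link failure (e.g. a pulled cable) on one port."""
        self.alive[path] = False

    def mark_alive(self, path):
        """Simulate the port coming back."""
        self.alive[path] = True

    def next_path(self):
        """Return the path for the next I/O, skipping dead paths."""
        if not any(self.alive.values()):
            raise RuntimeError("all paths are down")
        current_dead = not self.alive[self.paths[self._index]]
        if current_dead or self._ios_on_current >= self.ios_per_switch:
            self._advance()
        self._ios_on_current += 1
        return self.paths[self._index]

    def _advance(self):
        # Step to the next live path in order, wrapping around the list.
        for _ in range(len(self.paths)):
            self._index = (self._index + 1) % len(self.paths)
            if self.alive[self.paths[self._index]]:
                break
        self._ios_on_current = 0


if __name__ == "__main__":
    rr = RoundRobinPaths(["port1", "port2"])
    print([rr.next_path() for _ in range(4)])
    rr.mark_dead("port2")
    print([rr.next_path() for _ in range(3)])
```

With two healthy ports the calls alternate port1, port2, port1, port2. After mark_dead("port2"), every call returns port1 until the port is marked alive again.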

Please reply if this helped you! I've been trying to get this working for almost 6 months! Thanks again to everyone on the forum.

Tags: fiber channel, direct attached storage, storage area network, DAS, SAN, FC, LUN
