skyline65

Explorer

- Joined

- Jul 18, 2014

- Messages

- 95

A quick question, I hope.

I have a 24-bay FreeNAS server made up of:

Media 1: 6x WD Red 3TB, RAIDZ2

Media 2: 4x WD Red 3TB, RAIDZ2

Server: 4x WD Red 3TB, RAIDZ2 - General stuff that I have backed up elsewhere and don't want cluttering my Mac.

Backup: 4x WD Red 3TB, RAIDZ2 - Backup of my computer and all my work

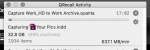

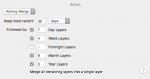

I use QRecall backup software on my Mac, which creates large archives of my 4 work drives, between 400GB and 1TB. Backing up is pretty quick as it de-dupes on the fly. The issue is that a verify or compact takes ages, although it is much quicker now that I'm on 10GbE! Is there any way to add extra speed to the process? I'm not in desperate need, as it can run while I'm asleep. However, as with all FreeNAS users, there is an itch to see if I can improve performance without breaking the bank.

X11SSL-CF motherboard, i3-6100, 64GB ECC RAM, Chelsio T420, LSI 9201
