leenux_tux


Hello forum,

I have been getting the dreaded "unreadable (pending) sectors" warning for two of my hard drives (10 disks, RAIDZ2), so I am in the process of making sure my data is backed up by sending ZFS snapshots to a second server. The second server runs Proxmox with 3 x 3 TB drives using ZFS, and I back up to these drives regularly from my FreeNAS system. Here is the (possibly?) strange thing: file access speed has dropped significantly. I recently ran a scrub on the FreeNAS box and it took 113 hours! Normally it takes single digits, 8 hours or so. On top of that, "zfs send -i" (incremental snapshots) to my backup machine is taking much longer than normal.

Do "unreadable (pending) sectors" reduce file system read/write speed?

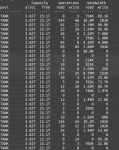

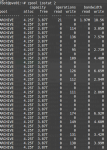

I have attached a couple of "zpool iostat 2" output screen grabs which show how dismal the read speed is (FREENAS_SOURCE) and how bad the write speed is (DESTINATION).

Also, I forgot to mention that these two systems are connected via a D-Link switch and, according to the link lights on the switch, are connected at 1 Gbit/s.
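For a rough sanity check on the numbers above, here is a minimal sketch in Python. It assumes the link really negotiates 1 Gbit/s and ignores all protocol overhead, so the result is only an upper bound. The function names are made up for illustration and are not part of any tool.

```python
def link_ceiling_mb_per_s(link_gbit_per_s: float) -> float:
    """Theoretical ceiling of a link in MB/s (decimal units).

    Assumption: no Ethernet/TCP/SSH overhead, so real transfers will be lower.
    """
    return link_gbit_per_s * 1000 / 8


def slowdown_factor(current_hours: float, normal_hours: float) -> float:
    """How many times longer the current run took than a normal one."""
    return current_hours / normal_hours


if __name__ == "__main__":
    # 1 Gbit/s link between the FreeNAS box and the Proxmox backup server
    print(f"Link ceiling: {link_ceiling_mb_per_s(1.0):.0f} MB/s")
    # 113 hour scrub versus the usual 8 hours or so
    print(f"Scrub slowdown: {slowdown_factor(113, 8):.1f}x")
```

If the read rates in the "zpool iostat 2" output on the source are far below that ceiling, the switch is probably not what is limiting the transfer.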

