outofspace (Cadet, joined Dec 13, 2021, 5 messages)

So I was moving a TrueNAS VM from one server to another and physically broke a drive connector. Not to worry: the pool was raidz2 and I had a cold spare. When I booted up I was still waiting on an SFP module, so I used noVNC through Proxmox to get an ssh session and rebuild the pool (so, no access to the web interface at the time). I used zpool replace to add the new disk, identifying it as /dev/da1. The resilvering went well, and the zpool status command shows the pool as healthy.

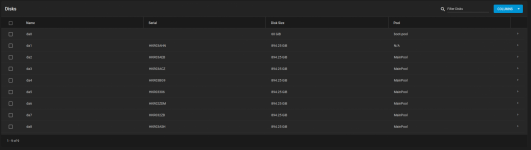

But when I got the network up and running and checked the web interface, da1 was not listed under Disks as being part of the pool (it shows the pool as N/A).

I think maybe I borked something, unless it's normal for the disk to not say it's part of the pool?

How can I fix this?

Output of zpool status:

      pool: MainPool
     state: ONLINE
      scan: resilvered 166G in 01:10:41 with 0 errors on Tue Jan 11 23:27:08 2022
    config:

        NAME                                            STATE     READ WRITE CKSUM
        MainPool                                        ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/06f08580-1b1f-11ec-93e9-c4e90a32f196  ONLINE       0     0     0
            gptid/06fe6b30-1b1f-11ec-93e9-c4e90a32f196  ONLINE       0     0     0
            gptid/072902d8-1b1f-11ec-93e9-c4e90a32f196  ONLINE       0     0     0
            da1                                         ONLINE       0     0     0
            gptid/075f67f5-1b1f-11ec-93e9-c4e90a32f196  ONLINE       0     0     0
            gptid/07ab9c36-1b1f-11ec-93e9-c4e90a32f196  ONLINE       0     0     0
            gptid/07b4b593-1b1f-11ec-93e9-c4e90a32f196  ONLINE       0     0     0
            gptid/07b575ff-1b1f-11ec-93e9-c4e90a32f196  ONLINE       0     0     0
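
The odd one out in that output is the member listed by raw device name (da1) instead of a gptid/ label like the other seven. Here is a rough Python sketch for picking such members out of pasted status text. The function name `members_without_gptid` is made up for illustration, and it assumes the simple single-vdev layout shown above; it is not something TrueNAS or ZFS ships, and it only reads text, it does not touch the pool.

```python
import re

# States that can appear in the STATE column of `zpool status`.
STATES = {"ONLINE", "DEGRADED", "FAULTED", "OFFLINE", "UNAVAIL", "REMOVED"}

# Container vdevs such as raidz2-0 or mirror-1 are groups, not disks.
GROUP_VDEV = re.compile(r"^(raidz[123]?|mirror)-\d+$")


def members_without_gptid(status_text, pool_name):
    """Return leaf devices in the config table that are not gptid/ labels."""
    flagged = []
    in_config = False
    for line in status_text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "NAME":
            # Header row of the config table; device rows follow it.
            in_config = True
            continue
        # Device rows have a name, a state, and three error counters.
        if not in_config or len(fields) < 5 or fields[1] not in STATES:
            continue
        name = fields[0]
        if name == pool_name or GROUP_VDEV.match(name):
            continue
        if not name.startswith("gptid/"):
            flagged.append(name)
    return flagged


sample = """\
  pool: MainPool
 state: ONLINE
config:
    NAME STATE READ WRITE CKSUM
    MainPool ONLINE 0 0 0
    raidz2-0 ONLINE 0 0 0
    gptid/072902d8-1b1f-11ec-93e9-c4e90a32f196 ONLINE 0 0 0
    da1 ONLINE 0 0 0
    gptid/075f67f5-1b1f-11ec-93e9-c4e90a32f196 ONLINE 0 0 0
"""

print(members_without_gptid(sample, "MainPool"))  # ['da1']
```

On the trimmed sample this flags only da1, which is the same disk the web interface shows without a pool.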
