I’ve changed the pool setup on my TrueNAS SCALE box from spinning disks to flash. The main pool will consist of 2x Optane 900p 280GB mirrored for metadata and 6x Samsung QVO 8TB configured as 3 mirrors. Given the cost of the drives, I’ve started out with single disks in each of the vdevs, with the intention of converting them to full mirrors as the system matures.

In preparation for this I wanted to make sure that I have the correct method for attaching drives in SCALE and converting them to mirrors. I’ve created a test setup in VMware Workstation to try the process out and have come up with the following method. It appears to work, however I get a funny result at the end which I’m unsure about.

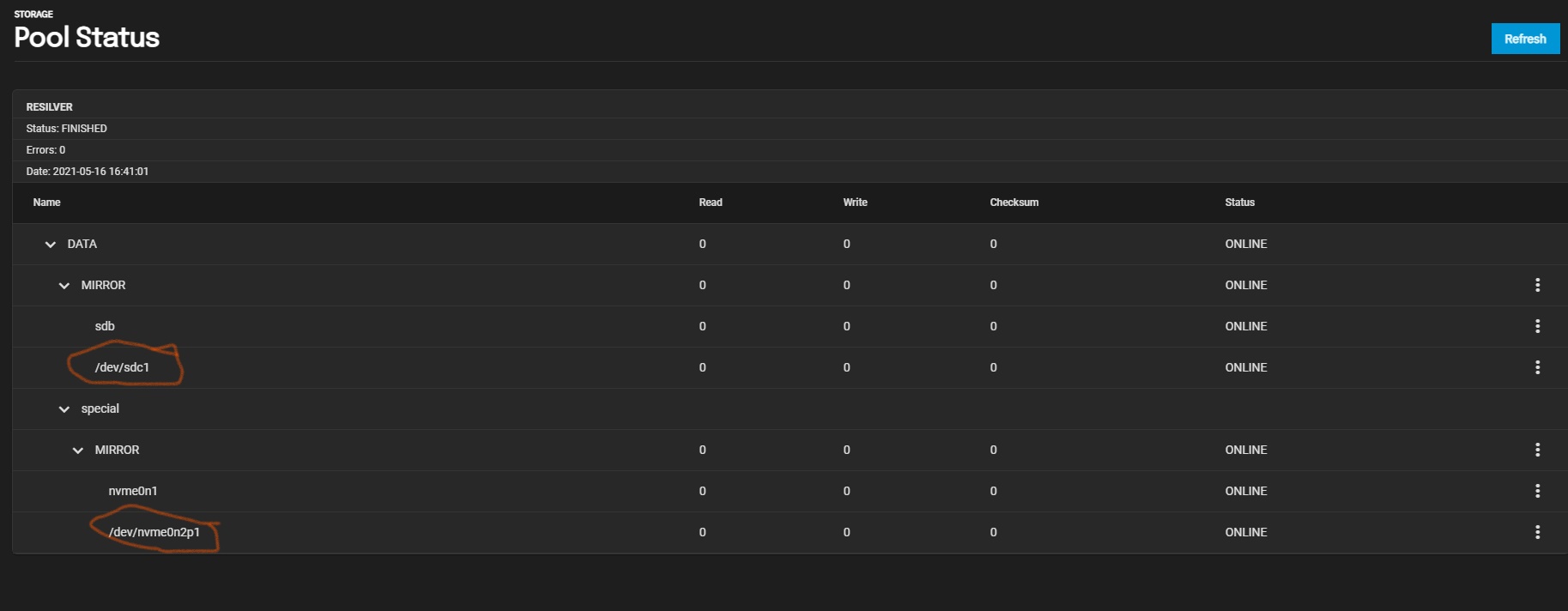

Method:

First I establish the disk names in the pool using zpool status and get the following:

Code:

    truenas# zpool status
      pool: DATA
     state: ONLINE
    config:

        NAME                                    STATE     READ WRITE CKSUM
        DATA                                    ONLINE       0     0     0
          f158728d-26dd-436f-bb4b-254d70ec1140  ONLINE       0     0     0
        special
          64bf4f72-d834-4c21-8361-315908bb0ade  ONLINE       0     0     0

    errors: No known data errors

Using the disk names I run the following commands to attach the disks to each vdev and convert them into mirrors:

Code:

    zpool attach DATA f158728d-26dd-436f-bb4b-254d70ec1140 sdc
    zpool attach DATA 64bf4f72-d834-4c21-8361-315908bb0ade nvme0n2
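For reference, the general form used here is `zpool attach <pool> <existing-device> <new-device>`, where the existing device is the single disk already in the vdev. The sketch below only prints the commands so they can be checked before anything touches the pool. The helper name `print_attach_cmds` is made up for illustration and is not part of ZFS or TrueNAS.

```shell
#!/bin/sh
# Hypothetical dry-run helper: prints (does NOT run) one `zpool attach`
# command per existing/new device pair.
# Usage: print_attach_cmds POOL EXISTING1 NEW1 [EXISTING2 NEW2 ...]
print_attach_cmds() {
  pool=$1
  shift
  # Consume the remaining arguments two at a time.
  while [ "$#" -ge 2 ]; do
    printf 'zpool attach %s %s %s\n' "$pool" "$1" "$2"
    shift 2
  done
}

# The two pairs from the test VM in this post.
print_attach_cmds DATA \
  f158728d-26dd-436f-bb4b-254d70ec1140 sdc \
  64bf4f72-d834-4c21-8361-315908bb0ade nvme0n2
```

Once the printed lines look right they can be pasted into the shell one at a time, checking `zpool status` after each.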

When I run zpool status again I get the following:

Code:

    truenas# zpool status
      pool: DATA
     state: ONLINE
      scan: resilvered 8.22M in 00:00:01 with 0 errors on Sun May 16 08:41:02 2021
    config:

        NAME                                      STATE     READ WRITE CKSUM
        DATA                                      ONLINE       0     0     0
          mirror-0                                ONLINE       0     0     0
            f158728d-26dd-436f-bb4b-254d70ec1140  ONLINE       0     0     0
            sdc                                   ONLINE       0     0     0
        special
          mirror-1                                ONLINE       0     0     0
            64bf4f72-d834-4c21-8361-315908bb0ade  ONLINE       0     0     0
            nvme0n2                               ONLINE       0     0     0

    errors: No known data errors

When looking in Pool Status I can see two disks under each vdev and they are described as mirrors, however the new disks have /dev/ before them in the description. Have I screwed something up here?
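One difference visible in the second `zpool status` output is that the original disks are listed by a UUID-style name while the disks I attached appear under their kernel names (`sdc`, `nvme0n2`). I don't know whether that is what Pool Status is reacting to, but to make the difference easy to spot, here is a minimal sketch that reads `zpool status` text on stdin and prints the leaf devices that are not UUID-style names. The function name `non_uuid_members` is my own, and the parsing assumes the column layout shown above.

```shell
#!/bin/sh
# Hypothetical helper: list pool members whose name is not a 36-character
# UUID-style identifier. Reads `zpool status` output on stdin.
non_uuid_members() {
  awk '
    # The header row marks the start of the device table.
    $1 == "NAME" && $2 == "STATE" { in_config = 1; first = 1; next }
    $1 == "errors:"               { in_config = 0 }
    # Ignore everything outside the table and rows without counters
    # (blank lines, class labels such as "special").
    !in_config || NF < 5          { next }
    # The first table row is the pool itself.
    first                         { first = 0; next }
    # Skip vdev group rows.
    $1 ~ /^(mirror|raidz|draid)/  { next }
    # Skip UUID-style names (36 chars of lowercase hex digits and dashes).
    length($1) == 36 && $1 ~ /^[0-9a-f-]+$/ { next }
    { print $1 }
  '
}

# Example (run on the NAS itself):
#   zpool status DATA | non_uuid_members
```

Against the output above this lists `sdc` and `nvme0n2`, i.e. exactly the two disks I attached by hand.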

System as follows:

Code:

    OS: TrueNAS-SCALE-21.04-ALPHA.1
    HPE ML30 Gen10
    CPU: Intel(R) Xeon(R) CPU E-2224
    MEM: 64GB (2x32GB ECC)
    OS Drive: 1x Intel® Optane 800p 118GB
    Storage: 3x Samsung QVO 8TB (3x Single)
    Storage: 1x Intel® P4600 1.6TB
    META: 2x Intel® Optane 900p 280GB
    NET: Mellanox CX5 25GbE, 2 ports (10GB fibre link, 25GbE DAC to workstation)