wintermuted

Cadet

- Joined: Aug 1, 2023
- Messages: 2

After some furious searching through various forums, I’ve come to the conclusion that there’s no easy answer to this question. If a thread for this already exists, I apologize; I couldn’t find it.

- ASRock Rack D1541D4U-2T8R (flashed to the ASRock BIOS)
- LSI SAS3008 controller (flashed to IT mode with the latest recommended firmware)
- CSE-826 chassis
- BPN-SAS3-EL1-N4 backplane
- 10GbE via the integrated Intel NIC
- TrueNAS CORE 13.0-U5.3

Drives: currently only a few 2.5” Samsung 850 EVO SATA SSDs for testing, but I’m considering:

- 8x 8TB HGST 7200 RPM SAS 12Gb/s drives for large, slow storage
- 4x 3.84TB U.2 NVMe SSDs (or SAS SSDs as a fallback if U.2 isn’t an option on this platform)

Available PCIe on the motherboard: two x8 slots, both PCIe 3.0, with no bifurcation support.

When I started out, I had intended to build on a Supermicro platform, but I ended up with a windfall: this board in its original chassis for a pretty low sum off Craigslist.

So far I’ve connected a dual link (unnecessary, I know, but I have the ports and cables are cheap) from my LSI SAS3008 on the ASRock Rack D1541D4U-2T8R to the BPN-SAS3-EL1-N4 backplane I installed in the CSE-826 chassis.

All drive bays appear to function normally, and I haven’t had any issues running SATA drives off the standard SAS ports so far.

But I want something in the territory of four SSDs to balance capacity and performance, likely in separate pools for different tasks:

- 3.84TB SSDs: 4-wide RAIDZ1
- 8TB HGST HDDs: 8-wide RAIDZ2
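As a rough sanity check on those two layouts, here is a minimal Python sketch of usable capacity per RAIDZ vdev. It assumes the simple (drives - parity) x drive size rule and ignores ZFS metadata, allocation padding and slop space, so real usable space will come in lower; the function names are made up for illustration.

```python
def raidz_usable_tb(width: int, parity: int, drive_tb: float) -> float:
    """Approximate data capacity of one RAIDZ vdev: (width - parity) * drive size.

    Assumption: ignores ZFS metadata, allocation padding and slop space,
    so the real usable figure will be lower.
    """
    if parity not in (1, 2, 3):
        raise ValueError("RAIDZ parity must be 1, 2 or 3")
    if width <= parity:
        raise ValueError("a vdev needs more drives than parity disks")
    return (width - parity) * drive_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (how drives are sold) to binary TiB."""
    return tb * 10**12 / 2**40


if __name__ == "__main__":
    layouts = {
        "SSD pool, 4-wide RAIDZ1 of 3.84TB": (4, 1, 3.84),
        "HDD pool, 8-wide RAIDZ2 of 8TB": (8, 2, 8.0),
    }
    for name, (width, parity, size) in layouts.items():
        tb = raidz_usable_tb(width, parity, size)
        print(f"{name}: ~{tb:.2f} TB (~{tb_to_tib(tb):.2f} TiB) before overhead")
```

Under those assumptions the SSD pool holds about 11.5 TB of data and the HDD pool about 48 TB, before any ZFS overhead.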

Most of my workload will be sporadic large sequential writes followed by more frequent, smaller sequential reads. Latency matters, though, and outside of a few specific tasks I’d rather not rely on ARC or L2ARC to speed up the spinning drives. (I might use the second PCIe slot for a pair of 110mm M.2 drives for that purpose.)

After some intense searching for SAS-3 SSDs, I concluded that most of those in my price range likely have 40k+ power-on hours and would still cost about as much as U.2 SSDs with 600-1000 hours and significantly less TBW (terabytes written) under their belt.
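When comparing used drives, power-on hours and total data written can be read from SMART. Below is a minimal sketch, assuming the JSON layout that `smartctl -j -a /dev/nvme0` produces in smartmontools 7.x, where the NVMe health log sits under `nvme_smart_health_information_log`; check the key names against your version. The helper name is hypothetical and the sample values are invented, not taken from a real drive.

```python
import json

# The NVMe spec reports "Data Units Written" in units of 1,000 x 512 bytes.
BYTES_PER_DATA_UNIT = 512 * 1000


def nvme_wear_summary(smartctl_json: str) -> dict:
    """Summarize wear from `smartctl -j -a /dev/nvmeX` output.

    Assumption: health data lives under "nvme_smart_health_information_log",
    as in recent smartmontools JSON output.
    """
    log = json.loads(smartctl_json)["nvme_smart_health_information_log"]
    written_tb = log["data_units_written"] * BYTES_PER_DATA_UNIT / 10**12
    return {
        "power_on_hours": log["power_on_hours"],
        "written_tb": round(written_tb, 2),
        "percentage_used": log["percentage_used"],
    }


if __name__ == "__main__":
    # Hypothetical sample for a lightly used drive, not real data.
    sample = json.dumps({
        "nvme_smart_health_information_log": {
            "power_on_hours": 800,
            "data_units_written": 40_000_000,
            "percentage_used": 1,
        }
    })
    print(nvme_wear_summary(sample))
```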

Which brings me to my question: what’s the best option here for U.2?

1.

I’ve seen LSI 9400-16i cards, even some from server suppliers, to the tune of 300 USD, but they need Broadcom-branded U.2 enabler cables to run from the card’s SFF-8643 connectors to the white SFF-8643 connectors on the backplane (another 80-100 dollars each, x2). On top of that, I’ve seen people claim the 9400 just isn’t reliable, and I don’t need Tri-Mode at all at the moment; I just need NVMe. I’d rather not spend 500 on a setup that provides functionality I simply don’t need.

If anyone has had reliable results with U.2 on a 9400 under TrueNAS, I’m all ears.

2.

I’ve seen the Supermicro AOC-SLG3-4E2P (150-300 depending on the source, though I’ve seen some insane deals). It seems like the best option: it uses OCuLink-to-SFF-8643 cables available straight from Supermicro at about 26 dollars each, so even at full price it saves me about 100, and it should work out of the box. And IIRC I’ve seen good reports on this card from people doing what I’m attempting.

It does all of this with PCIe switching on an x8 slot, which is ideal for my current build… The problem is that Supermicro CLAIMS these cards only work on Supermicro-approved boards. Is that accurate, or are they merely only *supported* on Supermicro platforms?
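The price reasoning behind options 1 and 2 can be laid out as a small worked comparison. The card and cable prices are the ones quoted in the post; the count of two cables per option is an assumption taken from the "x2" in option 1, so adjust `cables` if the backplane turns out to need one cable per drive.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    card_usd: tuple[float, float]   # (low, high) card price from the post
    cable_usd: tuple[float, float]  # (low, high) price per cable
    cables: int = 2                 # assumption: two cables per option

    def total_usd(self) -> tuple[float, float]:
        """Return the (low, high) total cost of card plus cables."""
        low = self.card_usd[0] + self.cables * self.cable_usd[0]
        high = self.card_usd[1] + self.cables * self.cable_usd[1]
        return low, high


tri_mode = Option("LSI 9400-16i + Broadcom U.2 enabler cables", (300, 300), (80, 100))
aoc = Option("Supermicro AOC-SLG3-4E2P + OCuLink cables", (150, 300), (26, 26))

if __name__ == "__main__":
    for opt in (tri_mode, aoc):
        low, high = opt.total_usd()
        print(f"{opt.name}: ${low:.0f}-${high:.0f}")
    # Worst case for the Supermicro card (full price) against the 9400 range.
    full_price = aoc.total_usd()[1]
    print(f"Savings at full price: ${tri_mode.total_usd()[0] - full_price:.0f}"
          f"-${tri_mode.total_usd()[1] - full_price:.0f}")
```

With those inputs the 9400 route lands at 460-500 dollars and the Supermicro route at 202-352, so even at full price the AOC card comes out roughly 108-148 dollars cheaper, in line with the "saves me 100" estimate.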

3.

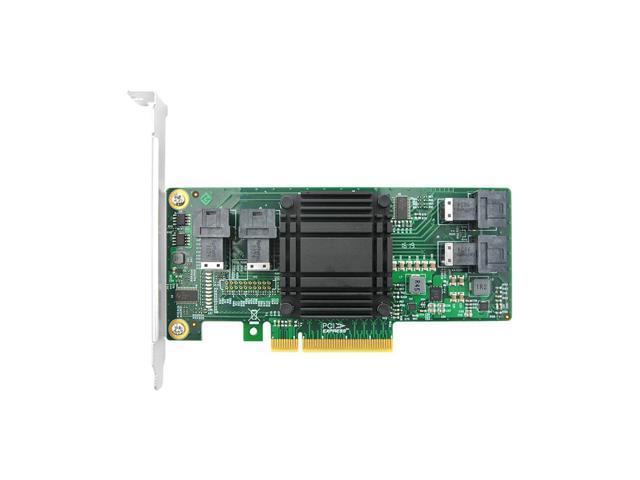

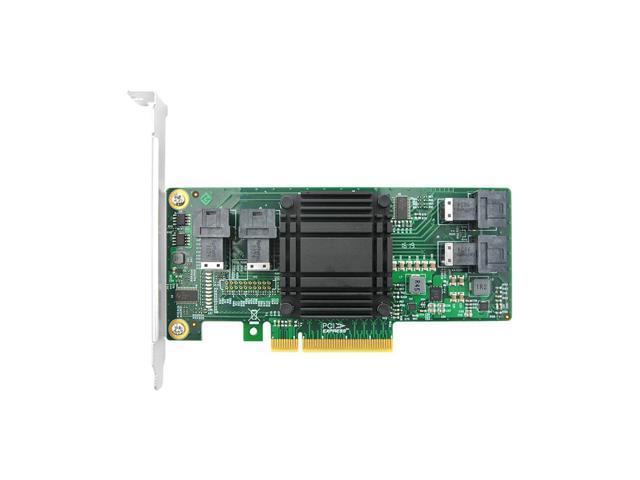

The final option, which I know will draw ire, is to buy a Linkreal 4-port U.2 NVMe PCIe card. It should offer U.2 connections, but I can’t be certain which cables to use or whether the drives would even be recognized through the Supermicro backplane. Still, I’ve seen a ton of people use similar hardware with these controllers, and from this vendor, with success… just not necessarily on TrueNAS or with this backplane.

It uses a PLX PCIe switch chip and claims support for Linux and FreeBSD 9/10/11. I’m running the latest stable build, TrueNAS CORE 13.0-U5.3, which is based on FreeBSD 13. I wouldn’t be opposed to looking into SCALE if it let me use U.2 NVMe storage reliably.

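Linkreal 4 Port PCI Express x8 to U.2 NVMe SSD Adapter Riser Card with SFF-8643 Mini-SAS HD 36 Pin Connector and Chipset PLX8725 (www.newegg.com)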
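Whichever card ends up in the slot, it helps to confirm that every drive behind the switch is detected and has negotiated its full link. Here is a minimal sketch for TrueNAS SCALE (Linux), assuming the standard sysfs layout where each `/sys/class/nvme/nvmeN` has a `model` file and a `device` link to the PCI function exposing `current_link_width` and `current_link_speed`. On TrueNAS CORE (FreeBSD), `nvmecontrol devlist` and `pciconf -lc` should cover the same ground. The function names are illustrative, and the "expect x4" check is an assumption about typical U.2 drives.

```python
from pathlib import Path


def _read(path: Path) -> str:
    """Return a sysfs attribute's contents, or 'unknown' if it is missing."""
    try:
        return path.read_text().strip()
    except OSError:
        return "unknown"


def nvme_links(sysfs_root: str = "/sys/class/nvme") -> list[dict]:
    """List NVMe controllers with model and negotiated PCIe link (Linux only)."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    controllers = []
    for ctrl in sorted(root.iterdir()):
        if not ctrl.name.startswith("nvme"):
            continue
        pci = ctrl / "device"  # symlink to the PCI function on real systems
        controllers.append({
            "name": ctrl.name,
            "model": _read(ctrl / "model"),
            "width": _read(pci / "current_link_width"),
            "speed": _read(pci / "current_link_speed"),
        })
    return controllers


if __name__ == "__main__":
    for c in nvme_links():
        # Assumption: a U.2 drive behind the switch should normally report x4.
        flag = "" if c["width"] == "4" else "  <-- not x4, check cabling/slot"
        print(f'{c["name"]}: {c["model"]}, x{c["width"]} @ {c["speed"]}{flag}')
```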

The last issue is that my two x8 slots don’t support bifurcation, so *simple* x8-to-dual-OCuLink cards without PCIe switching are unfortunately out of the question.
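The lane math behind the bifurcation problem: four U.2 drives at x4 each want 16 lanes, but each slot only has 8, so a switch card has to share one x8 uplink among the drives. A minimal sketch of that arithmetic, using the PCIe 3.0 rate of 8 GT/s per lane with 128b/130b encoding and ignoring packet overhead; the function names are illustrative.

```python
# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b encoding.
PCIE3_TRANSFERS_PER_S = 8e9
PCIE3_ENCODING_EFFICIENCY = 128 / 130


def pcie3_mb_per_s(lanes: int) -> float:
    """Per-direction bandwidth in MB/s after encoding, before packet overhead."""
    return lanes * PCIE3_TRANSFERS_PER_S * PCIE3_ENCODING_EFFICIENCY / 8 / 1e6


def oversubscription(upstream_lanes: int, drives: int, lanes_per_drive: int = 4) -> float:
    """Ratio of downstream lanes (drives behind a switch) to upstream slot lanes."""
    return drives * lanes_per_drive / upstream_lanes


if __name__ == "__main__":
    print(f"x8 slot uplink: ~{pcie3_mb_per_s(8):.0f} MB/s per direction")
    print(f"One U.2 drive at x4: ~{pcie3_mb_per_s(4):.0f} MB/s")
    print(f"4 drives behind an x8 switch: {oversubscription(8, 4):.1f}:1 oversubscribed")
```

Even at 2:1 oversubscription, the x8 uplink (roughly 7.9 GB/s per direction) is far more than a single 10GbE link can carry.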

If it were simply a matter of spending less money, sure, I could get used SAS SSDs, since at the moment I’m only trying to saturate a 10GbE link to a single client, perhaps two. And my use case may not even need that much bandwidth all the time.
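For the 10GbE goal, one rough way to compare drive types is to count how many devices’ worth of interface bandwidth it takes to match the link. This sketch uses nominal line rates after link encoding (8b/10b for SATA and SAS-3, 128b/130b for PCIe 3.0) and ignores Ethernet/TCP and storage protocol overhead. These are interface ceilings, not what any particular drive sustains, and RAIDZ parity changes the real picture.

```python
import math

# Nominal data rates in MB/s after line encoding, before protocol overhead.
TEN_GBE_MB_S = 10e9 / 8 / 1e6  # 1250 MB/s
INTERFACE_MB_S = {
    "SATA III (6 Gb/s, 8b/10b)": 6e9 * 8 / 10 / 8 / 1e6,             # 600 MB/s
    "SAS-3 (12 Gb/s, 8b/10b)": 12e9 * 8 / 10 / 8 / 1e6,              # 1200 MB/s
    "PCIe 3.0 x4 NVMe (128b/130b)": 4 * 8e9 * 128 / 130 / 8 / 1e6,   # ~3938 MB/s
}


def devices_to_fill(link_mb_s: float, device_mb_s: float) -> int:
    """Minimum number of devices whose combined interface ceiling meets the link."""
    return math.ceil(link_mb_s / device_mb_s)


if __name__ == "__main__":
    for name, rate in INTERFACE_MB_S.items():
        n = devices_to_fill(TEN_GBE_MB_S, rate)
        print(f"{name}: {n} device(s) to match 10GbE ({TEN_GBE_MB_S:.0f} MB/s)")
```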

But as we all know, hardware is expensive, so building an equitable solution from parts that can stand the test of time would be ideal. Even if I later upgrade my motherboard and move to a different LSI controller for the spinning drives, the U.2 solution would ideally be current enough to carry over to whatever platform I’m on then.

TL;DR: I’d like to look into U.2 but don’t have a Supermicro motherboard. I’d like to avoid SAS SSDs with 50k power-on hours, and since SAS SSDs are quickly being replaced by NVMe, I don’t want to end up with a failed drive and no replacement options. U.2, by contrast, seems abundant and shows no signs of slowing down.

And unless someone has a great suggestion for SATA SSDs that don’t slow down to 70MB/s after a couple of gigs of writes, I’d really rather not go down that road. I’m trying to avoid consumer hardware as much as possible for this system once it’s in production.
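To put the 70MB/s slowdown in perspective for large sequential writes, here is a simple two-speed model: data lands in a fast cache first, then the drive drops to its sustained rate. Only the 70MB/s figure comes from the post; the 2 GB cache and 500 MB/s burst rate are hypothetical placeholders, and the model ignores the cache recovering during idle time.

```python
def write_time_s(total_gb: float, cache_gb: float,
                 burst_mb_s: float, sustained_mb_s: float) -> float:
    """Seconds to write total_gb when the first cache_gb go at burst speed
    and the remainder at the sustained (post-cache) speed.

    Simplified model: ignores the cache draining during idle periods.
    """
    fast_gb = min(total_gb, cache_gb)
    slow_gb = total_gb - fast_gb
    return fast_gb * 1000 / burst_mb_s + slow_gb * 1000 / sustained_mb_s


def effective_mb_s(total_gb: float, cache_gb: float,
                   burst_mb_s: float, sustained_mb_s: float) -> float:
    """Average write rate over the whole transfer."""
    return total_gb * 1000 / write_time_s(total_gb, cache_gb, burst_mb_s, sustained_mb_s)


if __name__ == "__main__":
    # Hypothetical drive: 2 GB cache at 500 MB/s, then 70 MB/s (the figure from the post).
    for size_gb in (1, 10, 100):
        t = write_time_s(size_gb, 2, 500, 70)
        print(f"{size_gb:>4} GB: {t:7.1f} s, ~{effective_mb_s(size_gb, 2, 500, 70):.0f} MB/s")
```

Under those assumptions a 100 GB write takes 1404 seconds, an effective rate of roughly 71 MB/s, a small fraction of what a 10GbE link can deliver.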
