zetoniak

Dabbler

- Joined: May 14, 2015
- Messages: 27
Hello everyone,

I made some benchmarking tests to my new system, this is my hardware setup:

Any recommendations/opinions about benchmarking will be welcomed.

-Motherboard: X10SRi-F

-CPU: E5-1650v3

-RAM: 32GB DDR4 PC4-17000

-M1015 - Connected to backplane via a sff--8087

-7 WD Red (Raidz2 + Spare)

-6 SAS 10k without pooling them yet, intended for future NFS for vmware use

"Sata" is my Raidz2 + Spare with lz4 compression enabled

Benchmarking is a datastore without compression enabled

DD Testing, zpool speed

WRITE

First noticed a dd can´t be done on compressed datastore because of compression, so i made a datastore without compression.

Command used -- dd if=/dev/zero of=tmp.dat bs=2048k count=50k

RESULTS

lz4 compressed

dd = 4,5 GB/S

RE-dd = 9,3 GB/S

Without compression

dd = 446 MB/S

RE-dd = 452 MB/s

READ

Before do this test i made bigger the files with 333GB on "Benchmarking"(uncompressed) and 367GB on "Sata"(lz4 Compressed)

Command dd if=tmp.dat of=/dev/null (Read)

lz4 compressed

Around 40MB/s

Without compression

Around 315MB/s

Why this difference on read?

ARC Testing

Command used from root directory (Don't know if this is the good way for test, but here is my output)

Maybe when the server is on production will be better to test this.

ls -R /mnt/Sata | wc -l

output: 905262

While performing the above command i looked on my ARC with the next command:

arcstat.py -f read,hits,miss,hit%,arcsz 1

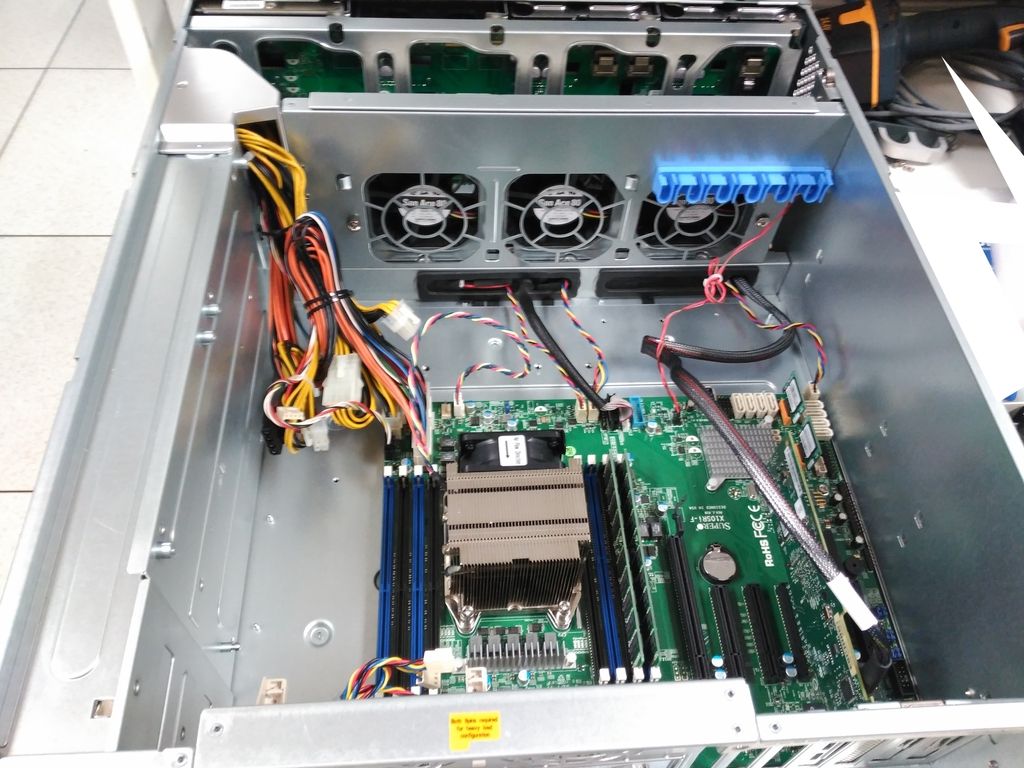

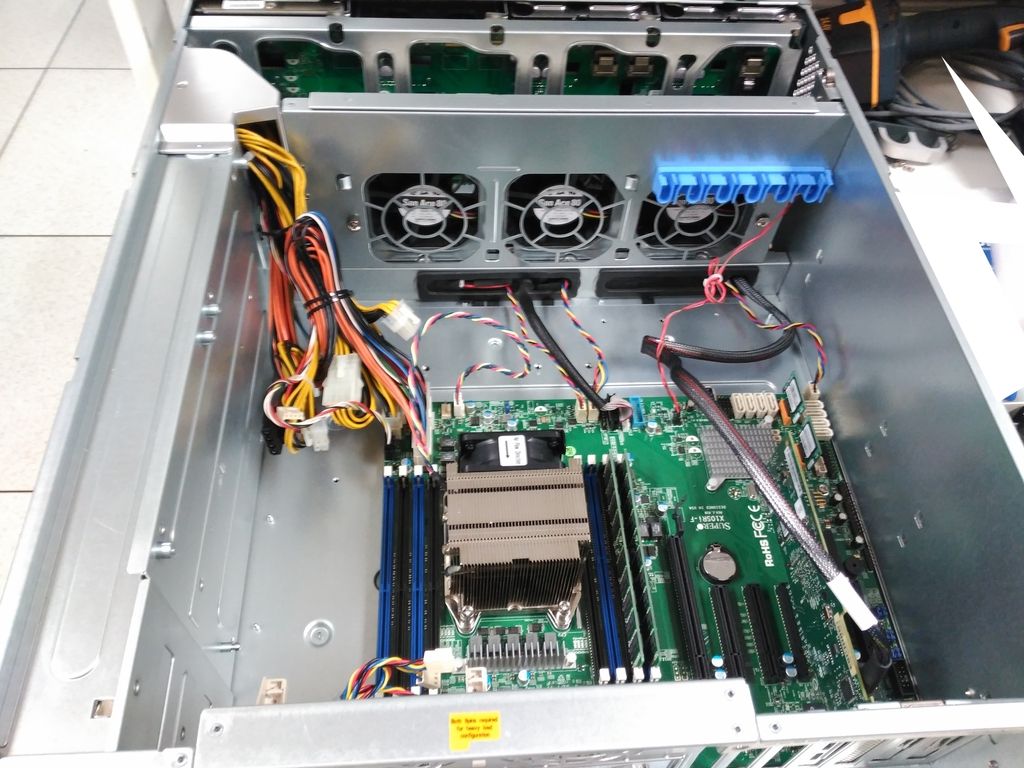

Bonus! Picture of my hardware assembled! (I had to change the sff-8087 connector to the left ports, 7 and 8 now i have both connected) soon i'll post pictures on my building post.

Thanks!

I ran some benchmarks on my new system. Any recommendations or opinions about the benchmarking are welcome.

This is my hardware setup:

- Motherboard: X10SRi-F
- CPU: E5-1650v3
- RAM: 32GB DDR4 PC4-17000
- M1015, connected to the backplane via SFF-8087
- 7 WD Red (RAIDZ2 + spare)
- 6 SAS 10k, not pooled yet, intended for a future NFS share for VMware use

"Sata" is my RAIDZ2 + spare pool with lz4 compression enabled.

"Benchmarking" is a dataset without compression enabled.

DD Testing, zpool speed

WRITE

First I noticed that a dd from /dev/zero is not a meaningful test on a compressed dataset, because the zeros compress away to almost nothing, so I created a dataset without compression.

Command used: dd if=/dev/zero of=tmp.dat bs=2048k count=50k

Code:

```
[root@freenas] /mnt/Sata# dd if=/dev/zero of=tmp.dat bs=2048k count=50k
51200+0 records in
51200+0 records out
107374182400 bytes transferred in 23.840734 secs (4503811937 bytes/sec)
[root@freenas] /mnt/Sata# dd of=/dev/zero if=tmp.dat bs=2048k count=50k
51200+0 records in
51200+0 records out
107374182400 bytes transferred in 11.511164 secs (9327830171 bytes/sec)
[root@freenas] /mnt/Sata/Benchmarking# dd if=/dev/zero of=tmp.dat bs=2048k count=50k
51200+0 records in
51200+0 records out
107374182400 bytes transferred in 240.280683 secs (446869807 bytes/sec)
[root@freenas] /mnt/Sata/Benchmarking# dd if=/dev/zero of=tmp.dat bs=2048k count=50k
51200+0 records in
51200+0 records out
107374182400 bytes transferred in 237.112166 secs (452841304 bytes/sec)
```
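As a cross-check on the figures dd reports, the throughput can be recomputed from the byte count and elapsed time in each summary line. This is only a minimal sketch: the helper name `dd_throughput` and the `runs` dictionary are made up for illustration, and the regular expression assumes the FreeBSD-style summary format seen in the output above.

```python
import re

# Matches the summary line printed by dd in the output above
# (format assumed from that output, not from any specification).
DD_SUMMARY = re.compile(
    r"(?P<bytes>\d+) bytes transferred in (?P<secs>[\d.]+) secs"
)


def dd_throughput(summary: str) -> float:
    """Return bytes per second computed from a dd summary line."""
    match = DD_SUMMARY.search(summary)
    if match is None:
        raise ValueError("not a dd summary line")
    return int(match["bytes"]) / float(match["secs"])


# Two of the summary lines from the write test, copied verbatim.
runs = {
    "lz4, first run": (
        "107374182400 bytes transferred in 23.840734 secs "
        "(4503811937 bytes/sec)"
    ),
    "no compression, first run": (
        "107374182400 bytes transferred in 240.280683 secs "
        "(446869807 bytes/sec)"
    ),
}

for label, line in runs.items():
    rate = dd_throughput(line)
    # Show both decimal (GB) and binary (GiB) units, since tools differ.
    print(f"{label}: {rate / 1e9:.2f} GB/s ({rate / 2**30:.2f} GiB/s)")
```

The GB/s and MB/s values quoted in the results are decimal units; the same rates expressed in GiB/s or MiB/s come out slightly lower.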

RESULTS

lz4 compressed

dd = 4.5 GB/s

RE-dd = 9.3 GB/s (this run had if= and of= swapped, so it read tmp.dat back instead of writing it)

Without compression

dd = 446 MB/s

RE-dd = 452 MB/s
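The roughly tenfold gap between the compressed and uncompressed write results is what one would expect when the input is all zeros, since such data shrinks to almost nothing before it reaches the disks. The sketch below illustrates the effect with Python's `zlib`, which is only a stand-in here: lz4 is a different algorithm and is not in the standard library, and the helper name `compressed_ratio` is invented for this example.

```python
import os
import zlib


def compressed_ratio(data: bytes) -> float:
    """Return compressed size divided by original size."""
    return len(zlib.compress(data)) / len(data)


ONE_MIB = 1024 * 1024

# What dd reads from /dev/zero: a block containing only zero bytes.
zeros = bytes(ONE_MIB)

# Incompressible data for comparison (random bytes).
random_data = os.urandom(ONE_MIB)

print(f"zeros:  {compressed_ratio(zeros):.4%} of original size")
print(f"random: {compressed_ratio(random_data):.4%} of original size")
```

With zeros the compressed output is a tiny fraction of the input, while random data does not shrink at all, which is why a benchmark fed from /dev/zero mostly measures the compressor rather than the disks.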

READ

Before this test I enlarged the files to 333 GB on "Benchmarking" (uncompressed) and 367 GB on "Sata" (lz4 compressed).

Command used: dd if=tmp.dat of=/dev/null

lz4 compressed

Around 40 MB/s

Without compression

Around 315 MB/s

Code:

```
/mnt/Sata# dd if=tmp.dat of=/dev/null
^C11577901+0 records in
11577901+0 records out
5927885312 bytes transferred in 151.593031 secs (39103943 bytes/sec)
/mnt/Sata/Benchmarking# dd if=tmp.dat of=/dev/null
^C143432600+0 records in
143432600+0 records out
73437491200 bytes transferred in 232.133846 secs (316358396 bytes/sec)
```
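One detail worth pulling out of this output: the read commands do not set bs=, and dividing the bytes transferred by the record count gives the block size dd actually used. The sketch below does that arithmetic with the numbers copied from the output above; the `reads` dictionary and the helper name are illustrative only. It shows both reads ran with 512-byte records, so the small block size may be depressing both figures, but it does not by itself explain the gap between the two datasets.

```python
def bytes_per_record(total_bytes: int, records: int) -> float:
    """Return the average record (block) size of a dd run."""
    return total_bytes / records


# (bytes transferred, records, elapsed seconds) from the read test output.
reads = {
    "Sata (lz4)": (5927885312, 11577901, 151.593031),
    "Benchmarking (no compression)": (73437491200, 143432600, 232.133846),
}

for label, (total_bytes, records, secs) in reads.items():
    block = bytes_per_record(total_bytes, records)
    # Each record corresponds to one read of that size issued by dd.
    print(
        f"{label}: {block:.0f} bytes per record, "
        f"{records / secs:,.0f} records/s, "
        f"{total_bytes / secs / 1e6:.1f} MB/s"
    )
```

Repeating the read test with the same bs=2048k used for the write test would make the read and write numbers easier to compare.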

Why is there such a difference on reads?

ARC Testing

Command run from the root directory (I don't know if this is a good way to test, but here is my output).

It may be better to test this once the server is in production.

ls -R /mnt/Sata | wc -l

output: 905262

While running the command above I watched the ARC with the following command:

arcstat.py -f read,hits,miss,hit%,arcsz 1

Code:

```
read  hits  miss  hit%  arcsz
   0     0     0     0    20G
154K  149K  5.2K    96    20G
195K  181K   14K    92    20G
178K  156K   21K    87    20G
260K  254K  5.7K    97    20G
242K  240K  2.0K    99    20G
107K   97K  9.8K    90    20G
 86K   75K   11K    87    20G
   0     0     0     0    20G
```
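To summarise the arcstat samples, the hit percentage can be recomputed from the read and hits columns and combined into one overall figure for the whole `ls -R` run. This is a rough sketch under two assumptions that are mine, not arcstat's documentation: that the K suffix means thousands, and that hit% is hits divided by reads, truncated to an integer. The names `parse_count`, `hit_percent` and `samples` are illustrative.

```python
SUFFIXES = {"K": 1e3, "M": 1e6, "G": 1e9}


def parse_count(text: str) -> float:
    """Convert an abbreviated count such as '5.2K' to a number."""
    if text[-1] in SUFFIXES:
        return float(text[:-1]) * SUFFIXES[text[-1]]
    return float(text)


def hit_percent(read: str, hits: str) -> int:
    """Hit percentage, truncated (assumed to match arcstat's hit% column)."""
    reads = parse_count(read)
    return int(100 * parse_count(hits) / reads) if reads else 0


# (read, hits, reported hit%) for the non-idle samples in the output above.
samples = [
    ("154K", "149K", 96),
    ("195K", "181K", 92),
    ("178K", "156K", 87),
    ("260K", "254K", 97),
    ("242K", "240K", 99),
    ("107K", "97K", 90),
    ("86K", "75K", 87),
]

for read, hits, reported in samples:
    print(f"read={read:>5} hits={hits:>5} computed={hit_percent(read, hits)}% "
          f"reported={reported}%")

total_reads = sum(parse_count(read) for read, _, _ in samples)
total_hits = sum(parse_count(hits) for _, hits, _ in samples)
overall = 100 * total_hits / total_reads
print(f"overall hit rate: {overall:.1f}%")
```

Across these seven samples the overall hit rate works out to about 94%, which fits a metadata-heavy workload like a recursive directory listing being served mostly from ARC.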

Bonus! Picture of my hardware assembled! (I had to change the sff-8087 connector to the left ports, 7 and 8 now i have both connected) soon i'll post pictures on my building post.

Thanks!