I finally managed to set up a TrueNAS box as my new homelab ESX datastore these days. My setup is as follows:

- Dual-Xeon E5-2690 v3 @ 2.60GHz

- 260GB RAM

- LSI SAS controller

- JBOD connected by 6Gb SAS

- 24x 400GB IBM Enterprise SSD

- lz4 compression, no dedup

- NFS4.1 share connected by 10Gbit ethernet to ESX hosts

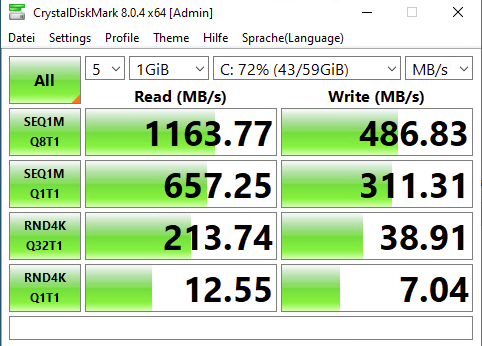

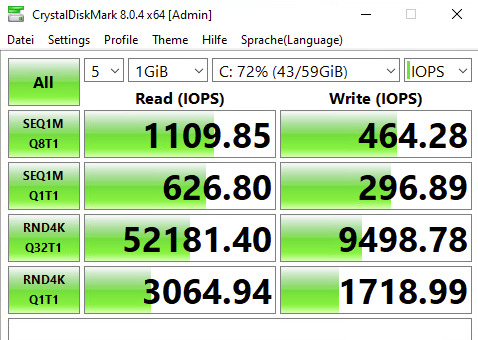

Even though IOPS are okay, the random write throughput in particular seems pretty low for this setup.
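As a rough frame of reference for "low", here is a back-of-the-envelope sketch of the theoretical link ceilings in this setup and of how IOPS translate into throughput. It is only a sketch under assumptions: 8b/10b encoding on the 6Gb SAS link, a 4-lane wide port to the JBOD (the lane count is a guess, not something stated above), and no Ethernet or NFS protocol overhead. The function names are made up for illustration.

```python
# Back-of-the-envelope link ceilings (theoretical, not measured).
# Assumptions: 8b/10b encoding on 6Gb SAS, 4 lanes to the JBOD,
# and zero protocol overhead on the 10Gbit Ethernet link.

def sas_lane_mb_s(line_rate_gbit: float) -> float:
    """Usable MB/s of one SAS lane: 8 data bits per 10 line bits, 8 bits per byte."""
    return line_rate_gbit * 1000 * 8 / 10 / 8


def ethernet_mb_s(line_rate_gbit: float) -> float:
    """Raw MB/s of an Ethernet link, ignoring framing and NFS overhead."""
    return line_rate_gbit * 1000 / 8


def throughput_mb_s(iops: float, block_size_kib: float) -> float:
    """Throughput in MB/s implied by an IOPS figure at a given block size."""
    return iops * block_size_kib * 1024 / 1_000_000


SAS_LANES = 4  # assumption: one x4 wide port between controller and JBOD

sas_ceiling = sas_lane_mb_s(6) * SAS_LANES  # 600 MB/s per lane
eth_ceiling = ethernet_mb_s(10)

print(f"SAS ceiling (x{SAS_LANES}): {sas_ceiling:.0f} MB/s")
print(f"10GbE ceiling:       {eth_ceiling:.0f} MB/s")

# Illustrative number only, not taken from the benchmark screenshots:
# 10,000 random-write IOPS at 4 KiB is only about 41 MB/s.
print(f"10k IOPS @ 4 KiB:    {throughput_mb_s(10_000, 4):.2f} MB/s")
```

Under these assumptions the 10Gbit link (about 1250 MB/s) sits below the SAS link, and small-block random writes give low MB/s figures even at decent IOPS, so the block size used in the benchmark matters when judging the result.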

Unfortunately, the reporting section of my TrueNAS doesn't show anything, just empty graphs, which makes it quite difficult to locate a bottleneck. CPU and memory are pretty idle even during benchmarking.

Is there any simple tweak I may have forgotten which could explain this performance? Do you guys have any idea why my reports section doesn't show any graphs?

Thanks in advance!
