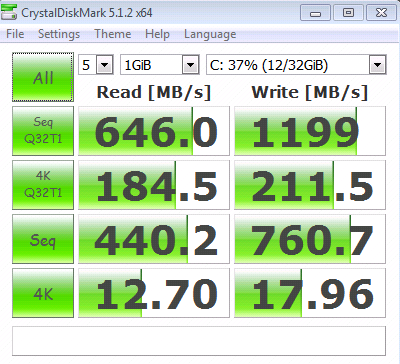

While testing 10GbE recently, I discovered what may be a serious problem with ARC read performance when paired with a 10GbE NIC. I'm running VMware ESXi 5.1U2 over iSCSI against FreeNAS 9.3-STABLE. Writes reach the full 10Gbps, but reads still top out at only half that. The ARC hit ratio is about as ideal as it gets (99.17%), and the CrystalDiskMark results above show the picture clearly. It's almost as if the ARC is slow at reading the data and pushing it out to the NIC. I originally started this thread here: https://forums.freenas.org/index.php?threads/10gb-tunables-on-9-10.42548/ (my posts start at #40).
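
For reference, here is a quick sketch of what "full 10Gbps" and "half" mean in the MB/s units benchmarks report. It only converts the raw line rate; Ethernet, TCP/IP and iSCSI framing overhead are ignored, so real-world ceilings will be somewhat lower.

```python
# Convert a link speed in gigabits per second into byte-based throughput.
# Raw line rate only: protocol overhead (Ethernet, TCP/IP, iSCSI) is ignored.

def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Decimal megabytes per second (1 MB = 10**6 bytes)."""
    return gbit_per_s * 1e9 / 8 / 1e6


def line_rate_mib_per_s(gbit_per_s: float) -> float:
    """Binary mebibytes per second (1 MiB = 2**20 bytes)."""
    return gbit_per_s * 1e9 / 8 / 2**20


if __name__ == "__main__":
    full = line_rate_mb_per_s(10)
    print(f"10GbE raw line rate: {full:.0f} MB/s ({line_rate_mib_per_s(10):.0f} MiB/s)")
    print(f"Half of that: {full / 2:.0f} MB/s")
```

So a read ceiling at "half" of 10GbE sits somewhere around 600 MB/s, well below what a 99% ARC hit ratio served from RAM should be able to sustain.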

Here's another thread where someone else reported a similar issue.

https://forums.freenas.org/index.php?threads/nfs-writes-blasting-fast-nfs-read-extremely-slow.19342/

I'm starting to think the bottleneck is now some sort of tuning issue on the FreeNAS end. Are there any FreeBSD/ZFS experts who can shed light on why ARC performance is suffering?

- Windows 7 VM over 10GbE

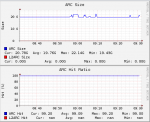

- ARC size -> 19.43GiB (MRU: 15.78GiB, MFU: 6.48GiB) / 32.00GiB

- Hit ratio -> 99.17% (higher is better)

- Prefetch -> 62.82% (higher is better)

- Hit MFU:MRU -> 90.07%:9.32% (higher ratio is better)

- Hit MRU Ghost -> 0.00% (lower is better)

- Hit MFU Ghost -> 0.00% (lower is better)
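
A minimal Python sketch of how ratios like the ones above can be derived from the raw ARC counters. It assumes the counters come from FreeBSD's `sysctl kstat.zfs.misc.arcstats` output (lines of the form `kstat.zfs.misc.arcstats.hits: 12345`), and that the MFU/MRU/ghost figures are each list's hits divided by total ARC hits, which is my understanding of how arc_summary.py reports them rather than something confirmed here. The prefetch figure is left out, and the sample counters in the usage section are made up for illustration.

```python
from typing import Dict

PREFIX = "kstat.zfs.misc.arcstats."


def parse_arcstats(sysctl_output: str) -> Dict[str, int]:
    """Turn 'kstat.zfs.misc.arcstats.hits: 12345' lines into {'hits': 12345}."""
    stats: Dict[str, int] = {}
    for line in sysctl_output.splitlines():
        name, sep, value = line.partition(":")
        name = name.strip()
        if not sep or not name.startswith(PREFIX):
            continue  # skip blank or unrelated lines
        try:
            stats[name[len(PREFIX):]] = int(value.strip())
        except ValueError:
            continue  # skip non-integer values
    return stats


def pct(part: int, whole: int) -> float:
    """Percentage, guarding against division by zero."""
    return 100.0 * part / whole if whole else 0.0


def arc_ratios(s: Dict[str, int]) -> Dict[str, float]:
    """Hit ratio over all lookups; per-list shares over ARC hits (assumed convention)."""
    hits, misses = s["hits"], s["misses"]
    return {
        "hit_ratio": pct(hits, hits + misses),
        "mfu_share": pct(s["mfu_hits"], hits),
        "mru_share": pct(s["mru_hits"], hits),
        "mfu_ghost_share": pct(s["mfu_ghost_hits"], hits),
        "mru_ghost_share": pct(s["mru_ghost_hits"], hits),
    }


if __name__ == "__main__":
    # Hypothetical counters, not taken from the system described in this post.
    sample = "\n".join([
        "kstat.zfs.misc.arcstats.hits: 900",
        "kstat.zfs.misc.arcstats.misses: 100",
        "kstat.zfs.misc.arcstats.mfu_hits: 810",
        "kstat.zfs.misc.arcstats.mru_hits: 90",
        "kstat.zfs.misc.arcstats.mfu_ghost_hits: 0",
        "kstat.zfs.misc.arcstats.mru_ghost_hits: 0",
    ])
    for key, value in arc_ratios(parse_arcstats(sample)).items():
        print(f"{key}: {value:.2f}%")
```

On a live box the same functions could be fed the text of `sysctl kstat.zfs.misc.arcstats`; note these are cumulative counters since boot, so a high hit ratio says nothing about how fast each hit is delivered to the NIC.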
