Hi,

In recent weeks two (/dev/ada3 & /dev/ada5) of my eight drives started to report more and more bad sectors and errors so I've decided to replace them.

I followed official instructions for replacing a failed disk https://www.ixsystems.com/documentation/freenas/11.3-U1/storage.html#replacing-a-failed-disk

I've done all of those steps via GUI:

* changed the faulty /dev/ada3 disk status to OFFLINE

* shutdown the system

* physically replaced the failing drive with a new one

* then I replaced the OFFLINE drive with the new one and then resilvering started

* resilvering took roughly 10 hours and finished @ Sun Apr 26 05:58:46 2020

* during the resilvering process a short SMART test ran and it found two new issues with the other faulty drive /dev/ada5 (which I want to replace next)

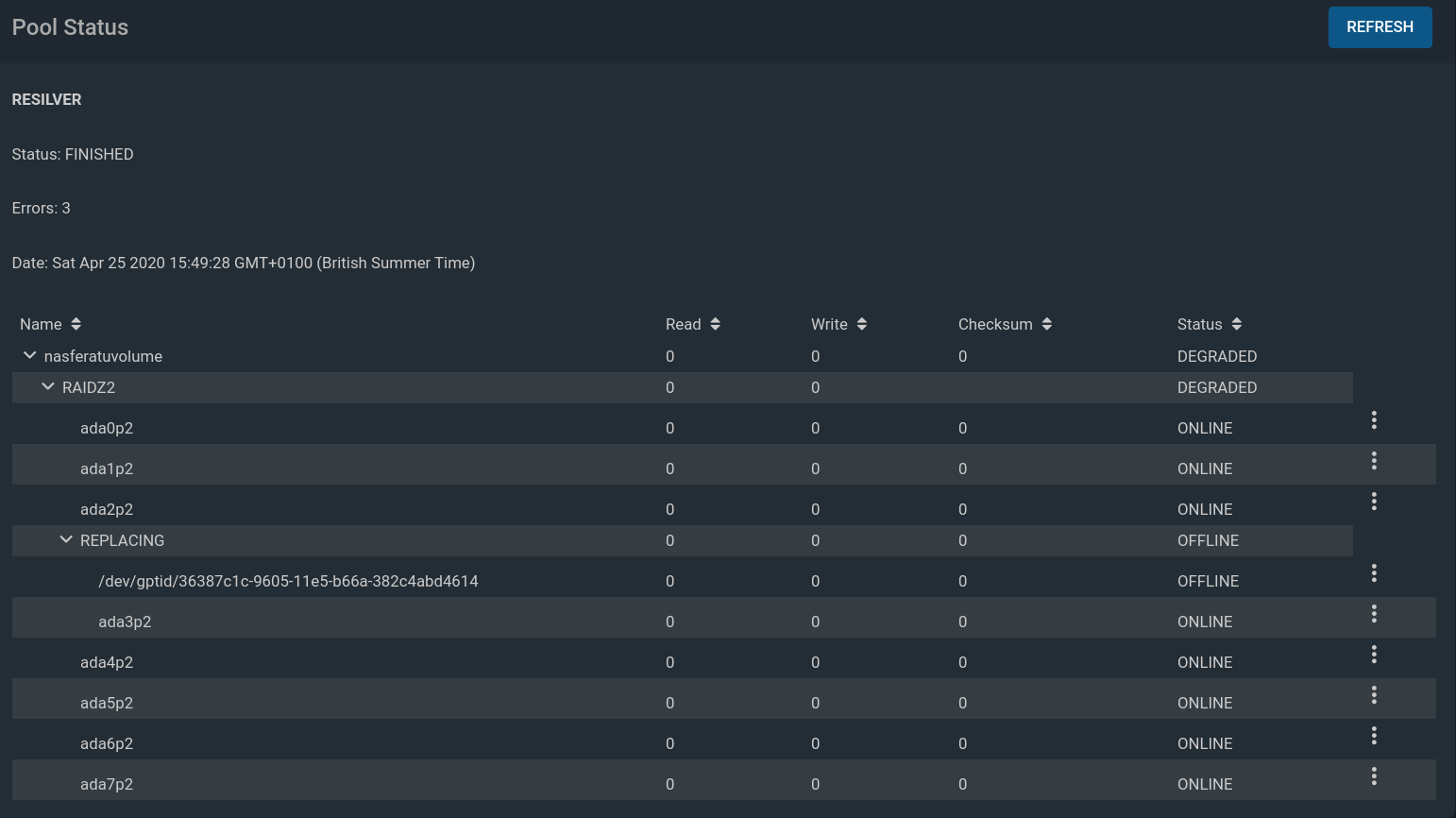

Once the resilvering finished (with 3 errors) the status of new drive changed to ONLINE but I the drive is now in strange "REPLACING/OFFLINE" mode.

I tried to detach that OFFLINE " /dev/gptid/36387c1c-9605-11e5-b66a-382c4abd4614" but I got this error:

PS. The full stacktrace for that error at the end of this post.

I then checked pool status via CLI and it showed me the same 3 permanent errors as before I replaced the drive.

PS. I don't care much about loosing those files.

I did a lot if googling but could not find a clear set of instructions of what to do next :(

Full stacktrace for "ZFSException: Can detach disks from mirrors and spares only"

In recent weeks, two of my eight drives (/dev/ada3 and /dev/ada5) started reporting more and more bad sectors and errors, so I decided to replace them.

I followed the official instructions for replacing a failed disk: https://www.ixsystems.com/documentation/freenas/11.3-U1/storage.html#replacing-a-failed-disk

I did all of those steps via the GUI:

* changed the faulty /dev/ada3 disk status to OFFLINE

* shut down the system

* physically replaced the failing drive with a new one

* then I replaced the OFFLINE drive with the new one, which started the resilvering

* resilvering took roughly 14 hours (14:09:18 according to zpool status) and finished on Sun Apr 26 05:58:46 2020

* during the resilvering process a short SMART test ran and it found two new issues with the other faulty drive /dev/ada5 (which I want to replace next)

Code:

CRITICAL Device: /dev/ada5, ATA error count increased from 65 to 70. Sun, 26 Apr 2020 12:20:08 AM (Europe/London)

Code:

CRITICAL Device: /dev/ada5, Self-Test Log error count increased from 8 to 9. Sun, 26 Apr 2020 12:50:07 AM (Europe/London)
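To keep track of how quickly those counters are growing, the two alerts above can be parsed with a few lines of Python. This is only a minimal sketch: the regular expression is an assumption based on the two alert lines quoted in this post, not a documented format, and `parse_alert` and `summarize` are hypothetical helper names.

```python
import re

# Alert lines copied from the post above.
ALERTS = [
    "CRITICAL Device: /dev/ada5, ATA error count increased from 65 to 70. "
    "Sun, 26 Apr 2020 12:20:08 AM (Europe/London)",
    "CRITICAL Device: /dev/ada5, Self-Test Log error count increased from 8 to 9. "
    "Sun, 26 Apr 2020 12:50:07 AM (Europe/London)",
]

# Assumed shape: "<level> Device: <dev>, <counter> increased from <old> to <new>."
ALERT_RE = re.compile(
    r"^(?P<level>\w+) Device: (?P<device>\S+), "
    r"(?P<counter>.+?) increased from (?P<old>\d+) to (?P<new>\d+)\."
)


def parse_alert(line):
    """Return a dict describing one alert line, or None if it does not match."""
    m = ALERT_RE.match(line)
    if m is None:
        return None
    return {
        "level": m["level"],
        "device": m["device"],
        "counter": m["counter"],
        "old": int(m["old"]),
        "new": int(m["new"]),
    }


def summarize(lines):
    """Map (device, counter) to the total growth of that counter."""
    growth = {}
    for line in lines:
        alert = parse_alert(line)
        if alert is not None:
            key = (alert["device"], alert["counter"])
            growth[key] = growth.get(key, 0) + alert["new"] - alert["old"]
    return growth


if __name__ == "__main__":
    for (device, counter), delta in summarize(ALERTS).items():
        print(f"{device}: {counter} grew by {delta}")
```

Lines that do not match the assumed pattern are skipped rather than raising, so the same helper could be pointed at a longer alert log.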

Once the resilvering finished (with 3 errors), the status of the new drive changed to ONLINE, but the drive is now in a strange "REPLACING/OFFLINE" mode.

I tried to detach the OFFLINE "/dev/gptid/36387c1c-9605-11e5-b66a-382c4abd4614" but got this error:

Code:

[EZFS_NOTSUP] Can detach disks from mirrors and spares only

PS. The full stacktrace for that error is at the end of this post.
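For anyone who prefers the shell, the GUI actions described so far correspond roughly to the `zpool offline`, `zpool replace` and `zpool detach` subcommands. The sketch below only builds and prints those command lines; it does not run anything. The device names are the gptid labels quoted in this post, `gui_steps_as_cli` is a hypothetical helper, and the exact calls the GUI makes internally are an assumption. Whether a manual detach is the right next step is the open question here, so this is an illustration of the mapping and not a recommended fix.

```python
import shlex

# Names taken from this post; adjust for any other pool.
POOL = "nasferatuvolume"
OLD = "gptid/36387c1c-9605-11e5-b66a-382c4abd4614"  # old ada3 member
NEW = "gptid/f60625cd-8703-11ea-9f29-001b21275bb9"  # new member per the pool status


def zpool_cmd(subcommand, *args):
    """Build an argument list for one zpool invocation (nothing is executed)."""
    return ["zpool", subcommand, *args]


def gui_steps_as_cli(pool, old, new):
    """Assumed CLI counterparts of: set OFFLINE, replace, detach old member."""
    return [
        zpool_cmd("offline", pool, old),
        zpool_cmd("replace", pool, old, new),
        zpool_cmd("detach", pool, old),
    ]


def render(cmd):
    """Quote an argument list so it can be pasted into a shell."""
    return " ".join(shlex.quote(arg) for arg in cmd)


if __name__ == "__main__":
    for cmd in gui_steps_as_cli(POOL, OLD, NEW):
        print(render(cmd))
```

Keeping the commands as argument lists and quoting them only for display avoids accidental word splitting if a device label ever contains unusual characters.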

I then checked pool status via CLI and it showed me the same 3 permanent errors as before I replaced the drive.

PS. I don't care much about losing those files.

Code:

nasferatu# zpool status -v nasferatuvolume
  pool: nasferatuvolume
 state: DEGRADED
status: One or more devices has experienced an error resulting in data
        corruption. Applications may be affected.
action: Restore the file in question if possible. Otherwise restore the
        entire pool from backup.
   see: http://illumos.org/msg/ZFS-8000-8A
  scan: resilvered 3.09T in 0 days 14:09:18 with 3 errors on Sun Apr 26 05:58:46 2020
config:

        NAME                                              STATE     READ WRITE CKSUM
        nasferatuvolume                                   DEGRADED     0     0     3
          raidz2-0                                        DEGRADED     0     0     6
            gptid/271e756e-9605-11e5-b66a-382c4abd4614    ONLINE       0     0     0
            gptid/2c32e27b-9605-11e5-b66a-382c4abd4614    ONLINE       0     0     0
            gptid/31362dba-9605-11e5-b66a-382c4abd4614    ONLINE       0     0     0
            replacing-3                                   OFFLINE      0     0     0
              854069389795236902                          OFFLINE      0     0     0  was /dev/gptid/36387c1c-9605-11e5-b66a-382c4abd4614
              gptid/f60625cd-8703-11ea-9f29-001b21275bb9  ONLINE       0     0     0
            gptid/3b7b207e-9605-11e5-b66a-382c4abd4614    ONLINE       0     0     0
            gptid/40a65355-9605-11e5-b66a-382c4abd4614    ONLINE       0     0     0
            gptid/45e1321a-9605-11e5-b66a-382c4abd4614    ONLINE       0     0     0
            gptid/4b337f7c-9605-11e5-b66a-382c4abd4614    ONLINE       0     0     0

errors: Permanent errors have been detected in the following files:

        nasferatuvolume/photo@auto-20200420.0500-1w:/2016/2016-06-04_02/VID_20160404.mp4
        nasferatuvolume/photo@auto-20200420.0500-1w:/2017/2017-03-04_05/VID_20170304.mp4
        nasferatuvolume/photo@auto-20200420.0500-1w:/2017/2017-05-05_01/VID_20170505.mp4

I did a lot of googling but could not find a clear set of instructions for what to do next :(
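To make the odd part of that status output easier to spot, here is a small Python sketch that picks out the members of any `replacing-N` vdev together with their states. It assumes zpool's usual layout of two spaces of indentation per nesting level, which the excerpt below restores by hand, and `parse_config` and `replacing_members` are hypothetical helper names.

```python
# Excerpt of the config section from this post. The two-spaces-per-level
# nesting is restored by hand and is an assumption about the original layout.
CONFIG = """\
nasferatuvolume                                   DEGRADED     0     0     3
  raidz2-0                                        DEGRADED     0     0     6
    gptid/31362dba-9605-11e5-b66a-382c4abd4614    ONLINE       0     0     0
    replacing-3                                   OFFLINE      0     0     0
      854069389795236902                          OFFLINE      0     0     0  was /dev/gptid/36387c1c-9605-11e5-b66a-382c4abd4614
      gptid/f60625cd-8703-11ea-9f29-001b21275bb9  ONLINE       0     0     0
    gptid/3b7b207e-9605-11e5-b66a-382c4abd4614    ONLINE       0     0     0
"""


def parse_config(text):
    """Return a list of (depth, name, state, note) tuples, one per vdev row."""
    rows = []
    for line in text.splitlines():
        if not line.strip():
            continue
        indent = len(line) - len(line.lstrip(" "))
        fields = line.split()
        name, state = fields[0], fields[1]
        # Anything after the three error counters is a free-form note.
        note = " ".join(fields[5:])
        rows.append((indent // 2, name, state, note))
    return rows


def replacing_members(rows):
    """Return {replacing vdev name: [(member, state, note), ...]}."""
    result = {}
    current, current_depth = None, None
    for depth, name, state, note in rows:
        if name.startswith("replacing-"):
            current, current_depth = name, depth
            result[current] = []
        elif current is not None and depth > current_depth:
            result[current].append((name, state, note))
        else:
            # Back at the same level or higher: the replacing vdev has ended.
            current = None
    return result


if __name__ == "__main__":
    for vdev, members in replacing_members(parse_config(CONFIG)).items():
        print(vdev)
        for name, state, note in members:
            print(f"  {name}: {state} {note}".rstrip())
```

For the excerpt above this lists the old member (shown by its numeric ID, OFFLINE) and the new gptid member (ONLINE) under `replacing-3`, which is the pair the post is asking about.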

Full stacktrace for "ZFSException: Can detach disks from mirrors and spares only"

Code:

Error: concurrent.futures.process._RemoteTraceback:

"""

Traceback (most recent call last):

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/zfs.py", line 247, in __zfs_vdev_operation

op(target, *args)

File "libzfs.pyx", line 369, in libzfs.ZFS.__exit__

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/zfs.py", line 247, in __zfs_vdev_operation

op(target, *args)

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/zfs.py", line 256, in <lambda>

self.__zfs_vdev_operation(name, label, lambda target: target.detach())

File "libzfs.pyx", line 1764, in libzfs.ZFSVdev.detach

libzfs.ZFSException: Can detach disks from mirrors and spares only

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/lib/python3.7/concurrent/futures/process.py", line 239, in _process_worker

r = call_item.fn(*call_item.args, **call_item.kwargs)

File "/usr/local/lib/python3.7/site-packages/middlewared/worker.py", line 95, in main_worker

res = loop.run_until_complete(coro)

File "/usr/local/lib/python3.7/asyncio/base_events.py", line 579, in run_until_complete

return future.result()

File "/usr/local/lib/python3.7/site-packages/middlewared/worker.py", line 51, in _run

return await self._call(name, serviceobj, methodobj, params=args, job=job)

File "/usr/local/lib/python3.7/site-packages/middlewared/worker.py", line 43, in _call

return methodobj(*params)

File "/usr/local/lib/python3.7/site-packages/middlewared/worker.py", line 43, in _call

return methodobj(*params)

File "/usr/local/lib/python3.7/site-packages/middlewared/schema.py", line 965, in nf

return f(*args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/zfs.py", line 256, in detach

self.__zfs_vdev_operation(name, label, lambda target: target.detach())

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/zfs.py", line 249, in __zfs_vdev_operation

raise CallError(str(e), e.code)

middlewared.service_exception.CallError: [EZFS_NOTSUP] Can detach disks from mirrors and spares only

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 130, in call_method

io_thread=False)

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1077, in _call

return await methodobj(*args)

File "/usr/local/lib/python3.7/site-packages/middlewared/schema.py", line 961, in nf

return await f(*args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/middlewared/plugins/pool.py", line 1194, in detach

await self.middleware.call('zfs.pool.detach', pool['name'], found[1]['guid'])

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1127, in call

app=app, pipes=pipes, job_on_progress_cb=job_on_progress_cb, io_thread=True,

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1074, in _call

return await self._call_worker(name, *args)

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1094, in _call_worker

return await self.run_in_proc(main_worker, name, args, job)

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1029, in run_in_proc

return await self.run_in_executor(self.__procpool, method, *args, **kwargs)

File "/usr/local/lib/python3.7/site-packages/middlewared/main.py", line 1003, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

middlewared.service_exception.CallError: [EZFS_NOTSUP] Can detach disks from mirrors and spares only