Elliot Dierksen (Guru, joined Dec 29, 2014, 1,135 messages)

After some upgrades to my home lab, I appear to have set myself back a bit in terms of performance, which is kind of discouraging. Here is what I have now.

Primary FreeNAS - 11.3-U3.2:

Cisco C240 M4SX - 24 x 2.5" SAS/SATA drive bays

Dual E5-2637 v3 @ 3.50GHz

256 GB ECC DRAM

2 x Intel i350 Gigabit NIC for management (using 2 ports in LACP channel)

Chelsio T580-CR dual port 40 Gigabit NIC for storage network (using 1 port)

LSI 3108 based HBA

Storage pool = 2 RAIDZ2 vdevs of 8 x 1TB 7.2k SATA drives with one spare drive

Intel Optane 900P SLOG

LSI 9207-8E for future external expansion

LSI 9300-8E for future external expansion

Boot pool = mirrored 120G SATA SSD drives

Secondary FreeNAS - 11.3-U3.2:

Cisco C240 M4SX - 24 x 2.5" SAS/SATA drive bays

Dual E5-2637 v3 @ 3.50GHz

160 GB ECC DRAM

2 x Intel i350 Gigabit NIC for management (using 2 ports in LACP channel)

Chelsio T580-CR dual port 40 Gigabit NIC for storage network (using 1 port)

LSI 3108 based HBA

LSI 9207-8E connected to HP D2700 external enclosure - 25 x 2.5" SAS/SATA drive bays

Storage pool (D2700) = 4 RAIDZ2 vdevs of 6 x 300GB 10k SAS drives with one spare drive

Intel Optane 900P SLOG (shared device, separate partition per pool)

Storage pool 2 (D2600) = 2 RAIDZ2 vdevs of 6 x 2TB 7.2k SAS drives with one spare drive (internal)

Intel Optane 900P SLOG (shared device, separate partition per pool)

LSI 9300-8E for future external expansion

Boot pool = mirrored 120G SATA SSD drives

Network Infrastructure:

2 x Cisco 3750E 48 port gigabit PoE, 2 10 gigabit ports

1 x Cisco 3560E 24 port gigabit PoE, 2 10 gigabit ports

1 x Cisco Nexus 3064-X, 48 SFP+ ports, 4 QSFP ports

Virtualization Infrastructure:

2 x Cisco C240 M4SX running ESXi 6.7

Dual E5-2697 v3 @ 2.60GHz

256GB ECC RAM

2 x Intel i350 Gigabit NIC for management & VM guest traffic using 2 ports in LACP channel

Cisco UCS VIC 1227 dual port 10 Gigabit NIC, 1 port for storage (NFS), 1 port for vMotion

UCSC-MRAID12G (LSI Chipset) RAID controller

Boots from RAID1 - 2 x 200G SATA SSD drives

RAID1 datastore - 2 x 1.1T 12G SAS drives

Cisco C240 M3S running ESXi 6.5

Dual E5-2687W v2 @ 3.40GHz

256GB ECC RAM

2 x Intel i350 Gigabit NIC for management & VM guest traffic using 2 ports in LACP channel

Cisco UCS VIC 1225 dual port 10 Gigabit NIC, 1 port for storage (NFS), 1 port for vMotion

LSI 9271-8I RAID controller

Boots from RAID1 - 2 x 200G SATA SSD drives

datastore - 3 x RAID1 (2 x 300G 6G SAS drives)


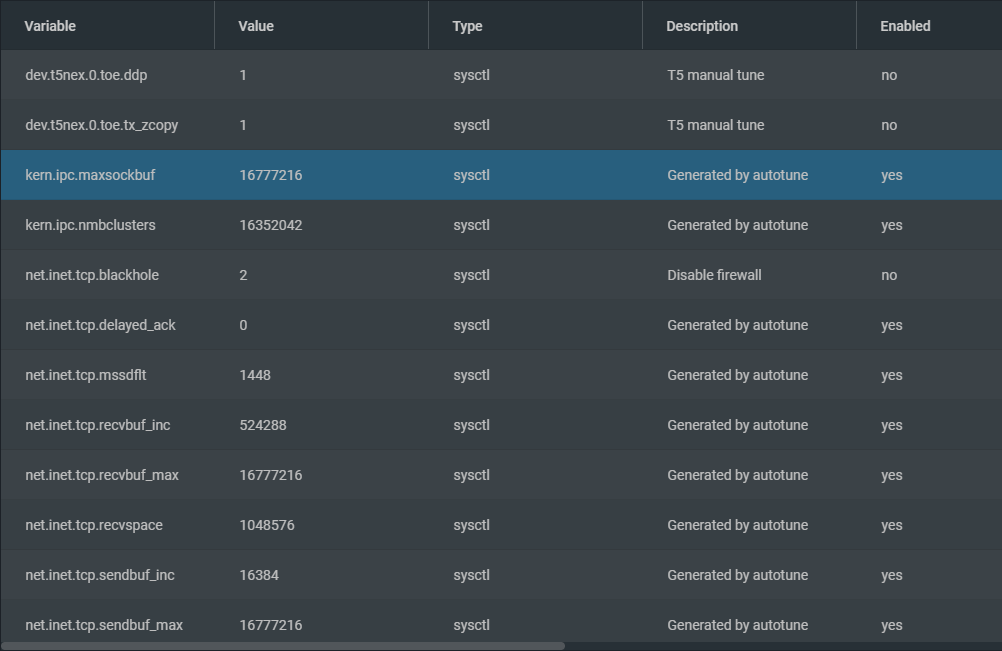

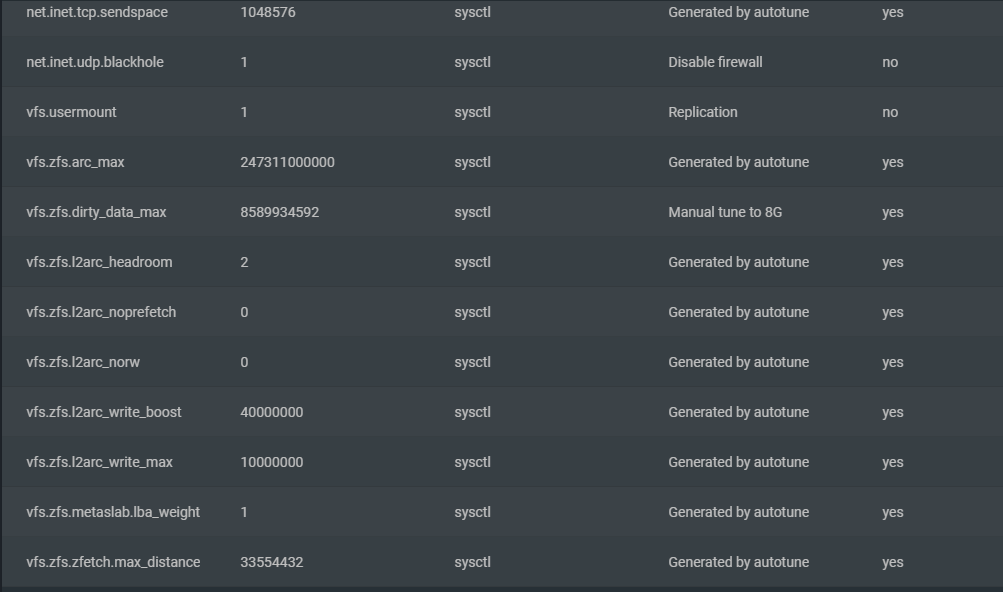

Primary FreeNAS tunables:

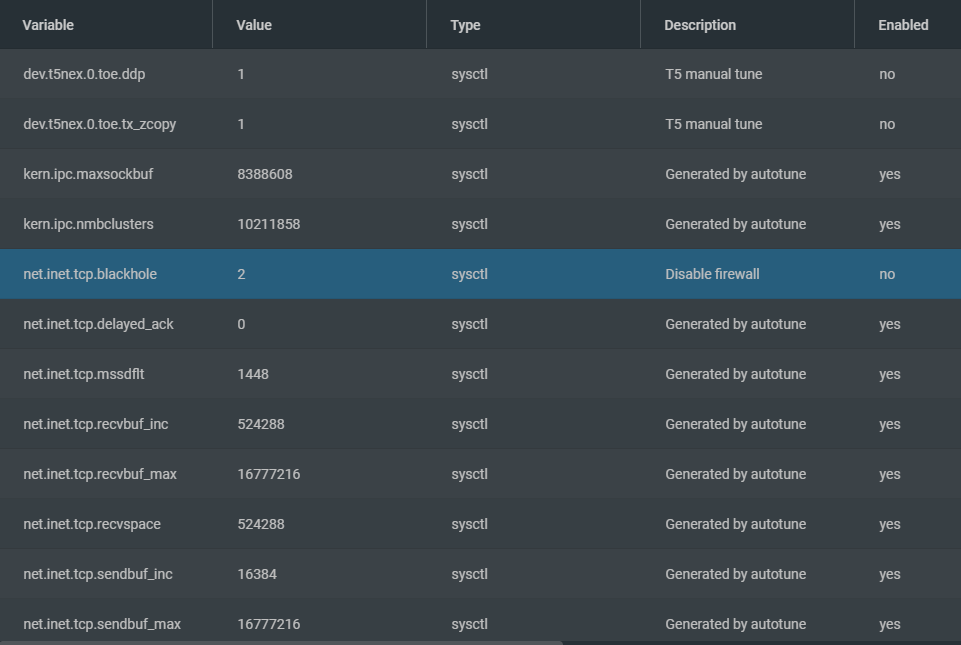

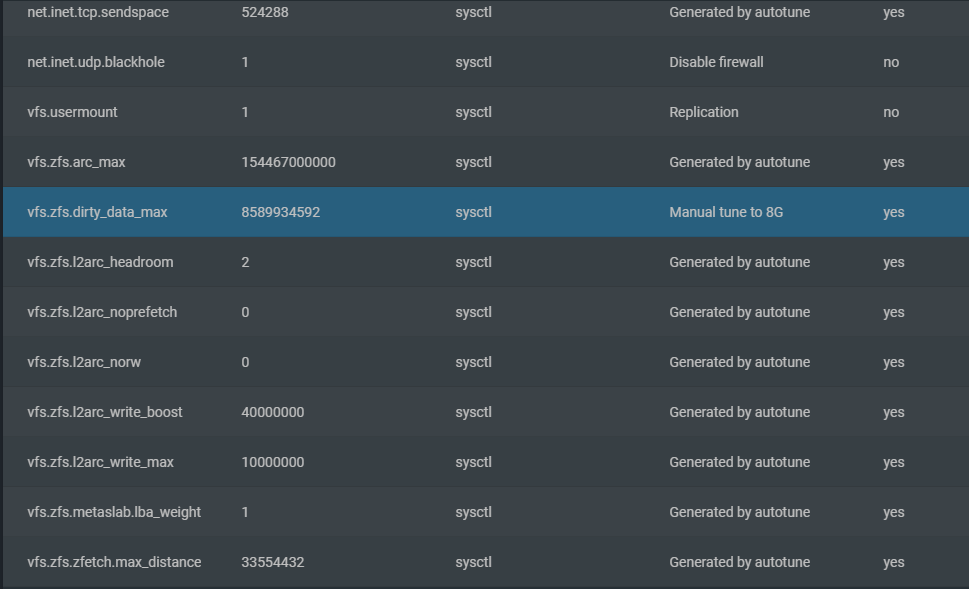

Secondary FreeNAS tunables:



I am particularly annoyed that I can't seem to get past 27G with iperf. To keep the number of possible suspects down, I am using a 40GBASE-CR4 DAC cable between the two systems for testing. The testing commands I am using are:

```
netperf -cC -H 192.168.250.23 -D 10 -l 30 -- -a -m 512k -s 2M -S 2M
iperf -c 192.168.250.27 -w 512k -P 8 -l 2M
```
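One way to check whether the socket buffer sizes in these commands could be the limit is the bandwidth-delay product: a link only stays full if at least bandwidth × round-trip time is in flight. The Python sketch below is a back-of-the-envelope aid, not a measurement. The 100 µs round-trip time is purely an assumed placeholder (the real RTT over the DAC was not measured here), and the function names are made up for illustration.

```python
def bdp_bytes(link_gbits, rtt_us):
    """Bytes that must be in flight to keep a link of link_gbits Gbit/s
    busy at a round-trip time of rtt_us microseconds."""
    return link_gbits * 1e9 * rtt_us / 8 / 1e6


def window_limited_gbits(window_bytes, rtt_us, streams=1):
    """Upper bound in Gbit/s that a TCP window of window_bytes allows
    per stream, multiplied by the number of parallel streams."""
    return streams * window_bytes * 8 * 1e6 / rtt_us / 1e9


# ASSUMPTION: 100 us RTT is a placeholder, replace it with a measured value.
ASSUMED_RTT_US = 100

needed = bdp_bytes(40, ASSUMED_RTT_US)
ceiling = window_limited_gbits(500_000, ASSUMED_RTT_US, streams=8)
print(f"window needed for 40 Gbit/s: {needed:.0f} bytes")
print(f"ceiling for 8 streams with 500,000-byte windows: {ceiling:.0f} Gbit/s")
```

If the measured RTT gives a ceiling far above 40 Gbit/s, the window size is unlikely to be what holds the test back, and it makes more sense to look elsewhere.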

I did have one run that got to 27.7G.

```
root@freenas:/ # iperf -c 192.168.250.27 -w 512k -P 8 -l 2M
------------------------------------------------------------
Client connecting to 192.168.250.27, TCP port 5001
TCP window size: 508 KByte (WARNING: requested 500 KByte)
------------------------------------------------------------
[ 9] local 192.168.250.23 port 43587 connected with 192.168.250.27 port 5001
[ 8] local 192.168.250.23 port 57509 connected with 192.168.250.27 port 5001
[ 7] local 192.168.250.23 port 29501 connected with 192.168.250.27 port 5001
[ 10] local 192.168.250.23 port 40059 connected with 192.168.250.27 port 5001
[ 4] local 192.168.250.23 port 20443 connected with 192.168.250.27 port 5001
[ 6] local 192.168.250.23 port 43150 connected with 192.168.250.27 port 5001
[ 3] local 192.168.250.23 port 62826 connected with 192.168.250.27 port 5001
[ 5] local 192.168.250.23 port 36698 connected with 192.168.250.27 port 5001
[ ID] Interval Transfer Bandwidth
[ 6] 0.0-10.0 sec 3.20 GBytes 2.75 Gbits/sec
[ 3] 0.0-10.0 sec 6.81 GBytes 5.85 Gbits/sec
[ 9] 0.0-10.0 sec 1.81 GBytes 1.55 Gbits/sec
[ 8] 0.0-10.0 sec 1.91 GBytes 1.64 Gbits/sec
[ 7] 0.0-10.0 sec 6.78 GBytes 5.82 Gbits/sec
[ 10] 0.0-10.0 sec 1.91 GBytes 1.64 Gbits/sec
[ 4] 0.0-10.0 sec 6.62 GBytes 5.69 Gbits/sec
[ 5] 0.0-10.0 sec 3.21 GBytes 2.76 Gbits/sec
[SUM] 0.0-10.0 sec 32.3 GBytes 27.7 Gbits/sec
```
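The per-stream figures in that run are quite uneven, which is easy to miss next to the total. As a quick sanity check, here is a minimal Python sketch that parses the per-stream summary lines from the run above and reports the total and the spread. The regular expression and the helper names are illustrative and only assume the iperf 2 summary layout shown in this post.

```python
import re

# Per-stream summary lines copied from the iperf run above.
IPERF_SUMMARY = """\
[ 6] 0.0-10.0 sec 3.20 GBytes 2.75 Gbits/sec
[ 3] 0.0-10.0 sec 6.81 GBytes 5.85 Gbits/sec
[ 9] 0.0-10.0 sec 1.81 GBytes 1.55 Gbits/sec
[ 8] 0.0-10.0 sec 1.91 GBytes 1.64 Gbits/sec
[ 7] 0.0-10.0 sec 6.78 GBytes 5.82 Gbits/sec
[ 10] 0.0-10.0 sec 1.91 GBytes 1.64 Gbits/sec
[ 4] 0.0-10.0 sec 6.62 GBytes 5.69 Gbits/sec
[ 5] 0.0-10.0 sec 3.21 GBytes 2.76 Gbits/sec
[SUM] 0.0-10.0 sec 32.3 GBytes 27.7 Gbits/sec
"""

# Matches numbered stream lines only; the [SUM] line has no numeric ID.
STREAM_RE = re.compile(
    r"\[\s*(\d+)\]\s+\S+\s+sec\s+\S+\s+GBytes\s+([\d.]+)\s+Gbits/sec"
)


def per_stream_gbits(text):
    """Map iperf stream ID to its reported bandwidth in Gbit/s."""
    return {int(m.group(1)): float(m.group(2)) for m in STREAM_RE.finditer(text)}


def summarize(text):
    """Return stream count, total, slowest, fastest and fastest/slowest ratio."""
    values = list(per_stream_gbits(text).values())
    return {
        "streams": len(values),
        "total": sum(values),
        "min": min(values),
        "max": max(values),
        "spread": max(values) / min(values),
    }


summary = summarize(IPERF_SUMMARY)
print(f"{summary['streams']} streams, total {summary['total']:.2f} Gbit/s")
print(f"slowest {summary['min']:.2f}, fastest {summary['max']:.2f}, "
      f"ratio {summary['spread']:.1f}x")
```

The streams fall into rough groups near 5.8, 2.75 and 1.6 Gbit/s. That pattern might come from how the flows are spread over NIC queues or CPU cores, but that is a guess. The output alone does not show the cause.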

Even with that, my storage vMotion tasks aren't going as quickly as they did on my older setup (mostly the same cards, Cisco C240S M3 - E5-2637 v2 @ 3.50GHz). I also upgraded vCenter and vSphere ESXi to 6.7. It appears that vCenter is only allowing 2 storage vMotion tasks to run at once. I had tuned this in vCenter 6.5 to allow 4 vMotion tasks at once, and I could frequently get 9G+ read and 4G+ write. I can still occasionally get 9G read, but the write is down to 2G+.


I did have autotune on for the older 11.2 systems, but I have now turned it off. I installed 11.3-U3 fresh, and then restored the saved config from the 11.2 systems. What the heck am I missing? :-(